A Squad Of Open-Source LLMs Can Now Beat OpenAI’s Closed-Source GPT-4o

A deep dive into how the Mixture-of-Agents (MoA) model leverages the collective strengths of multiple open-source LLMs to beat GPT-4o

There has been a constant battle between open-source and proprietary AI.

The war has been fierce, so much so that Sam Altman once said on his visit to India that developers can try to build AI like ChatGPT, but they will never succeed in this pursuit.

But Sam has been proven wrong.

A team of researchers recently published a preprint on arXiv showing how multiple open-source LLMs can be combined to achieve state-of-the-art performance on several LLM evaluation benchmarks, surpassing GPT-4 Omni (GPT-4o), OpenAI's flagship model.

They called this model Mixture-of-Agents (MoA).

They showed that a Mixture-of-Agents built solely from open-source LLMs achieved a length-controlled win rate of 65.1% on AlpacaEval 2.0, compared with 57.5% for GPT-4 Omni.

This is highly impressive!

This suggests that the future of AI may no longer rest solely in the hands of big tech building software behind closed doors, but could become more democratized, transparent, and collaborative.

It also means that developers may no longer need to focus on training a single model on several trillion tokens, a process whose compute can cost hundreds of millions of dollars.

Instead, they can harness the collaborative expertise of multiple LLMs with diverse strengths to output impressive results.

In this story, we take a deep dive into the Mixture-of-Agents model, how it differs from Mixture-of-Experts (MoE), and how it performs in different configurations.

How Does Mixture-of-Agents Work?

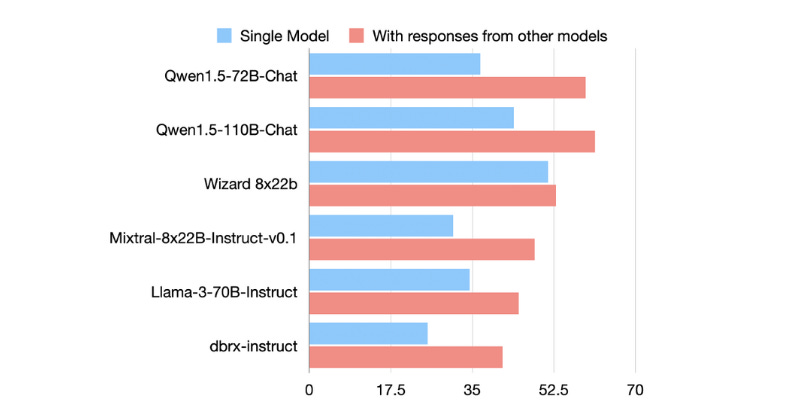

The Mixture-of-Agents (MoA) model is built on a phenomenon the researchers call the Collaborativeness of LLMs.

This is the tendency of an LLM to generate better responses when it is given the outputs of other LLMs, even if those other LLMs are less capable on their own.

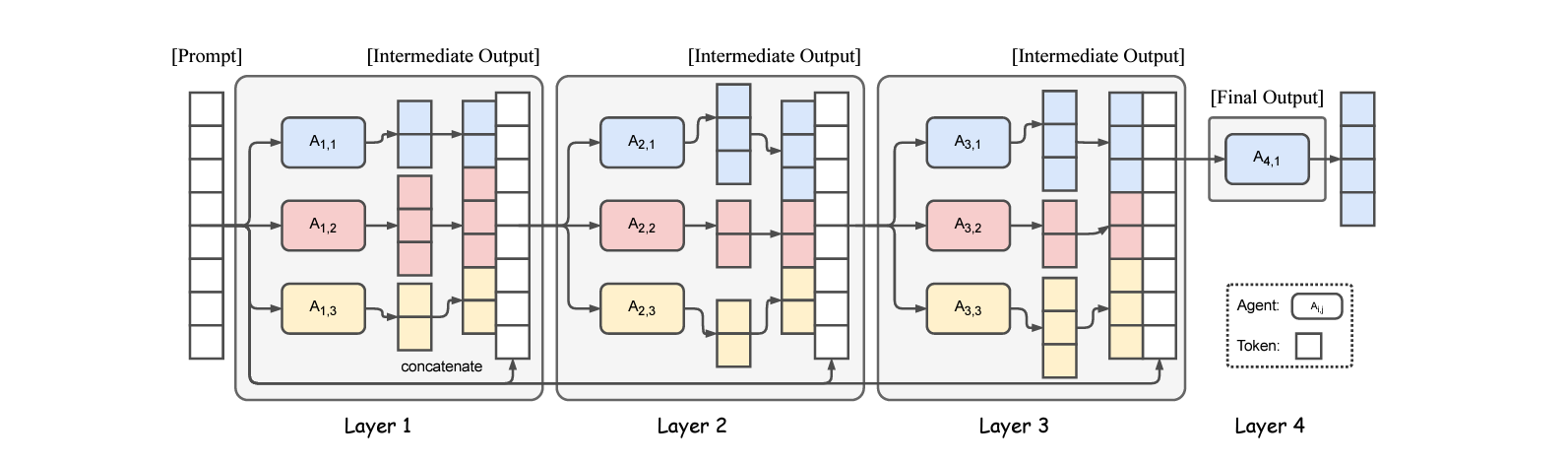

Leveraging this phenomenon, the MoA model is organized into multiple layers, each formed using multiple LLMs (agents).

Each LLM agent in a layer receives input from all the agents in the previous layer and generates an output based on the combined information.

This is how it works in detail.

The LLM agents in the first layer are given an input prompt.

The responses they generate are then passed to the agents in the next layer.

This process is repeated at each layer, with each layer refining the responses generated by the previous one.

The final layer aggregates the responses it receives into a single, high-quality output, which is the overall model's final answer.

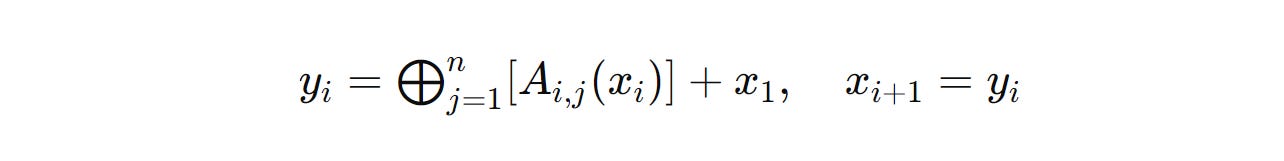

Consider an MoA model with l layers, where each layer i contains n LLMs, denoted A(i,j) for j = 1 to n.

For a given input prompt x(1), the output of the i-th layer, y(i), can be expressed as follows:

$$
y_i = \bigoplus_{j=1}^{n} \left[ A_{i,j}(x_i) \right] + x_1, \qquad x_{i+1} = y_i
$$

where:

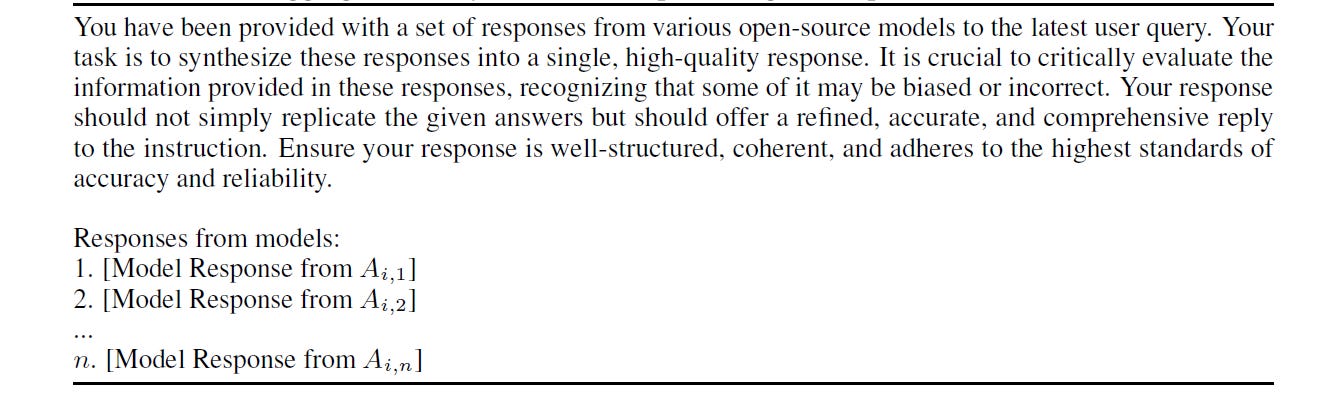

y(i) is the output of the i-th layer

A(i,j) is the j-th LLM agent in the i-th layer

x(i) is the input to the i-th layer

The + symbol represents the concatenation of texts

⨁ represents the application of the Aggregate-and-Synthesize prompt to the outputs (shown in the image below)

Only one LLM agent, A(l,1), is used in the last (l-th) layer, and its output is the final response of the MoA model, which is used for evaluation.
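To make the layered flow concrete, here is a minimal Python sketch of an MoA forward pass. It assumes that each agent is a plain function from prompt text to response text (stand-ins for real LLM calls), that each layer's output y(i) is the ⨁-combined responses concatenated with the original prompt x(1), and that y(i) becomes the next layer's input x(i+1). The helper `aggregate_and_synthesize` is hypothetical: it only numbers the responses instead of applying the researchers' actual prompt.

```python
from typing import Callable, List

# Hypothetical type: an agent is any function from a prompt string to a response string.
# In a real system, each agent would wrap a call to an LLM.
Agent = Callable[[str], str]


def aggregate_and_synthesize(responses: List[str]) -> str:
    """Hypothetical stand-in for the ⨁ operator.

    A real MoA would wrap the responses in the Aggregate-and-Synthesize prompt;
    here we only number them so the next layer can see every response.
    """
    return "\n".join(f"[{k}] {r}" for k, r in enumerate(responses, start=1))


def mixture_of_agents(layers: List[List[Agent]], x1: str) -> str:
    """Run an l-layer MoA on the prompt x1 and return the final response."""
    if not layers or len(layers[-1]) != 1:
        raise ValueError("the final layer must contain exactly one agent")
    x = x1  # x(1): the user's prompt
    for agents in layers[:-1]:
        # Every agent A(i,j) in layer i receives the same input x(i).
        responses = [agent(x) for agent in agents]
        # y(i): combined responses, concatenated with the original prompt x(1).
        y = aggregate_and_synthesize(responses) + "\n" + x1
        x = y  # x(i+1) = y(i)
    # The single agent A(l,1) in the last layer produces the final answer.
    return layers[-1][0](x)


def echo(prompt: str) -> str:
    """Hypothetical aggregator that returns its input unchanged."""
    return prompt


if __name__ == "__main__":
    proposers = [lambda p, name=name: f"{name} answer" for name in ("A", "B")]
    print(mixture_of_agents([proposers, [echo]], "What is MoA?"))
```

Because agents are just callables, swapping a stub for a real API client leaves the loop unchanged; the check on the last layer mirrors the rule that only A(l,1) produces the final response.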

The Roles Of Different LLM Agents In Mixture-of-Agents

LLMs used in an MoA layer can be of two different types:

Proposers: The role of these LLMs is to generate diverse responses. These may not score highly on performance metrics individually, but they give the Aggregators useful context and perspectives.

Aggregators: The role of these LLMs is to combine the responses coming from the Proposers into a single, high-quality response.

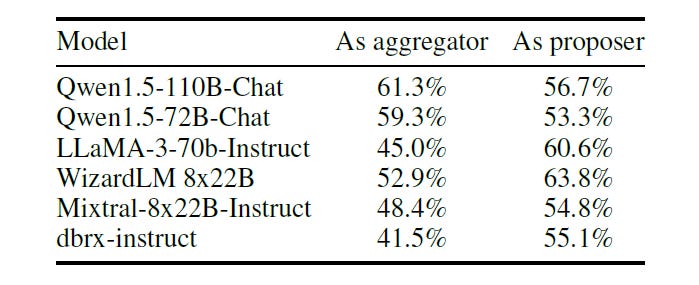

It was noted that Qwen1.5 and LLaMA-3 were effective as both Proposers and Aggregators.

On the other hand, WizardLM and Mixtral-8x22B performed better as Proposers than as Aggregators.
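Since aggregation in MoA happens purely through prompting, an Aggregator's input is just text assembled from the Proposers' responses. The sketch below shows one hypothetical way to build that text. The instruction wording is a paraphrase written for this example, not the researchers' exact Aggregate-and-Synthesize prompt, and `build_aggregator_prompt` is an illustrative name.

```python
from typing import List

# Hypothetical paraphrase of an Aggregate-and-Synthesize instruction;
# the researchers' exact prompt wording is not reproduced here.
AGGREGATE_AND_SYNTHESIZE = (
    "You have been given several responses to the user's query from different models. "
    "Some of them may be biased or incorrect, so evaluate them critically. "
    "Synthesize them into a single, accurate, well-structured answer instead of "
    "copying any one response."
)


def build_aggregator_prompt(user_query: str, proposals: List[str]) -> str:
    """Assemble the text an Aggregator LLM would receive from the Proposers."""
    if not proposals:
        raise ValueError("an aggregator needs at least one proposal")
    numbered = "\n".join(f"{k}. {text}" for k, text in enumerate(proposals, start=1))
    return (
        f"{AGGREGATE_AND_SYNTHESIZE}\n\n"
        f"Responses from models:\n{numbered}\n\n"
        f"User query: {user_query}"
    )


if __name__ == "__main__":
    print(build_aggregator_prompt("What is 2 + 2?", ["4", "It is four."]))
```

Because the Aggregator only ever sees text, any instruction-following LLM can fill this role without fine-tuning, which is why the choice of Aggregator comes down to how well a model handles this kind of prompt.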

Wait, But This Looks A Bit Like The Mixture-of-Experts Model

Yes! The MoA model draws inspiration from the Mixture-of-Experts (MoE) model, but there are considerable differences as well.

Let’s first learn what the Mixture-of-Experts (MoE) model is.

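Popularized in deep learning in 2017 with the sparsely-gated MoE layer (the idea itself dates back to the early 1990s), the MoE model combines multiple neural networks, called Experts, that can specialize in different skills (for example, natural language understanding, code generation, or mathematical problem solving).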

An MoE model consists of multiple layers, called MoE layers, each of which contains multiple expert networks.

A Gating Network decides which experts are activated for a given input.

It also assigns a weight to each selected expert's output, controlling how much that expert contributes to the layer's combined output.

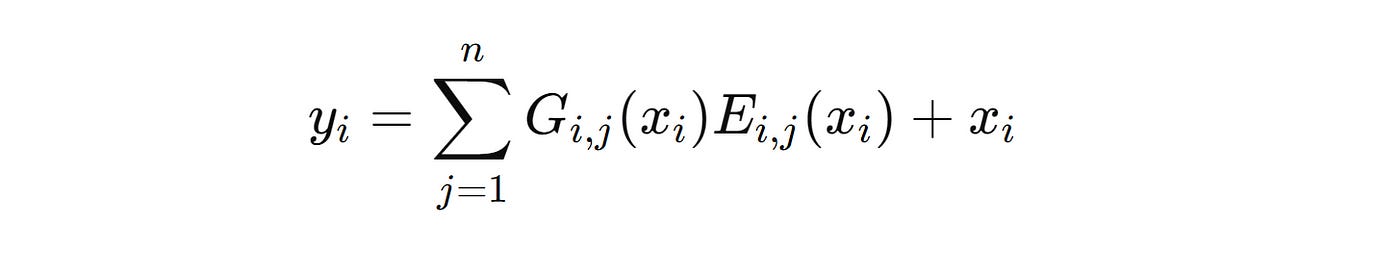

For the i-th MoE layer with n experts, the output y(i) can be expressed as follows:

$$
y_i = \sum_{j=1}^{n} G_{i,j}(x_i)\, E_{i,j}(x_i) + x_i
$$

where:

x(i) is the input to the i-th MoE layer

G(i,j) is the output of the gating network corresponding to the j-th Expert in the i-th layer

E(i,j) is the function computed by the j-th Expert in the i-th layer
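For contrast with MoA's prompt-based aggregation, here is a minimal pure-Python sketch of a single MoE layer, in which a gating network weights each expert's output. It assumes a linear gate, top-k routing with a softmax over the selected experts (a common design choice, not something the text above specifies), and a residual connection that adds the layer input x(i) back to the weighted sum (also an assumption of this sketch). The toy experts in the usage example are hypothetical.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_layer(x: Vector, experts: List[Expert], gate_weights: List[Vector],
              top_k: int = 2) -> Vector:
    """One MoE layer: gate-weighted sum of the top-k experts' outputs, plus x."""
    # Gating network (assumed to be a single linear map): one score per expert.
    logits = [sum(w_d * x_d for w_d, x_d in zip(w, x)) for w in gate_weights]
    # Keep the top_k highest-scoring experts; the others get weight 0 and never run.
    top = sorted(range(len(experts)), key=lambda j: logits[j], reverse=True)[:top_k]
    gates = softmax([logits[j] for j in top])  # G(i,j) for the selected experts
    y = list(x)  # residual connection (an assumption of this sketch)
    for g, j in zip(gates, top):
        out = experts[j](x)  # E(i,j)(x)
        y = [y_d + g * o_d for y_d, o_d in zip(y, out)]
    return y


if __name__ == "__main__":
    experts = [
        lambda v: [2 * a for a in v],  # hypothetical expert: doubles the input
        lambda v: [-a for a in v],     # hypothetical expert: negates the input
        lambda v: [0.0 for _ in v],    # hypothetical expert: outputs zeros
    ]
    gate_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
    print(moe_layer([1.0, 2.0], experts, gate_weights))
```

Top-k routing is what keeps MoE layers cheap: unselected experts are never evaluated. MoA has no such gate, so every agent in a layer runs on every query.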

Although they seem very similar, when compared with MoE, the MoA model:

uses full-fledged LLMs instead of sub-networks across different layers

aggregates the outputs of multiple agents using prompting (Aggregate-and-Synthesize prompt) instead of a Gating network

requires no fine-tuning or modification of the LLMs' internal architecture, so any off-the-shelf LLM can serve as an agent

The Performance Of The Mixture-of-Agents Model

The MoA model constructed by the researchers consisted of the following models:

The model structure included three layers with the same set of models in each layer.

Qwen1.5-110B-Chat was used as the aggregator in the final layer.

Two other variants were created along with this, as follows:

MoA w/ GPT-4o: This variant used GPT-4o as the aggregator in the final layer.

MoA-Lite: This variant used two layers instead of three, with Qwen1.5-72B-Chat as the aggregator in the final layer. Its goal was to reduce the overall cost of using the model.

The performance of these architectures was evaluated on three standard benchmarks: AlpacaEval 2.0, MT-Bench, and FLASK.

The results of these are shown below.

Performance On AlpacaEval 2.0

During evaluation, the ‘Win’ metric measures how often a GPT-4-based evaluator prefers a model's response over the response of GPT-4 (gpt-4-1106-preview).

A second metric, the ‘Length-controlled (LC) Win’ rate, makes the comparison fairer by statistically controlling for response length, so that a model cannot win simply by producing longer answers.

The best MoA configuration achieved an impressive 8.2% absolute improvement in LC win rate over GPT-4o, and the open-source-only MoA scored 65.1% against GPT-4o's 57.5%. Even the cost-effective MoA-Lite variant outperformed GPT-4o by 1.8% on this metric.

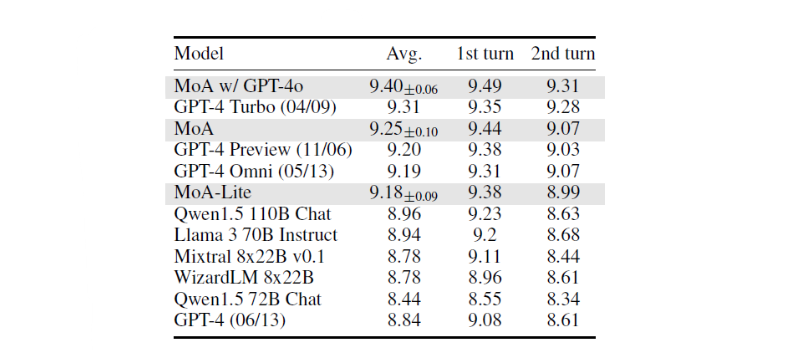
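As a rough illustration of what a win rate measures, the sketch below averages per-prompt judge preferences against a reference model. The preference convention (1 for a win, 0 for a loss, 0.5 for a tie) and the name `win_rate` are assumptions for this example. AlpacaEval's LC win rate additionally applies a statistical adjustment for response length, which this sketch does not attempt.

```python
from statistics import mean
from typing import Sequence


def win_rate(preferences: Sequence[float]) -> float:
    """Raw win rate (%) of a model against a reference model.

    Each entry is the judge's preference for the evaluated model on one prompt:
    1.0 = model preferred, 0.0 = reference preferred, values in between for ties
    or soft preferences (an assumed convention for this sketch).
    """
    if not preferences:
        raise ValueError("need at least one judgment")
    if any(not 0.0 <= p <= 1.0 for p in preferences):
        raise ValueError("preferences must lie in [0, 1]")
    return 100.0 * mean(preferences)


if __name__ == "__main__":
    # Hypothetical judgments on four prompts: win, loss, win, tie.
    print(win_rate([1.0, 0.0, 1.0, 0.5]))  # 62.5
```

A raw win rate like this rewards a model whenever the judge prefers it, including when the preference is driven by verbosity, which is exactly the bias the LC variant is designed to remove.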

Performance On MT-Bench

This benchmark uses three metrics that score the models out of 10.

Avg: the overall average score obtained by a model

1st Turn: the score for the initial response

2nd Turn: the score for the follow-up response in a conversation

MoA and MoA-Lite performed competitively with GPT-4o on this benchmark.

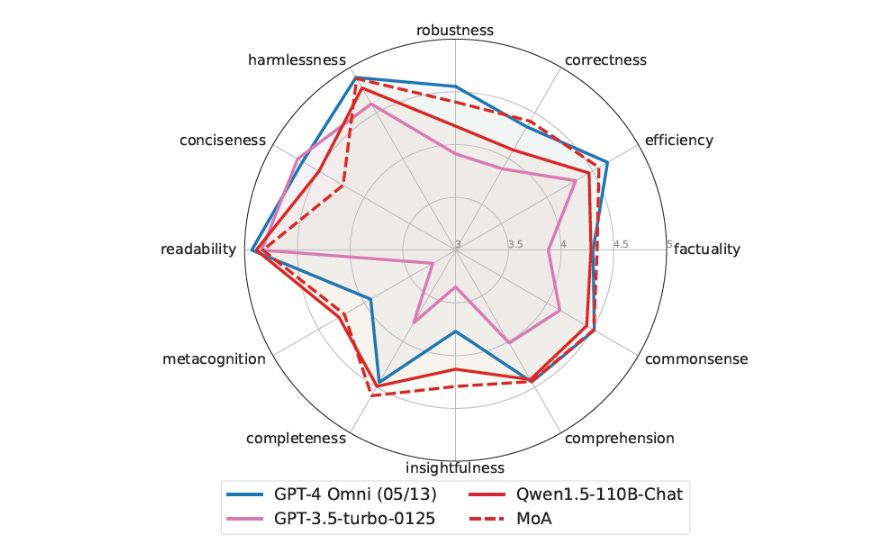

Performance On FLASK

This benchmark provides a more fine-grained performance evaluation of the models.

MoA outperformed GPT-4o on many FLASK metrics, with the exception of Conciseness, where it fell behind because its outputs were more verbose.

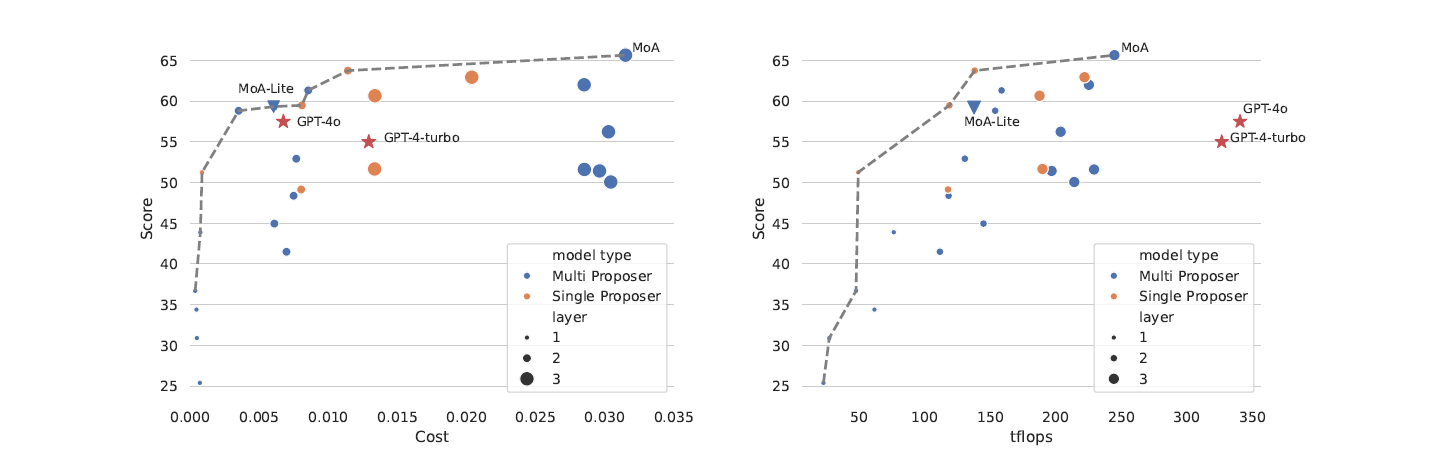

Cost & Computational Efficiency

In terms of cost efficiency, MoA-Lite matched GPT-4o's cost while achieving higher response quality (a higher LC win rate).

When cost is not a concern, MoA was the best configuration, achieving the highest LC win rate.

By contrast, GPT-4 Turbo and GPT-4o were not cost-optimal: they were more expensive than MoA configurations that reached the same LC win rate.

It was also found that MoA and MoA-Lite utilized their computational resources more effectively to achieve high LC win rates when compared to GPT-4 Turbo and GPT-4o.
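The idea of being "cost-optimal" can be made precise with a Pareto front: a configuration is on the front if no other configuration is both no more expensive and no worse in quality (and strictly better on at least one of the two). The minimal sketch below computes that front; the function name, configuration names, and numbers are hypothetical and are not results from the paper.

```python
from typing import Dict, List, Tuple


def pareto_front(configs: Dict[str, Tuple[float, float]]) -> List[str]:
    """Names of configurations that are cost-optimal for their quality.

    Each value is (cost, quality). A configuration is dominated if some other one
    costs no more and scores no lower, while being strictly better on at least one axis.
    """
    front = []
    for name, (cost, quality) in configs.items():
        dominated = any(
            c <= cost and q >= quality and (c < cost or q > quality)
            for other, (c, q) in configs.items()
            if other != name
        )
        if not dominated:
            front.append(name)
    return front


if __name__ == "__main__":
    # Hypothetical (cost, LC win rate in %) pairs, not results from the paper.
    configs = {
        "cheap": (1.0, 50.0),
        "balanced": (2.0, 60.0),
        "pricey": (3.0, 55.0),
    }
    print(pareto_front(configs))  # ['cheap', 'balanced']
```

Here "pricey" is dominated because "balanced" is both cheaper and scores higher; a model that falls off the front in this sense is what the comparison above means by not being cost-optimal.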

What are your thoughts on this novel methodology, and how has your overall experience been using open-source LLMs in your projects?

Let me know in the comments below!