Build A Vector Database From Scratch To Understand RAG In Depth

A hands-on lesson on building a Vector Database and performing RAG over it

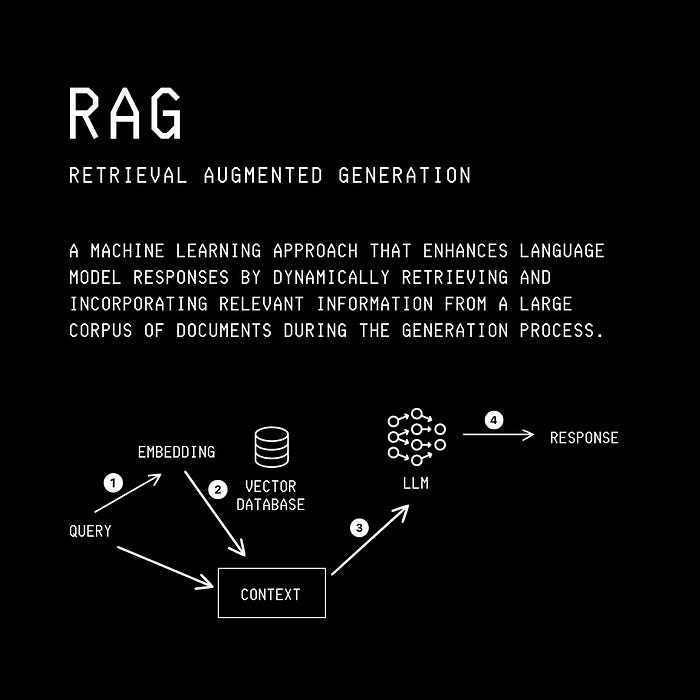

RAG, or Retrieval-Augmented Generation, first published in 2020, has now become an industry standard.

The same goes for Vector Databases used in the RAG workflow.

If you’re new to it, or want to understand it in depth, here’s a lesson where we build a Vector database to perform RAG on it, all from scratch.

What Is RAG?

LLMs come with limited knowledge, which is fixed by their training data.

To ensure that LLMs can answer queries using up-to-date data, we can fine-tune them with relevant datasets. This improves an LLM’s internal knowledge.

There’s a catch here, though.

Although fine-tuning brings an LLM’s knowledge up to date, it must be repeated every time the data becomes outdated.

RAG (Retrieval-Augmented Generation), on the other hand, is a technique that lets LLMs access up-to-date and trustworthy information by connecting them to external knowledge sources.

This allows LLMs to dynamically generate responses based on external, verifiable data sources rather than just their internal knowledge.

The terms in RAG mean the following:

Retrieval: the process of retrieving relevant information or documents from a knowledge base or specific private datasets.

Augmentation: the process where the retrieved information is added to the input context of an LLM.

Generation: the process of the LLM generating a response based on the original query and its augmented context.

A typical RAG pipeline works as follows:

A user queries an LLM.

The user query is passed through an Embedding model that converts the query into an Embedding (dense vector).

This vectorized query (Query embedding) is used to search a Vector Database. This search returns the top-K most relevant documents from the database that could be used to answer the query.

The retrieved documents are combined with the original user query and passed to the LLM as context.

The LLM generates a response and returns it to the user.
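The retrieval and augmentation steps above can be sketched in a few lines. This is only an illustration under loud assumptions: `toy_embed` is a hypothetical bag-of-words stand-in for a real embedding model, the documents are made up, and the final LLM call is left out, so the sketch stops at the prompt that would be sent to the LLM.

```python
import re
import numpy as np

def tokenize(text):
    # Lowercase the text and keep only alphanumeric words
    return re.findall(r"[a-z0-9]+", text.lower())

def build_vocab(texts):
    # Assign each distinct word a position in the vector
    vocab = {}
    for text in texts:
        for word in tokenize(text):
            vocab.setdefault(word, len(vocab))
    return vocab

def toy_embed(text, vocab):
    # Hypothetical stand-in for an embedding model: word counts over the vocabulary
    vec = np.zeros(len(vocab))
    for word in tokenize(text):
        if word in vocab:
            vec[vocab[word]] += 1.0
    return vec

def retrieve(query, documents, vocab, top_k=1):
    # Retrieval: rank the documents by cosine similarity to the query embedding
    q = toy_embed(query, vocab)
    scored = []
    for doc in documents:
        d = toy_embed(doc, vocab)
        score = float(np.dot(q, d) / (np.linalg.norm(q) * np.linalg.norm(d) + 1e-8))
        scored.append((score, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]

def build_prompt(query, retrieved):
    # Augmentation: place the retrieved documents in front of the user query
    context = "\n".join(retrieved)
    return f"Context:\n{context}\n\nQuestion: {query}"

documents = [
    "The library opens at nine on weekdays.",
    "Parking is free for visitors.",
    "The cafeteria serves lunch at noon.",
]
vocab = build_vocab(documents)
query = "When does the library open on weekdays?"
top_docs = retrieve(query, documents, vocab, top_k=1)
prompt = build_prompt(query, top_docs)
print(prompt)
```

In a real pipeline, the generation step would pass `prompt` to an LLM, and a trained embedding model would replace `toy_embed`.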

Note how this process is different from the usual workflow where a user queries an LLM and directly gets a response based on the LLM’s internal information.

The Vector database is a crucial component of the RAG workflow.

How about we build one from scratch so that we can understand everything better?

Building A Vector Database From The Ground Up

(All of the code below is written in a Google Colab notebook.)

We would like our Vector database to have the following minimal functionalities:

Insertion of new vectors

Viewing all vectors

Searching for and returning similar vectors to a query vector

Let’s implement these, step by step.

For simplicity, we will store the vectors in an array.

    class VectorDatabase:
        def __init__(self):
            # Store all vectors in an array
            self.vectors = []

Next, we add a method that lets us add vectors/embeddings (along with an ID and associated metadata) to it.

    import numpy as np

    class VectorDatabase:
        def __init__(self):
            # Store all vectors in an array
            self.vectors = []

        # Add vector to database
        def add_vector(self, vec_id, vector, metadata=None):
            record = {
                "id": vec_id,
                "vector": np.array(vector, dtype=np.float32),
                "metadata": metadata
            }
            self.vectors.append(record)

We then add a new method to the class to view all the stored vectors as follows.

    import numpy as np

    class VectorDatabase:
        def __init__(self):
            # Store all vectors in an array
            self.vectors = []

        # Add vector to database
        def add_vector(self, vec_id, vector, metadata=None):
            record = {
                "id": vec_id,
                "vector": np.array(vector, dtype=np.float32),
                "metadata": metadata
            }
            self.vectors.append(record)

        # Retrieve all vectors from database
        def get_all_vectors(self):
            return self.vectors

Here comes the interesting part.

Our Vector database will use the cosine similarity between vectors to find the most similar stored vectors to a query vector.

Cosine similarity is the cosine of the angle between the vectors, i.e., it is the dot product of the vectors divided by the product of their lengths.

The cosine similarity between two vectors A and B can be calculated using the following formula:

$$\cos(\theta) = \frac{A \cdot B}{\|A\| \, \|B\|}$$

where:

A⋅B is the dot product of vectors A and B

||A|| and ||B|| are the magnitudes (lengths) of vectors A and B, respectively

Cosine similarity ignores vector magnitudes and focuses on their direction in space.

It ranges from -1 to 1.

If two vectors point in the same direction, the angle between them is small, and the cosine is close to 1 (maximum similarity/ identical meaning for sentence embeddings).

If they are orthogonal (90°), the cosine is 0 (no similarity).

If they point in opposite directions, the cosine is -1 (maximum dissimilarity/opposite meaning for sentence embeddings).
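The three cases above can be checked numerically. The snippet below is a small sketch with made-up 2-D vectors; `cosine_similarity` is a standalone helper written for this illustration, separate from the class method defined later in the lesson.

```python
import numpy as np

def cosine_similarity(a, b):
    # Dot product divided by the product of the vector lengths
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

# Same direction, different lengths: magnitude does not matter
same_direction = cosine_similarity([1, 2], [3, 6])
# Perpendicular vectors (90 degrees apart)
orthogonal = cosine_similarity([1, 0], [0, 5])
# Opposite directions
opposite = cosine_similarity([1, 2], [-2, -4])

print(round(same_direction, 6), round(orthogonal, 6), round(opposite, 6))
```

Up to floating-point rounding, the three values come out as 1, 0, and -1. Note that `[3, 6]` is three times as long as `[1, 2]`, yet the similarity is still 1, because only the direction matters.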

Let’s define a private method in our Vector database to calculate the cosine similarity.

    import numpy as np

    class VectorDatabase:
        def __init__(self):
            # Store all vectors in an array
            self.vectors = []

        # Add vector to database
        def add_vector(self, vec_id, vector, metadata=None):
            record = {
                "id": vec_id,
                "vector": np.array(vector, dtype=np.float32),
                "metadata": metadata
            }
            self.vectors.append(record)

        # Retrieve all vectors from database
        def get_all_vectors(self):
            return self.vectors

        # Calculate cosine similarity between vectors
        def _cosine_similarity(self, vec_a, vec_b):
            # Calculate dot product
            dot_product = np.dot(vec_a, vec_b)
            # Calculate the magnitude of vector A
            norm_a = np.linalg.norm(vec_a)
            # Calculate the magnitude of vector B
            norm_b = np.linalg.norm(vec_b)
            cos_sim = dot_product / (norm_a * norm_b + 1e-8)  # small epsilon to avoid division by zero
            return cos_sim

The search method we create next uses this method to find the stored vectors most similar to a query vector.

    import numpy as np

    class VectorDatabase:
        def __init__(self):
            # Store all vectors in an array
            self.vectors = []

        # Add vector to database
        def add_vector(self, vec_id, vector, metadata=None):
            record = {
                "id": vec_id,
                "vector": np.array(vector, dtype=np.float32),
                "metadata": metadata
            }
            self.vectors.append(record)

        # Retrieve all vectors from database
        def get_all_vectors(self):
            return self.vectors

        # Calculate cosine similarity between vectors
        def _cosine_similarity(self, vec_a, vec_b):
            # Calculate dot product
            dot_product = np.dot(vec_a, vec_b)
            # Calculate the magnitude of vector A
            norm_a = np.linalg.norm(vec_a)
            # Calculate the magnitude of vector B
            norm_b = np.linalg.norm(vec_b)
            cos_sim = dot_product / (norm_a * norm_b + 1e-8)  # small epsilon to avoid division by zero
            return cos_sim

        # Search for similar vectors and return the top_k results
        def search(self, query_vector, top_k=3):
            query_vector = np.array(query_vector, dtype=np.float32)
            # Stores one result per stored vector; trimmed to top_k below
            results = []
            for record in self.vectors:
                sim = self._cosine_similarity(query_vector, record["vector"])
                results.append({
                    "id": record["id"],
                    "similarity": sim,
                    "metadata": record["metadata"]
                })
            # Sort by similarity, highest first
            results.sort(key=lambda x: x["similarity"], reverse=True)
            return results[:top_k]

It’s time to start using our Vector database.

    db = VectorDatabase()

Let’s start by adding some vectors to it.

    np.random.seed(10)

    db.add_vector("vec1", np.random.rand(5), metadata={"text": "First item"})
    db.add_vector("vec2", np.random.rand(5), metadata={"text": "Second item"})
    db.add_vector("vec3", np.random.rand(5), metadata={"text": "Third item"})
    db.add_vector("vec4", np.random.rand(5), metadata={"text": "Fourth item"})
    db.add_vector("vec5", np.random.rand(5), metadata={"text": "Fifth item"})

We can find all the vectors stored in it as follows:

    db.get_all_vectors()

Output:

    [{'id': 'vec1',
      'vector': array([0.77132064, 0.02075195, 0.6336482 , 0.74880385, 0.49850702],
            dtype=float32),
      'metadata': {'text': 'First item'}},
     {'id': 'vec2',
      'vector': array([0.22479665, 0.19806287, 0.7605307 , 0.16911083, 0.08833981],
            dtype=float32),
      'metadata': {'text': 'Second item'}},
     {'id': 'vec3',
      'vector': array([0.68535984, 0.95339334, 0.00394827, 0.51219225, 0.81262094],
            dtype=float32),
      'metadata': {'text': 'Third item'}},
     {'id': 'vec4',
      'vector': array([0.61252606, 0.7217553 , 0.29187608, 0.91777414, 0.71457577],
            dtype=float32),
      'metadata': {'text': 'Fourth item'}},
     {'id': 'vec5',
      'vector': array([0.54254436, 0.14217004, 0.37334076, 0.6741336 , 0.44183317],
            dtype=float32),
      'metadata': {'text': 'Fifth item'}}]

We can create a query vector and find the top 3 similar vectors to it as follows.

    query_vector = np.random.rand(5)
    print("Query vector: ", query_vector)

Output:

    Query vector: [0.43401399 0.61776698 0.51313824 0.65039718 0.60103895]

Next, we run the search:

    results = db.search(query_vector, top_k=3)

    print(f"Query vector: {query_vector}\n")
    for res in results:
        print(f"ID: {res['id']} | Similarity: {res['similarity']:.2f} | Metadata: {res['metadata']}")

Output:

    Query vector: [0.43401399 0.61776698 0.51313824 0.65039718 0.60103895]

    ID: vec4 | Similarity: 0.97 | Metadata: {'text': 'Fourth item'}
    ID: vec5 | Similarity: 0.91 | Metadata: {'text': 'Fifth item'}
    ID: vec3 | Similarity: 0.89 | Metadata: {'text': 'Third item'}

Working With Natural Language In Our Vector Database

Instead of manually creating embeddings, we will use an embedding model to help us do this.

We install the sentence_transformers package for this as follows:

    !pip install sentence_transformers

We then use the all-MiniLM-L6-v2 embedding model from this package.

(When building software for production, you might prefer text-embedding-3-small or text-embedding-3-large, OpenAI’s newest and most performant embedding models at the time of writing.)
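For readers who take the OpenAI route, here is a minimal sketch of what the embedding call might look like. It assumes the official `openai` Python package and an API key set in the `OPENAI_API_KEY` environment variable; `embed_with_openai` is a hypothetical helper name that is not used anywhere else in this lesson.

```python
def embed_with_openai(client, texts, model="text-embedding-3-small"):
    # `client` is assumed to be an `openai.OpenAI` instance.
    # A single request embeds the whole list of input texts.
    response = client.embeddings.create(model=model, input=texts)
    # Each item in response.data carries the embedding of one input text
    return [item.embedding for item in response.data]

# Usage (requires `pip install openai` and a valid API key):
# from openai import OpenAI
# vectors = embed_with_openai(OpenAI(), ["Dogs are loyal animals."])
```

Whichever model you choose, the stored vectors and the query vector must come from the same model, since embeddings from different models are not comparable. The rest of this lesson continues with the local all-MiniLM-L6-v2 model.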

    from sentence_transformers import SentenceTransformer

    model = SentenceTransformer('all-MiniLM-L6-v2')

This is a list of sentences that I will store in the vector database.

    sentences = [
        "Kunal's cat is playing with wool.",
        "Ashish was born on 1st September 1700.",
        "Dogs are loyal animals.",
        "I love eating pizza but only on Fridays.",
        "Manika was born in Bhopal."
    ]

We embed each sentence and add it to the database as follows:

    # Reinstantiate database
    db = VectorDatabase()

    for idx, sentence in enumerate(sentences):
        # Create sentence embedding
        embedding = model.encode(sentence)
        # Add sentence embedding to the database
        db.add_vector(vec_id=f"sent_{idx}", vector=embedding, metadata={"sentence": sentence})

We can query our database in natural language to see if it works well.

    # Query with a sentence
    query = "When is Ashish's birthday?"
    query_vec = model.encode(query)

    # Search the database for similar vectors
    results = db.search(query_vec, top_k=3)

    # Print the results
    print(f"Query: \"{query}\"\n")
    for res in results:
        print(f"Similar Sentence: {res['metadata']['sentence']}")
        print(f"Cosine Similarity Score: {res['similarity']:.2f}\n")

The results below show that we correctly retrieve the top 3 vectors related to our query from our database.

    Query: "When is Ashish's birthday?"

    Similar Sentence: Ashish was born on 1st September 1700.
    Cosine Similarity Score: 0.83

    Similar Sentence: Manika was born in Bhopal.
    Cosine Similarity Score: 0.40

    Similar Sentence: Kunal's cat is playing with wool.
    Cosine Similarity Score: 0.16

Our vector database is now ready for some RAG!
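One design note before moving on: the search method built earlier compares the query with one stored vector at a time in a Python loop, which is easy to read but slows down as the database grows. The sketch below shows one possible alternative, not the lesson's implementation: it stacks the stored vectors into a matrix and computes every similarity in a single NumPy operation. The function name `vectorized_top_k` and the sample vectors are made up for illustration.

```python
import numpy as np

def vectorized_top_k(query_vector, stored_vectors, top_k=3):
    # Stack the stored vectors into a matrix of shape (n, d)
    matrix = np.asarray(stored_vectors, dtype=np.float32)
    query = np.asarray(query_vector, dtype=np.float32)
    # One matrix-vector product yields all n dot products at once
    dots = matrix @ query
    # Product of each stored vector's length with the query's length
    norms = np.linalg.norm(matrix, axis=1) * np.linalg.norm(query)
    sims = dots / (norms + 1e-8)  # small epsilon to avoid division by zero
    # argsort is ascending, so reverse it and keep the first top_k indices
    order = np.argsort(sims)[::-1][:top_k]
    return order, sims[order]

stored = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
indices, scores = vectorized_top_k([2.0, 0.1], stored, top_k=2)
print(indices, scores)
```

Here the query points almost along the first axis, so the first stored vector ranks highest and the diagonal vector comes second. This is still an exhaustive search over every stored vector; it only moves the loop from Python into NumPy.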

Performing RAG Over Our Vector Database

We write a function that, for a given query, uses our vector database to find relevant context and then uses an LLM to generate an appropriate response.

    !pip install openai

    from openai import OpenAI

    def generate_rag_response(query):
        # Reads the API key from the OPENAI_API_KEY environment variable
        client = OpenAI()

        # Obtain relevant context from vector database
        context = ""
        query_embedding = model.encode(query)
        results = db.search(query_embedding, top_k=3)
        for res in results:
            context += f"{res['metadata']['sentence']}\n"

        # Pass the retrieved context along with the query to the LLM
        completion = client.chat.completions.create(
            model="gpt-4o-mini",
            messages=[
                {"role": "system", "content": "You are a helpful assistant. Use the provided context to answer accurately."},
                {"role": "user", "content": f"Context:\n{context}\n\nQuestion: {query}"}
            ],
        )
        response = completion.choices[0].message.content
        return response

We see that this works very well for our query.

    answer = generate_rag_response("When is Ashish's birthday?")
    print(answer)

Output:

    Ashish's birthday is on 1st September.

Want to see what happens when we ask an LLM this question without the RAG workflow?

A response generation attempt without RAG returns the following response.

    def generate_response_without_rag(query):
        client = OpenAI()
        completion = client.chat.completions.create(
            model="gpt-4o-mini",
            messages=[
                {"role": "system", "content": "You are a helpful assistant. Use the provided context to answer accurately."},
                {"role": "user", "content": f"{query}"}
            ],
        )
        response = completion.choices[0].message.content
        return response

    no_rag_answer = generate_response_without_rag("When is Ashish's birthday?")
    print(no_rag_answer)

Output:

    I'm sorry, but I don’t have any information regarding Ashish's birthday.

That’s everything for this lesson!