Top 4 Decoding Strategies In LLMs Explained Simply

Learn all about the magical statistics that make text generation with LLMs possible.

It’s magical how LLMs generate text. What hides beneath this magic is some cool statistics that make text generation possible.

LLMs are next-token predictors for a given context. They do not understand English (or any other human language). Rather, they work with numbers, transforming them based on probability.

Consider the following example when using GPT-2.

Given the context AI is such an important, it is first converted into tokens by a Tokenizer using an algorithm called Byte Pair Encoding (BPE).

import torch

from transformers import AutoTokenizer, AutoModelForCausalLM

# Set device

device = torch.device(”cuda” if torch.cuda.is_available() else “cpu”)

# Load GPT-2 model

model = AutoModelForCausalLM.from_pretrained(”gpt2”)

model.eval()

# Load GPT-2 tokenizer

tokenizer = AutoTokenizer.from_pretrained(”gpt2”)

# Context

context = “AI is such an important”

# Tokenized context

tokens = tokenizer.tokenize(context)

print(tokens)This context, broken down into tokens, is as follows:

[’AI’, ‘Ġis’, ‘Ġsuch’, ‘Ġan’, ‘Ġimportant’]An LLM’s vocabulary is a mapping between integer IDs and different tokens. Each token gets an integer ID based on this vocabulary.

For the above tokens, their integer IDs are as follows:

# Encode tokens to integer IDs

encoded_tokens = tokenizer.encode(context)

print(encoded_tokens)[20185, 318, 884, 281, 1593]Given these integers, an LLM transforms them through multiple mechanisms internally and outputs a sequence of logits.

# Convert integer IDs to tensor and pass through the model

input_ids = torch.tensor([encoded_tokens])

with torch.no_grad():

outputs = model(input_ids)

logits = outputs.logits

# Get logits for the last position

last_logits = logits[:, -1, :] These logits are then converted into probabilities using the softmax function.

# Convert logits to probabilities using softmax

probs = torch.softmax(last_logits, dim=-1)

# Show top 10 predictions

top_probs, top_ids = torch.topk(probs, k=10, dim=-1)

top_probs = top_probs[0].tolist()

top_ids = top_ids[0].tolist()

top_tokens = [tokenizer.decode([i]) for i in top_ids]

print(”Top 10 Next Tokens:\n”)

for token, prob in zip(top_tokens, top_probs):

print(f”{token}: {prob:.4f}”)The top 10 next tokens given the context AI is such an important with their corresponding probabilities are shown below.

Top 10 Next Tokens:

part: 0.1391

player: 0.0460

component: 0.0330

tool: 0.0319

piece: 0.0260

and: 0.0237

step: 0.0220

element: 0.0182

factor: 0.0163

technology: 0.0140Given these tokens and their probabilities, one can use various decoding strategies to generate text. Let’s start with the simplest one of them.

Before we move forward, I want to introduce you to my new book called ‘LLMs In 100 Images’.

It is a collection of 100 easy-to-follow visuals that describe the most important concepts you need to master LLMs today.

Grab your copy today at a special early bird discount using this link.

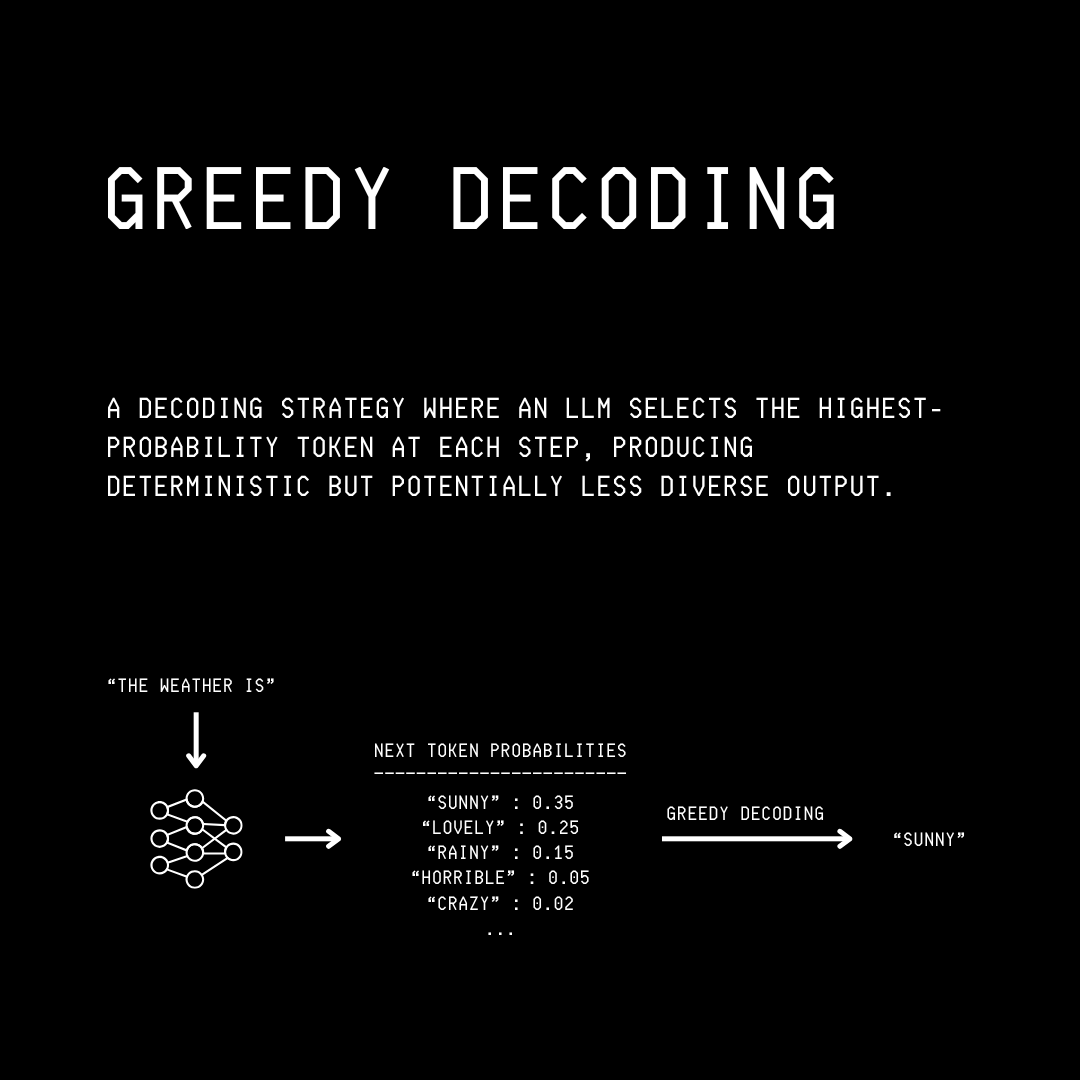

1. Greedy Decoding

With Greedy decoding, at each step of next-token generation, the token with the highest probability is chosen and appended to the sequence of previously generated tokens.

This process is repeated until a stop condition is met, which could be either reaching an end-of-sentence token or the maximum generation length.

This method is fast and easy to implement, but could result in generic text since it is deterministic (non-random), and creative word choices aren’t being picked by it.

The steps of Greedy decoding can be written in Python as follows.

import torch

from transformers import AutoTokenizer, AutoModelForCausalLM

# Load model and tokenizer

device = torch.device(”cuda” if torch.cuda.is_available() else “cpu”)

model_name = “gpt2”

model = AutoModelForCausalLM.from_pretrained(model_name).to(device)

model.eval()

tokenizer = AutoTokenizer.from_pretrained(model_name)

# Context

context = “The weather is”

# Encode the context

inputs = tokenizer(context, return_tensors=”pt”).to(device)

# Max number of tokens to generate

max_gen_tokens = 5

# Generate tokens

with torch.no_grad():

for _ in range(max_gen_tokens):

outputs = model(**inputs)

logits = outputs.logits

# Get the last token’s logits

last_token_logits = logits[:, -1, :]

# Get the index of the token with the highest probability (Greedy)

next_token_id = torch.argmax(last_token_logits, dim=-1, keepdim=True)

# Append the token to input_ids

inputs[”input_ids”] = torch.cat([inputs[”input_ids”], next_token_id], dim=-1)

# Decode the complete sequence

decoded_text = tokenizer.decode(inputs[”input_ids”][0])

print(”Greedy decoded text:”)

print(decoded_text)2. Beam Search

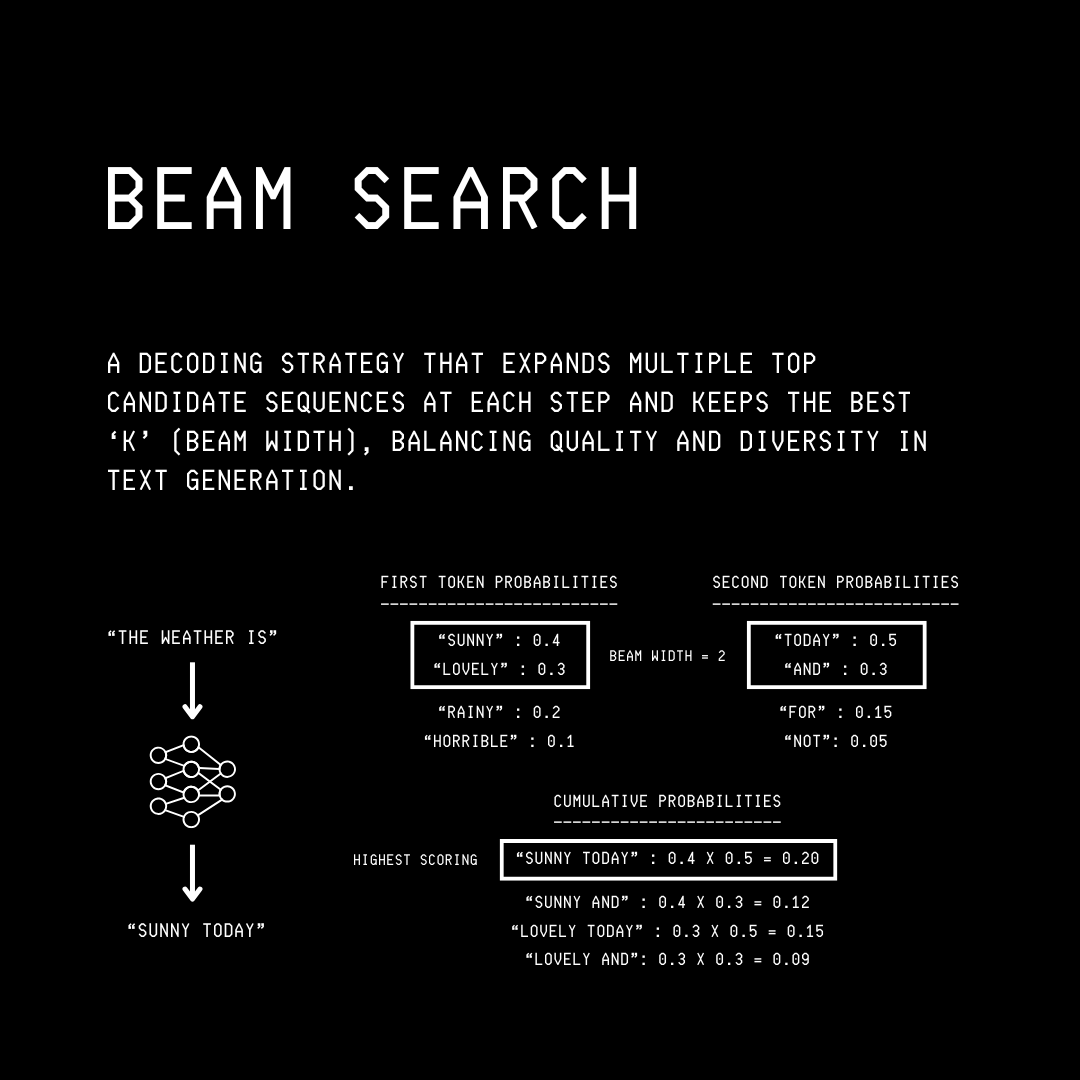

Beam search is another deterministic decoding algorithm that, unlike greedy decoding, looks ahead more intelligently when picking tokens.

Instead of selecting only the top choice at each step, beam search keeps track of the top-B most likely text sequences (called Beams).

At each step, it expands all beams, scores the resulting sequences, and then selects the top-B beams overall.

Let’s learn it better with an example where the context is The weather is.

The next token probabilities for the top four tokens, sorted in descending order, are:

sunny:0.4lovely:0.3rainy:0.2horrible:0.1

With a pre-decided beam width of 2, we select the top two sequences as follows:

The weather is sunny (probability of

0.4)The weather is lovely (probability of

0.3)

For the next token from these sequences, the probabilities are as follows:

today:0.5and:0.3for:0.15not:0.05

(Note that these probability distributions could be different for each of the above sequences, but I’ve kept them the same to make the example easier.)

We again select the top two tokens based on the beam width of 2, i.e. today and and.

Then we expand each beam with these possible next tokens and multiply their probabilities as follows:

sunny today:0.4 × 0.5 = 0.20sunny and:0.4 × 0.3 = 0.12lovely today:0.3 × 0.5 = 0.15lovely and:0.3 × 0.3 = 0.09

Finally, we pick the top two sequences again. Among these, the highest-scoring sequence overall is sunny today, which becomes the selected output.

Beam search can be used as a decoding strategy for LLMs using the generate method from Hugging Face’s transformers library, as shown below.

import torch

from transformers import AutoTokenizer, AutoModelForCausalLM

# Load model and tokenizer

device = torch.device(”cuda” if torch.cuda.is_available() else “cpu”)

model_name = “gpt2”

model = AutoModelForCausalLM.from_pretrained(model_name).to(device)

model.eval()

tokenizer = AutoTokenizer.from_pretrained(model_name)

# Context

context = “The weather is”

# Encode the context

inputs = tokenizer(context, return_tensors=”pt”).to(device)

# Beam search parameters

num_beams = 5 # Number of beams to use

max_gen_tokens = 5 # Number of new tokens to generate

# Beam search generation

with torch.no_grad():

outputs = model.generate(

**inputs,

max_new_tokens = max_gen_tokens,

num_beams = num_beams)

# Decode the generated sequence

decoded_text = tokenizer.decode(outputs[0])

print(”Beam search decoded text:”)

print(decoded_text)It’s now time to learn about Top-P sampling and Top-K sampling.

These methods are non-deterministic, which means that different runs with the same input can produce different outputs. This makes the outputs more diverse and creative.

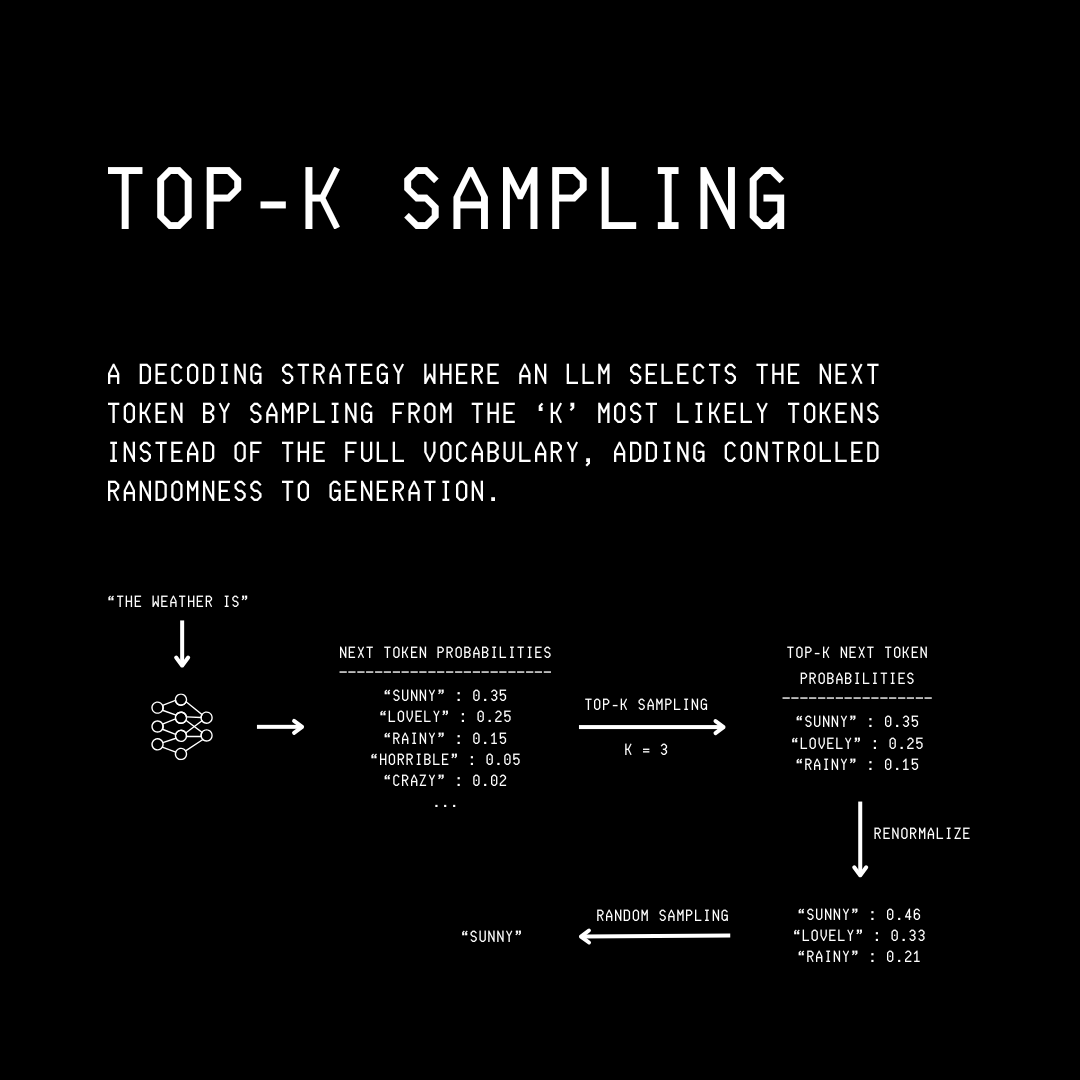

3. Top-K Sampling

When using this decoding strategy, the tokens are first sorted in descending order according to their next-token probabilities.

Then, the top-k tokens are selected from this list.

Next, the probabilities of these top-k tokens are renormalized using the softmax function so that they sum to 1.

Finally, a token is randomly picked from this set of top-k tokens.

Check out the example below, where we set k = 3. For the context The weather is, this leads to choosing the following three tokens with the next-highest next-token probabilities:

Sunny:0.35Lovely:0.25Rainy:0.15

On renormalizing using the softmax function, these probabilities become:

Sunny:0.46Lovely:0.33Rainy:0.21

One token out of these is then randomly chosen, which is Sunny in this case.

The steps of Top-K sampling can be written in Python as follows.

import torch

from transformers import AutoTokenizer, AutoModelForCausalLM

# Load model and tokenizer

device = torch.device(”cuda” if torch.cuda.is_available() else “cpu”)

model_name = “gpt2”

model = AutoModelForCausalLM.from_pretrained(model_name).to(device)

model.eval()

tokenizer = AutoTokenizer.from_pretrained(model_name)

# Context

context = “The weather is”

# Encode the context

encoded_tokens = tokenizer.encode(context)

# Start with the initial encoded tokens

generated_tokens = encoded_tokens[:]

# Number of tokens to generate

max_gen_tokens = 5

# Top-k value

top_k = 3

for _ in range(max_gen_tokens):

# Convert current sequence to tensor

input_ids = torch.tensor([generated_tokens]).to(device)

# Forward pass through the model

with torch.no_grad():

outputs = model(input_ids)

logits = outputs.logits

# Get logits for the last position

last_token_logits = logits[:, -1, :]

# Top-k filtering (Keep top-k tokens, set the rest to -inf)

topk_logits, topk_indices = torch.topk(last_token_logits, k=top_k, dim=-1)

# Convert to probabilities using softmax (Softmax converts -inf to probability 0)

probs = torch.softmax(topk_logits, dim=-1)

# Sample one token from the top-k distribution

sampled_index = torch.multinomial(probs, num_samples=1)

next_token_id = topk_indices[0, sampled_index].item()

# Append the sampled token

generated_tokens.append(next_token_id)

# Decode the complete sequence of tokens

decoded_text = tokenizer.decode(generated_tokens)

print(”Top-k sampled text:”)

print(decoded_text)Next comes Top-P or Nucleus sampling.

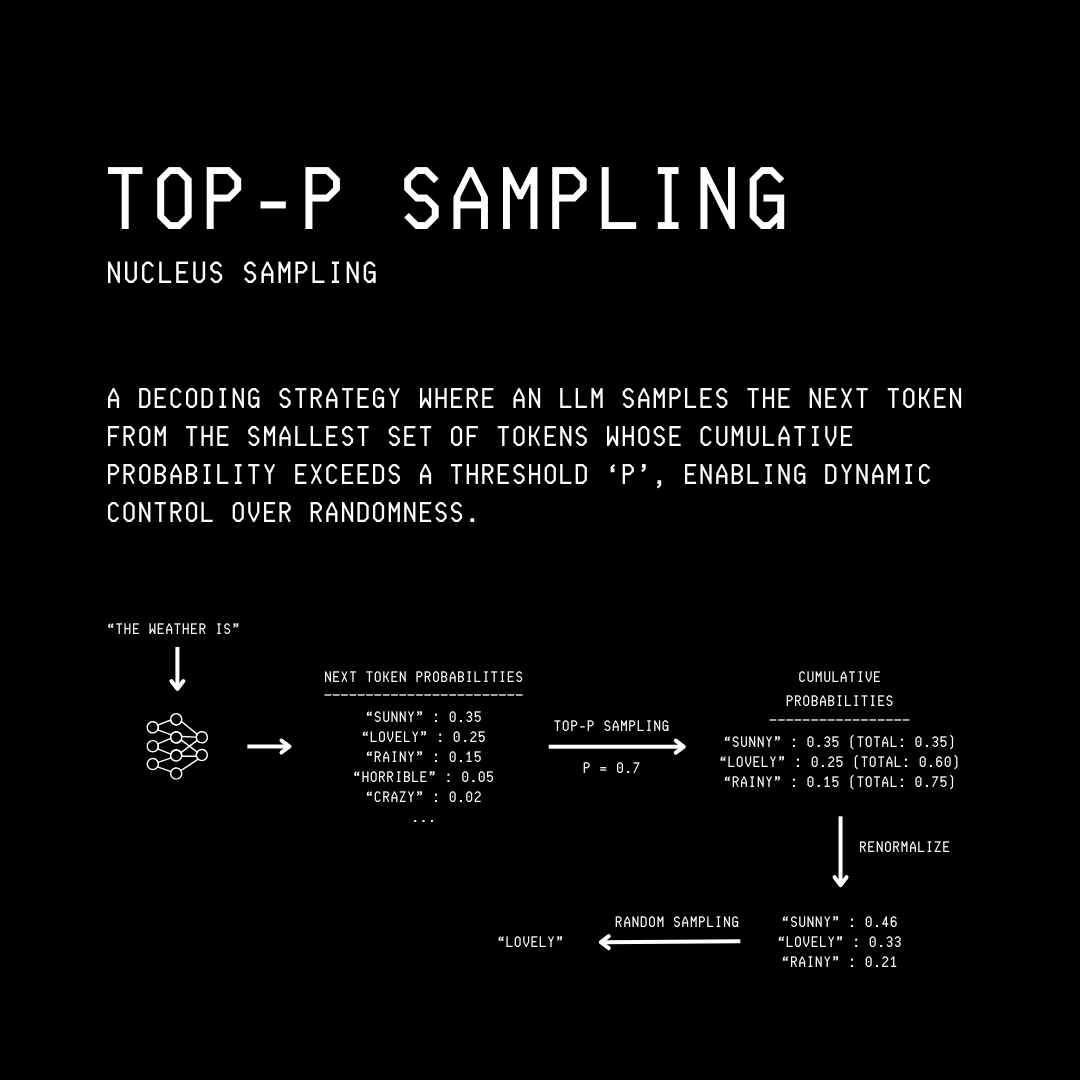

4. Top-P Sampling/ Nucleus Sampling

With Top-P sampling, the tokens are first sorted in descending order according to their next-token probabilities.

Then, the smallest set of tokens whose cumulative probability exceeds our pre-defined p threshold is chosen. This set is called the Nucleus.

Next, the probabilities of this set are renormalized so that they sum to 1.

Finally, a token is randomly picked from this set.

Check out the example below, where we set p = 0.7. For the context The weather is, consider the following three tokens with their corresponding next-highest next-token probabilities:

Sunny:0.35Lovely:0.25Rainy:0.15

Based on these, the following cumulative probabilities are obtained:

For a set with just the first token

sunny, the cumulative probability is0.35which is smaller than ourpthreshold of0.7so we include the next token with the highest probability in the set.For a set with

sunnyandlovely, the cumulative probability is0.35 + 0.25 = 0.60, which is still smaller than ourpthreshold of0.7so we again include the next token with the highest probability in the set.For a set with

sunny,rainy, andlovely, the cumulative probability is0.35 + 0.25 + 0.15 = 0.75, which is larger than ourpthreshold of0.7so we stop here. This set of three tokens is our nucleus.

Next, we renormalize the probabilities of these three tokens in the nucleus as follows:

sunny:0.46lovely:0.33rainy:0.21

Following this, a token is randomly picked. This token is lovely in our case.

The steps of Top-P sampling can be written in Python as follows.

import torch

from transformers import AutoTokenizer, AutoModelForCausalLM

# Load model and tokenizer

device = torch.device(”cuda” if torch.cuda.is_available() else “cpu”)

model_name = “gpt2”

model = AutoModelForCausalLM.from_pretrained(model_name).to(device)

model.eval()

tokenizer = AutoTokenizer.from_pretrained(model_name)

# Context

context = “The weather is”

# Encode the context

encoded_tokens = tokenizer.encode(context)

# Start with the initial encoded tokens

generated_tokens = encoded_tokens[:]

# Number of tokens to generate

max_gen_tokens = 5

# Top-p value

top_p = 0.9

for _ in range(max_gen_tokens):

# Convert current sequence to tensor

input_ids = torch.tensor([generated_tokens]).to(device)

# Forward pass through the model

with torch.no_grad():

outputs = model(input_ids)

logits = outputs.logits

# Get logits for the last position

last_token_logits = logits[:, -1, :].squeeze(0)

# Convert logits to probabilities

probs = torch.softmax(last_token_logits, dim=-1)

# Sort the probabilities in descending order

sorted_probs, sorted_indices = torch.sort(probs, descending=True)

# Compute cumulative probabilities

cumulative_probs = torch.cumsum(sorted_probs, dim=0)

# Identify tokens to keep (those within the nucleus)

nucleus_mask = cumulative_probs <= top_p

# Always include the first token that pushes cumulative_probs over top_p

nucleus_mask[torch.sum(nucleus_mask)] = True

# Filter down to nucleus tokens

nucleus_probs = sorted_probs[nucleus_mask]

nucleus_indices = sorted_indices[nucleus_mask]

# Renormalize nucleus probabilities so they sum to 1

nucleus_probs = nucleus_probs / torch.sum(nucleus_probs)

# Sample one token from the nucleus distribution

sampled_index = torch.multinomial(nucleus_probs, num_samples=1)

next_token_id = nucleus_indices[sampled_index].item()

# Append the sampled token

generated_tokens.append(next_token_id)

# Decode the complete sequence of tokens

decoded_text = tokenizer.decode(generated_tokens)

print(”Top-p (nucleus) sampled text:”)

print(decoded_text)To summarise, Greedy decoding and Beam search are deterministic (non-random) decoding methods.

Greedy search selects the highest probability token at each step.

Beam search keeps track of multiple text sequences (according to beam width

B) and scores them at each step to maintain the top-Bcandidates overall, finally selecting the best one.

Top-K and Top-P sampling are non-deterministic decoding methods. They introduce randomness and creativity in text generation.

Top-K sampling picks the next token from a fixed number (

k) of top probable tokens.Top-P sampling picks the next token from the smallest set of tokens (called Nucleus) whose cumulative probability is greater than a threshold.