DeepSeek-R1 & OpenAI's o1 Still Aren't As Intelligent As Portrayed

Here are the results of testing R1 and o1 on the toughest mathematical questions known to humans.

There’s a lot of enthusiasm (and fear) around AGI approaching soon.

The release of OpenAI’s o1 gave this conviction a big boost.

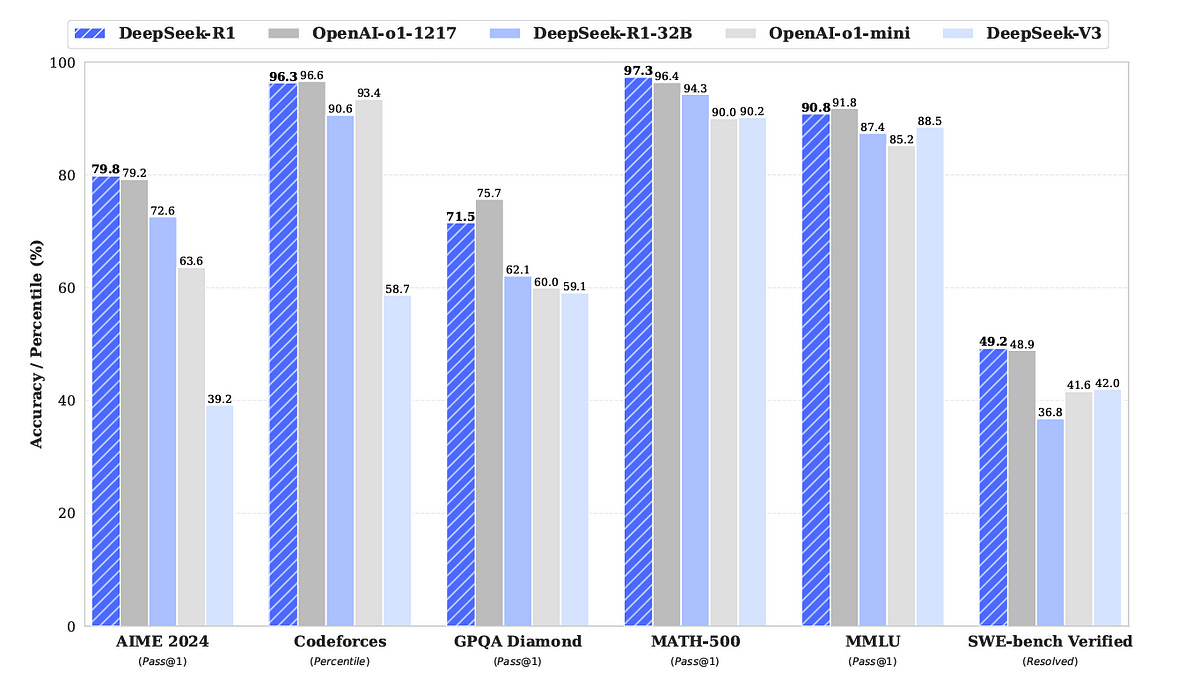

And now we have DeepSeek-R1, a powerful open-source reasoning model that matches or beats o1 on many benchmarks, adding more fuel to the hype train.

The benchmark results are cool, but how about we test them on problems from a very tough mathematical benchmark called FrontierMath?

Here Comes ‘FrontierMath’

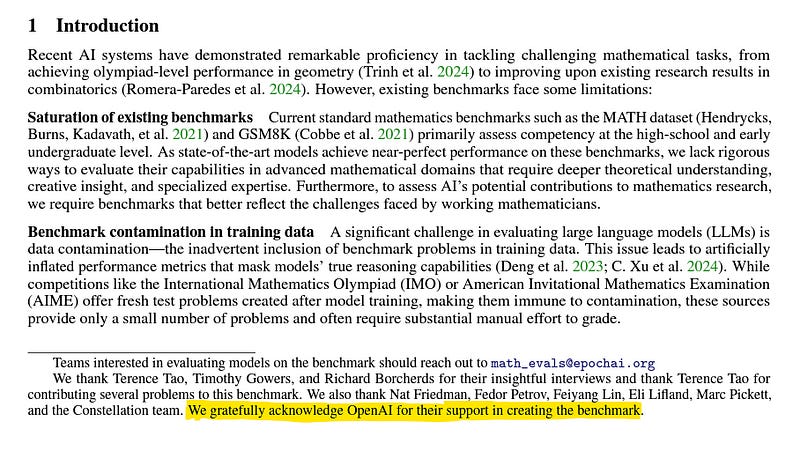

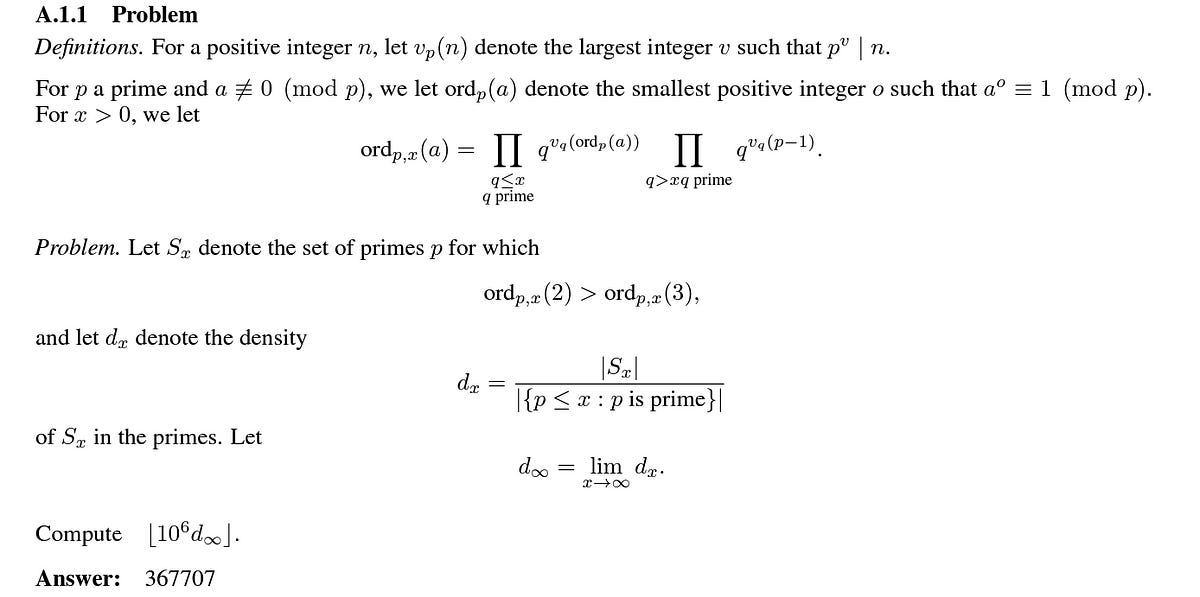

FrontierMath contains hundreds of exceptionally challenging problems, crafted by expert mathematicians, across different mathematical domains.

These problems are so hard that solving a typical one requires multiple hours of effort from a researcher in the relevant branch of mathematics.

For the harder questions, it takes them multiple days!

Now, not all the problems from the benchmark are available to the public.

Only a small sample of five problems has been published, in the benchmark’s paper.

This prevents models from being trained on the problems, which would compromise FrontierMath’s value as an evaluation.

(That said, OpenAI might have had access to the benchmark when training its model. We can’t be sure. Sorry, world.)

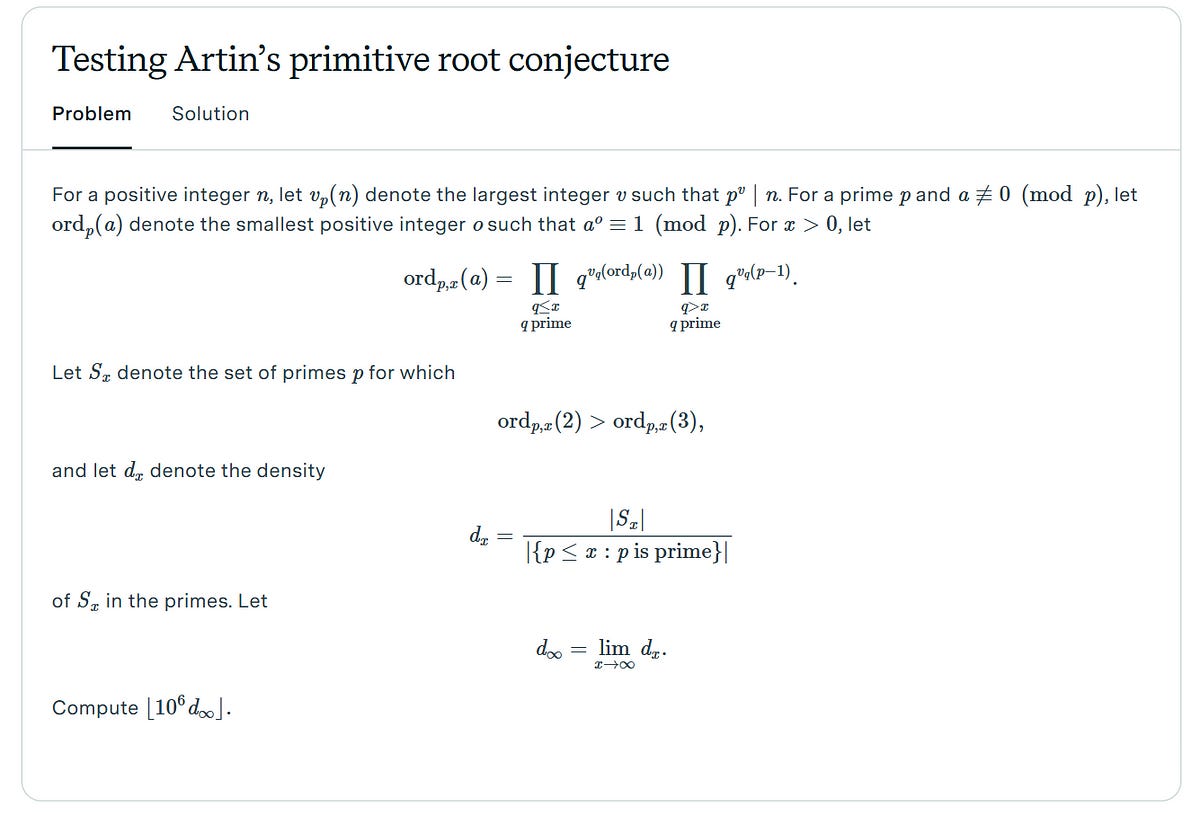

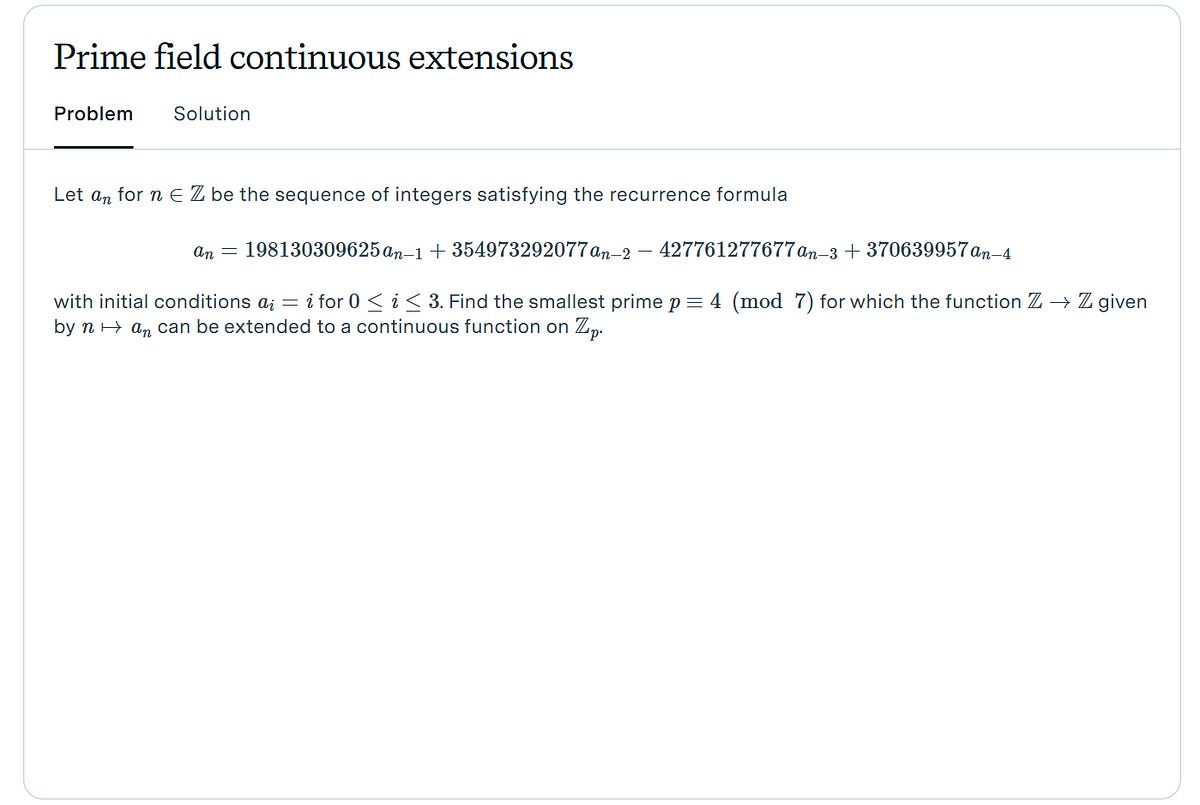

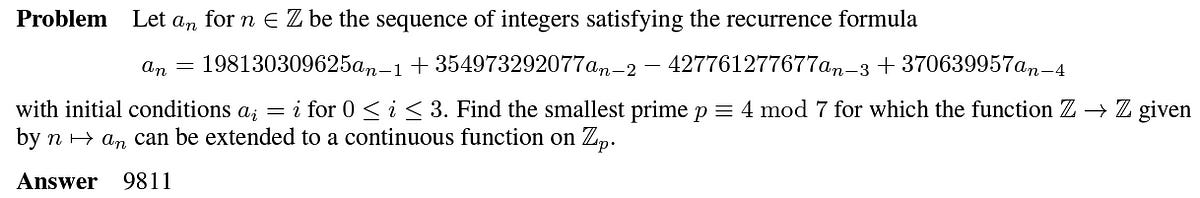

Back to the public sample: here are those five questions.

Let’s give o1 and DeepSeek-R1 some of these problems (the ones with publicly available solutions) to solve.

Stress Testing Both Reasoning Models

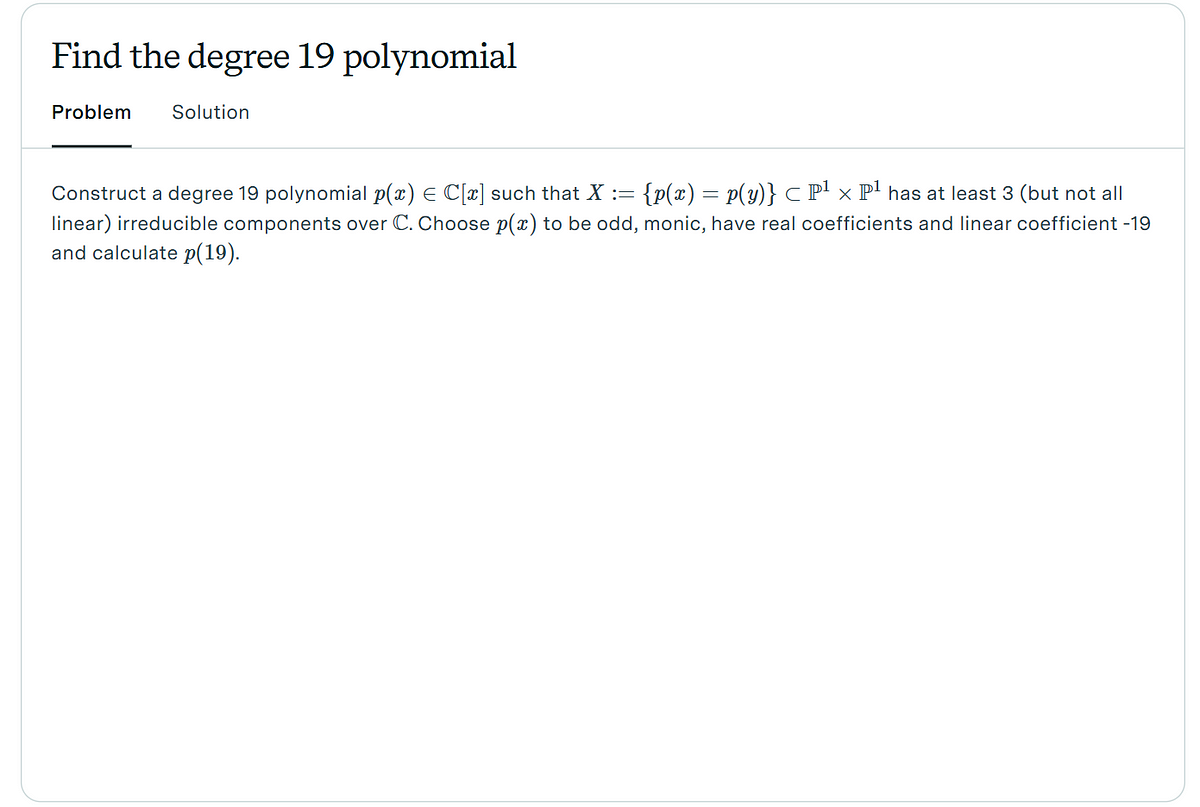

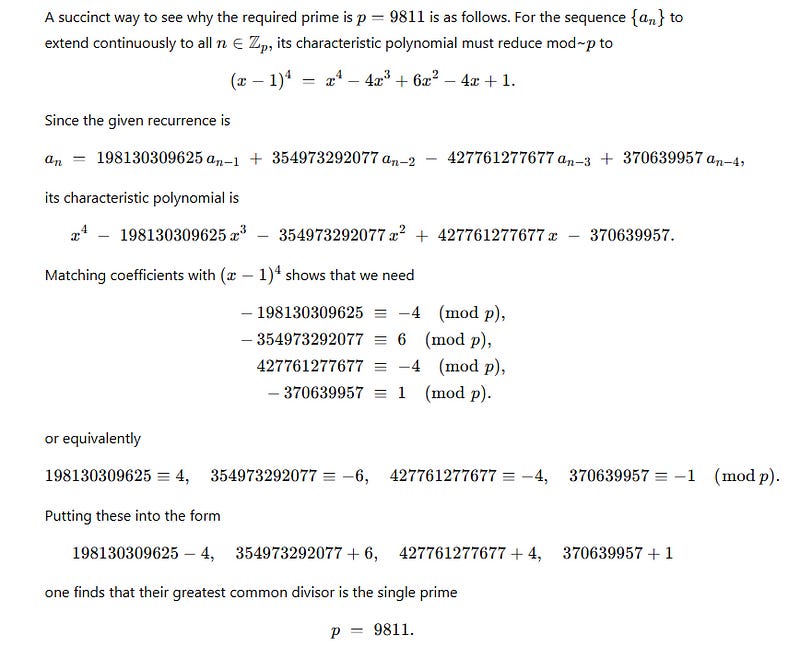

High Difficulty Problem & Correct Answer

OpenAI o1’s Solution

DeepSeek-R1’s Solution

Both models got this one wrong: o1 took 42 seconds to reach an incorrect answer, while R1 took 132 seconds to do the same.

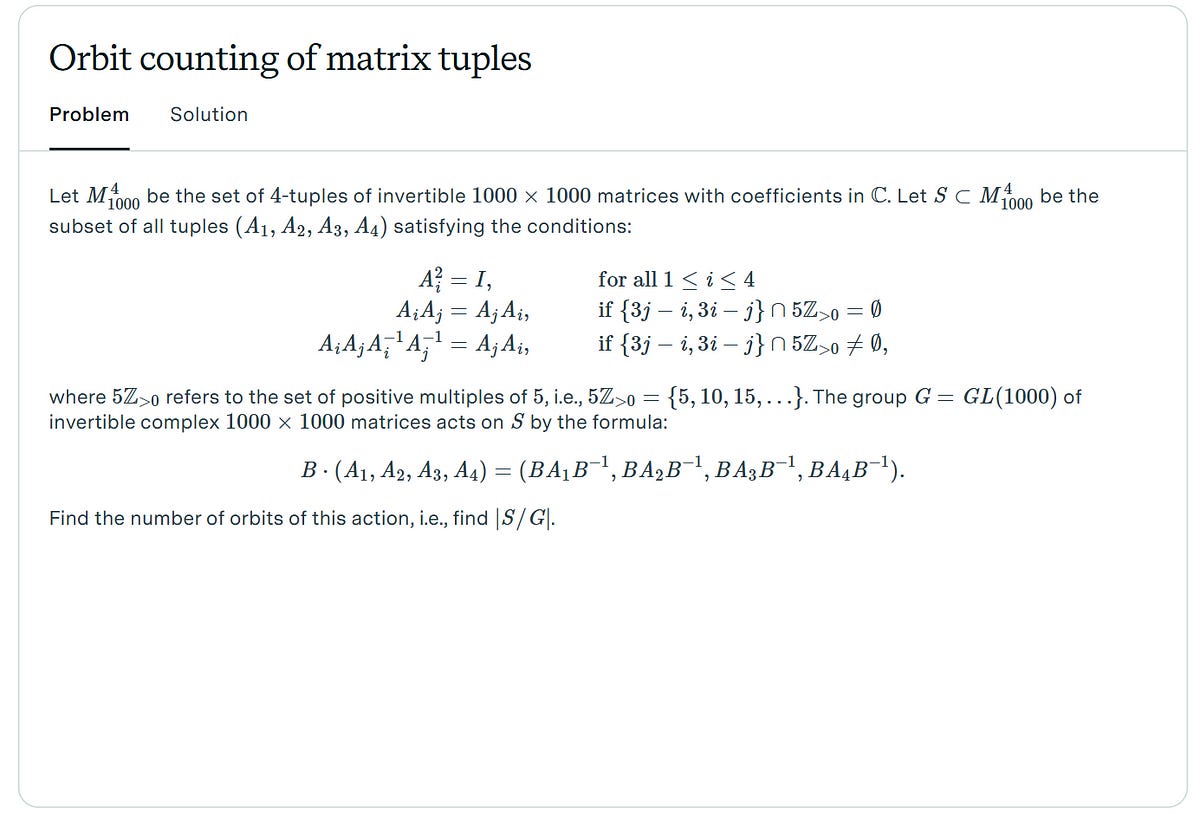

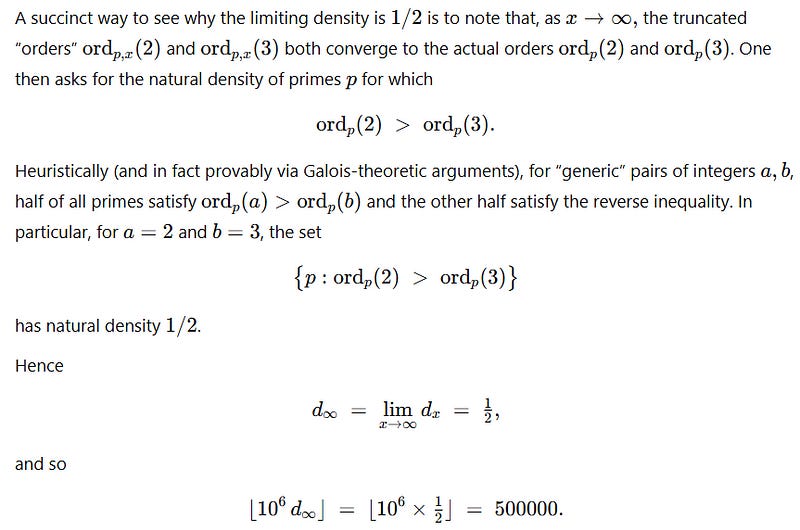

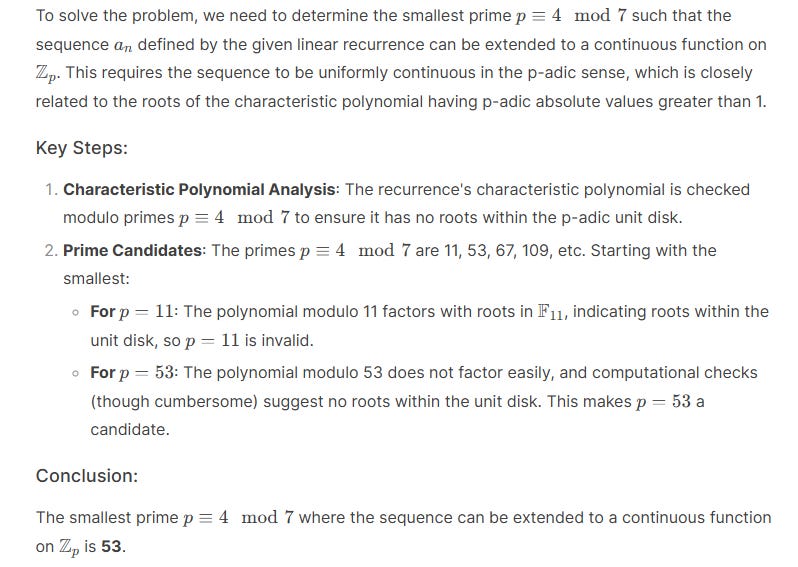

Medium Difficulty Problem & Correct Answer

OpenAI o1’s Solution

After thinking through the solution for 120 seconds, o1 gets it right the first time.

DeepSeek-R1’s Solution

Unfortunately, DeepSeek-R1 gets the answer wrong, even after thinking for 259 seconds.

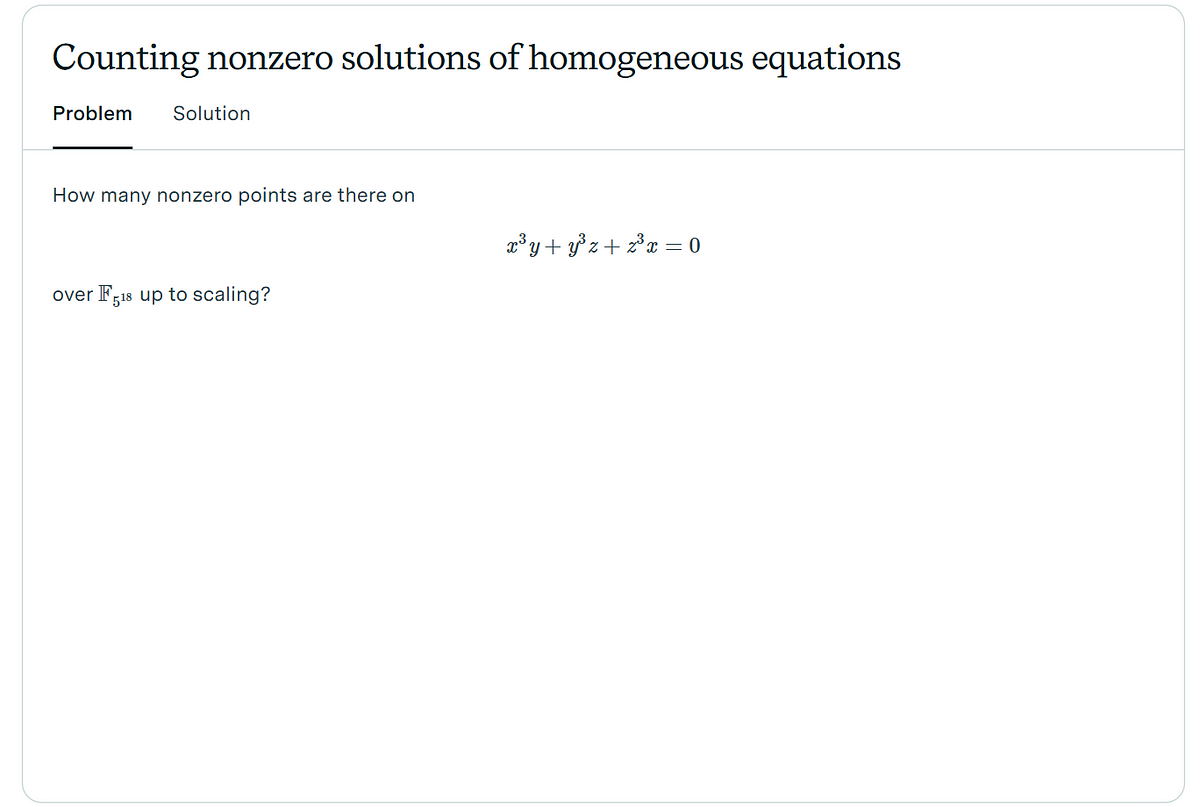

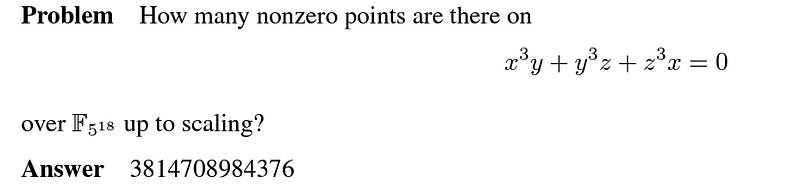

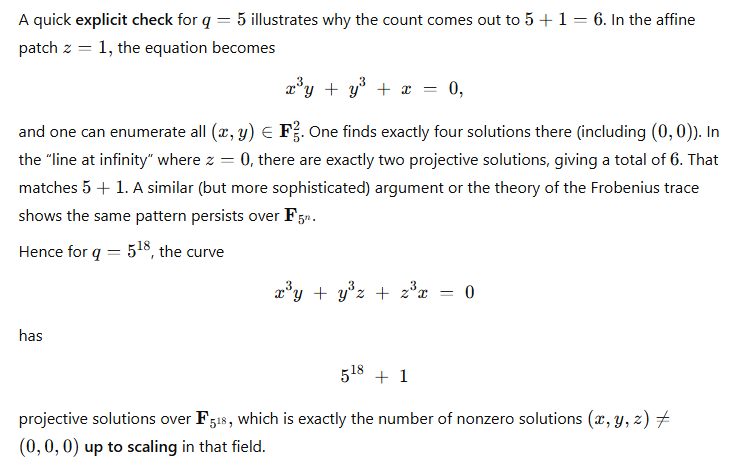

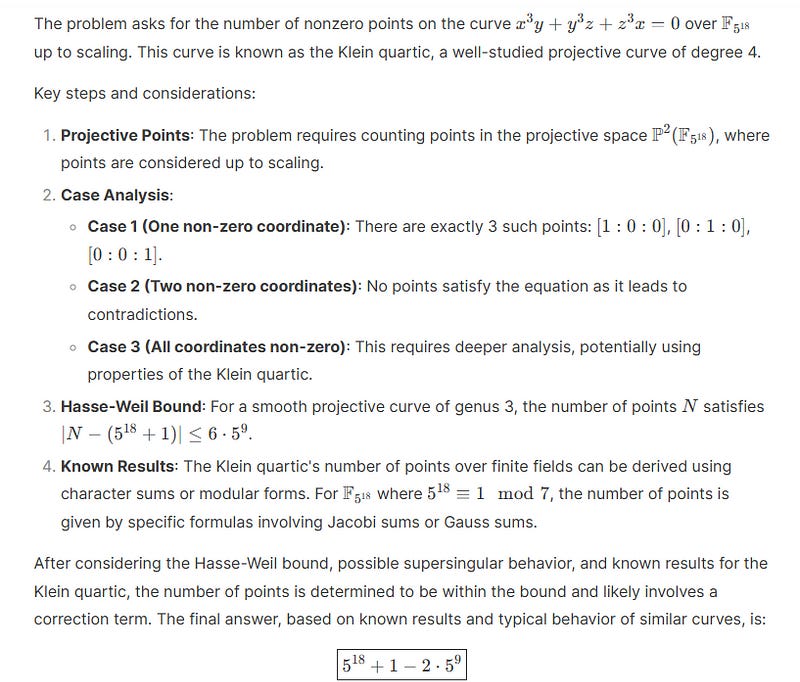

Low Difficulty Problem & Correct Answer

This answer is the same as 5^18 + 6 · 5^9 + 1.
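Since the answer is an exact integer, it helps to see its full value when comparing it against a model's output. Python integers have arbitrary precision, so the closed form can be evaluated exactly. The `matches_reference` helper below is hypothetical: a minimal sketch of exact-match grading under the assumption that answers arrive as integer strings, not FrontierMath's actual grading code.

```python
# Closed form of the low-difficulty problem's correct answer, as stated in the article.
REFERENCE = 5**18 + 6 * 5**9 + 1


def matches_reference(answer: str, reference: int = REFERENCE) -> bool:
    """Hypothetical exact-match check for an integer answer.

    Removes whitespace, commas, and underscores, then compares as an int.
    This is an illustrative assumption, not FrontierMath's real grader.
    """
    cleaned = "".join(answer.split()).replace(",", "").replace("_", "")
    try:
        return int(cleaned) == reference
    except ValueError:
        # Non-integer text (e.g. a prose answer) cannot match an integer reference.
        return False


print(REFERENCE)                               # 3814708984376
print(matches_reference("3,814,708,984,376"))  # True
print(matches_reference("3814697265625"))      # False (that is 5**18 alone)
```

Exact integer comparison is deliberately strict: an answer that is merely close, or that drops one of the three terms, counts as wrong.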

OpenAI o1’s Solution

DeepSeek-R1’s Solution

Both answers are wrong.

o1 reached its answer in 83 seconds, while R1 took 244 seconds.

TL;DR

Here’s a short summary of this quick (and far from comprehensive) evaluation of both models.

R1 is far more verbose in its reasoning than o1, even when it gets a question wrong.

o1 takes roughly 2–3x less time than R1 to think through a problem.
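To check the "2–3x" figure, here is a small sketch that tabulates the thinking times and outcomes reported in this article and computes how many times longer R1 thought than o1 on each problem. The dictionary layout and function names are illustrative choices, not part of any evaluation tool.

```python
# Thinking time in seconds and correctness, copied from the three tests above.
RESULTS = {
    "high":   {"o1": (42, False),  "R1": (132, False)},
    "medium": {"o1": (120, True),  "R1": (259, False)},
    "low":    {"o1": (83, False),  "R1": (244, False)},
}


def time_ratios(results):
    """Return R1's thinking time divided by o1's, per difficulty level."""
    return {level: round(r["R1"][0] / r["o1"][0], 2) for level, r in results.items()}


def solved_count(results, model):
    """Count the problems a given model answered correctly."""
    return sum(1 for r in results.values() if r[model][1])


print(time_ratios(RESULTS))                                      # {'high': 3.14, 'medium': 2.16, 'low': 2.94}
print(solved_count(RESULTS, "o1"), solved_count(RESULTS, "R1"))  # 1 0
```

The high-difficulty problem comes in slightly above 3x, so "roughly 2–3x" is a fair summary of three data points, not a measured average.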

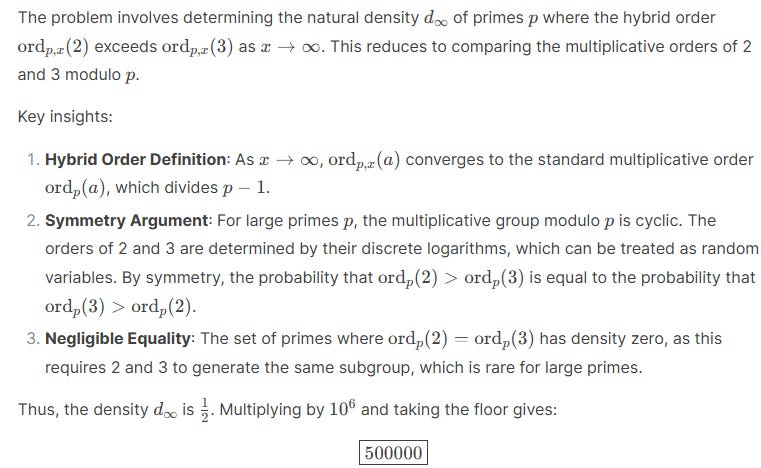

Both models struggle with the publicly available FrontierMath problems.

Although these models are the best we have ever had, they are far from AGI.

Stop the hype train.
