You Don’t Need Normalization In Transformers Anymore

A deep dive into the internals of Layer Normalization, and how a simple function called Dynamic Tanh (DyT) can replace them entirely in the Transformer architecture without any loss in performance.

Normalization layers are everywhere.

They are considered essential and irreplaceable, and all neural network architectures, including Transformers, use them as the default.

A group of researchers from Meta has just published new research that challenges this norm.

They introduce a simple element-wise operation called Dynamic Tanh (DyT), which can easily and entirely replace normalization layers in Transformers.

Experiments show that such replacements result in an architecture that matches (even exceeds) the performance of conventional Transformers with normalization, without requiring any hyperparameter tuning.

Similar results are observed in all kinds of experiments, ranging from recognition to generation, supervised to self-supervised learning, and computer vision to language models.

Here is a story where we take a deep dive into how Normalization works internally and how its function can be replicated and replaced by using the simple Dynamic Tanh (DyT) operation in various neural network architectures.

Let’s begin!

My latest book, called “LLMs In 100 Images”, is now out!

It is a collection of 100 easy-to-follow visuals that describe the most important concepts you need to master LLMs today.

Grab your copy today at a special early bird discount using this link.

To Start With, What Really Is Normalization?

Neural networks are notoriously hard to train.

During training, the distribution of inputs to each layer in a neural network can shift as the parameters in earlier layers change. This is called Internal covariate shift.

To fix this unwanted distribution shift, a technique called Normalization is used, which adjusts and scales the outputs (activations) of neurons in the neural network.

Take an input tensor x with shape (B,T,C) where:

Bis the batch size/ number of samplesTis the number of tokens in a sampleCis the embedding dimension

For example, a batch of 32 sentences (B), each tokenized into 128 tokens (T), and each token represented as a 768-dimensional vector (C), has a shape of (32, 128, 768).

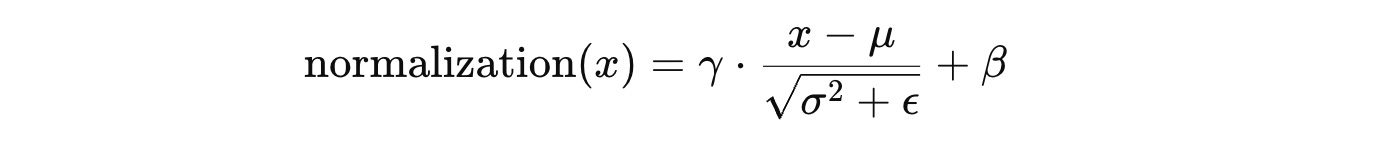

Normalization is applied to this tensor using the formula:

where:

γandβare learnable parameters of shape(C, )for scaling and shifting the output (affine transformation)ϵis a small constant added to the denominator to prevent division by zero (without it, it could lead to exploding gradients)μandσ²are the mean and variance of the input tensor. These are computed differently based on the method being used, as discussed next.

It was in 2015 when researchers at Google published a research paper on Batch Normalization.

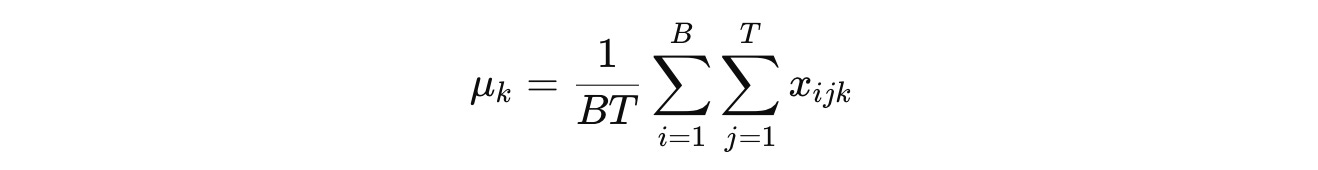

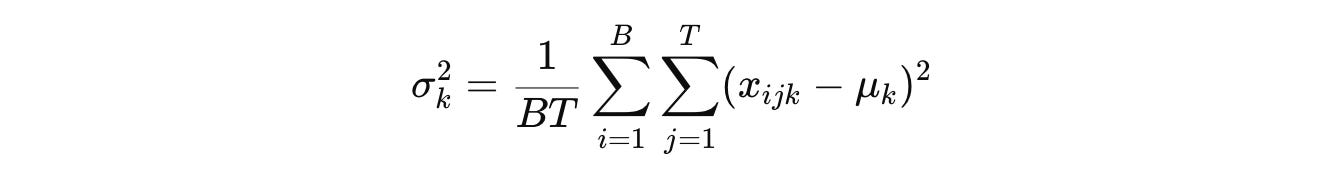

Batch Normalization (BatchNorm or simply BN), which was primarily intended to be used in CNNs, involves computing the mean μ and variance σ² for each channel C indexed by k across both the batch (B) and token dimensions (T) as:

(Note that we use the term “Channel” to refer to C when we talk about CNNs/ Vision models. It is also called “Feature dimension” in a general machine learning context and “Embedding dimension” in the context of language or sequence models.)

BatchNorm soon began to be applied in a variety of vision models and became widely successful (the paper has been awarded the ICML Test Of Time Award 2025).

Following it came many other types of normalization layers, namely:

Instance Normalization (in 2016)

Group Normalization (in 2018)

Layer Normalization (in 2016) and RMS Layer Normalization (in 2019)

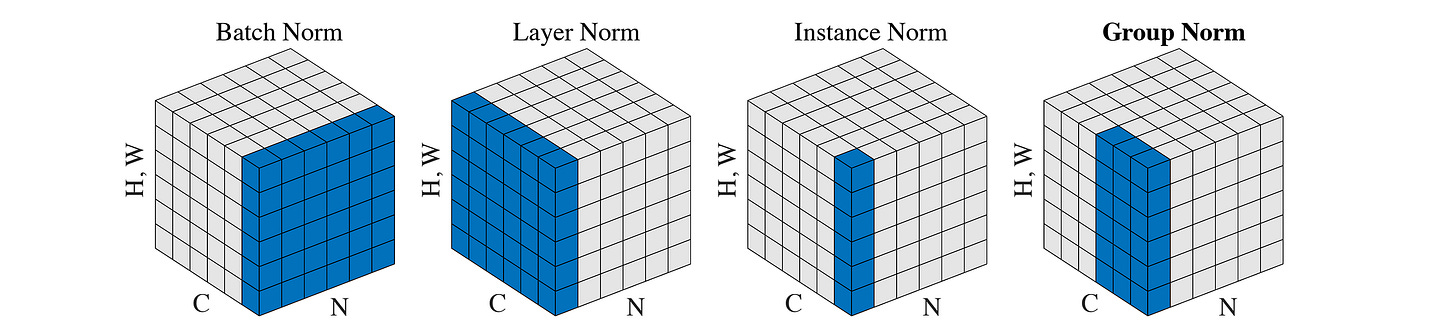

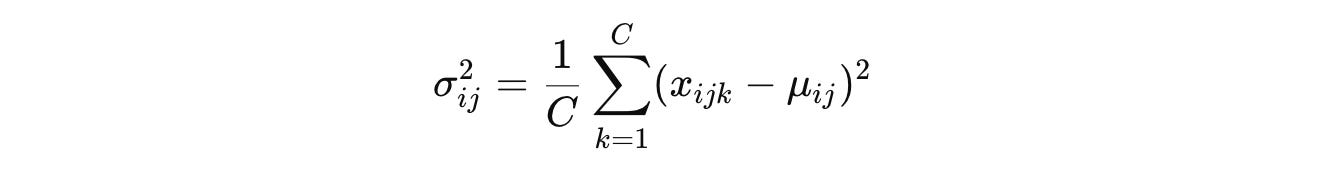

As we previously discussed, the difference between each lies in how the mean and variance are calculated over the input.

While BatchNorm calculates them across the batch (B) and token (T) dimensions for each channel (C), they are calculated in:

InstanceNorm: across tokens (

T), for each sample (B) and each channel (C)GroupNorm: across groups of channels (

C) and tokens (T), for each sample (B)LayerNorm: across all channels (

C), for each sample (B) and each token (T)

In the context of CNNs, LayerNorm computes these statistics across both channels (C) and spatial dimensions (H x W), for each sample (represented by B or N in the image), as shown below.

While GroupNorm and InstanceNorm are used to improve object detection and image stylization, LayerNorm (and its variant RMSNorm) has become the de facto layer in Transformer-based architectures.

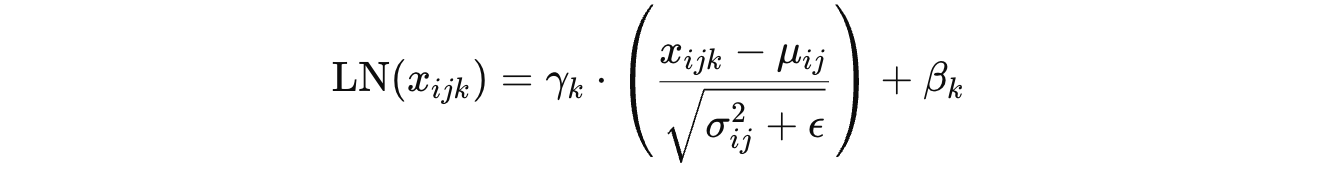

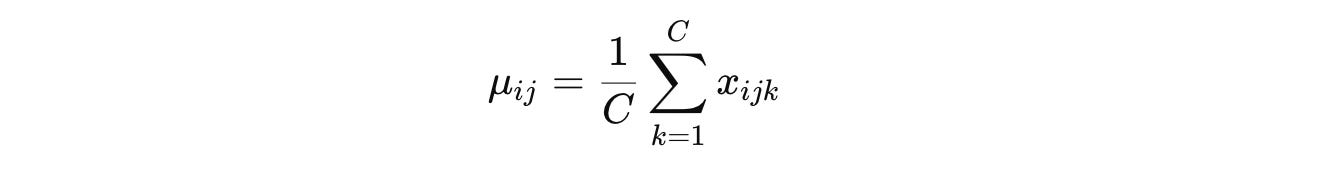

In LayerNorm, the mean (per sample and token) is calculated as:

And the variance is calculated as:

The general normalization formula that we previously discussed:

becomes the following for LayerNorm (LN):

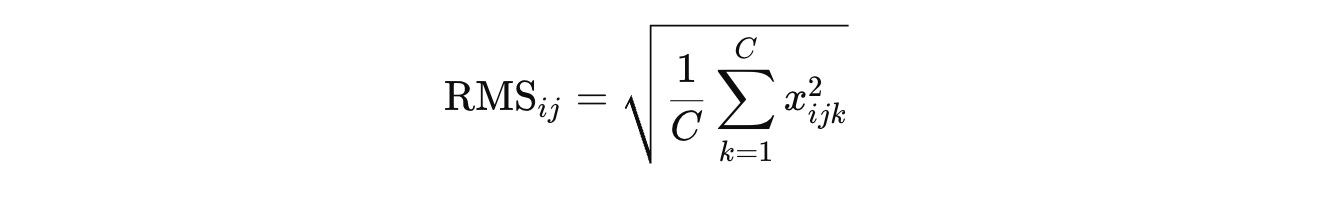

Building on this, a 2019 research paper introduced Root Mean Square Layer Normalization (RMSNorm), a computationally simpler and thus more efficient alternative to LayerNorm.

It removes the step of subtracting the mean from the input tensor (Mean centering) and normalizes the input using the RMS or Root Mean Square value as below:

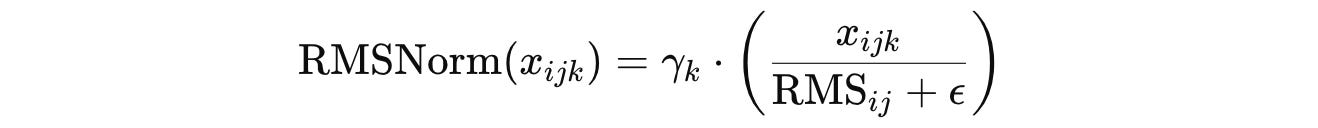

This makes the RMSNorm formula (note the emission of the affine shift β term):

RMSNorm is used today in LLaMA, Mistral, Qwen, DeepSeek, and OpenELM series of LLMs. GPT-2 on the other hand, uses LayerNorm.

It is seen that normalization layers help optimize training and enable neural networks to achieve faster convergence with better generalization.

Additionally, there has been a lot of work that replaces attention or convolution layers in deep neural network architectures (such as MLP-Mixer and Mamba), but replacing normalization layers isn’t talked about much.

A natural question arises at this point: What do Normalization layers do internally that leads to such impressive results?

Let’s talk about this next.

What Do Normalization Layers Do Internally?

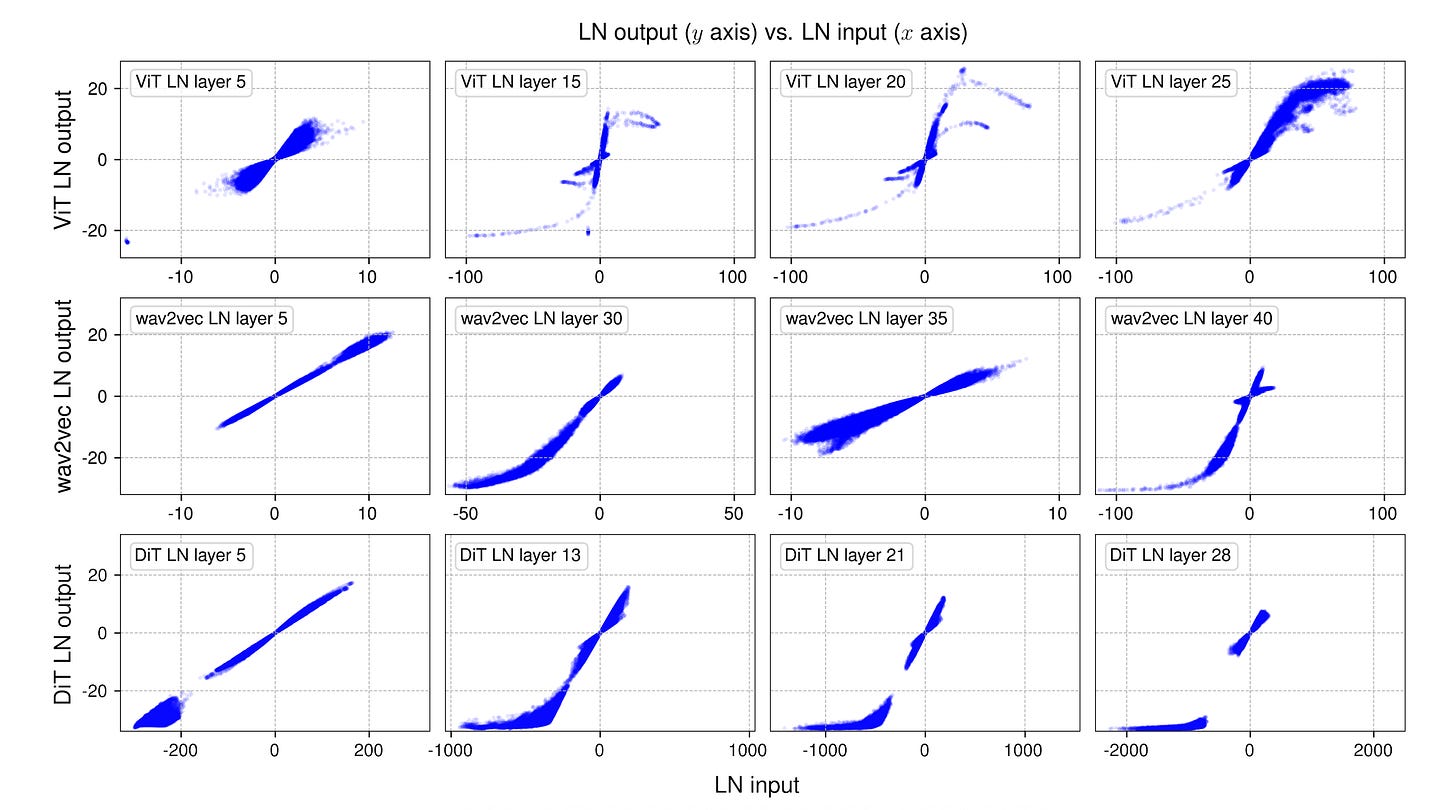

To answer this question, researchers take three different Transformer models, namely:

a Vision Transformer model (ViT-B) trained on the ImageNet-1K dataset

a wav2vec 2.0 Large Transformer model trained on the Librispeech audio dataset

a Diffusion Transformer (DiT-XL) trained on the ImageNet-1K dataset

All of the above models have LayerNorm applied in every Transformer block and before the final linear projection.

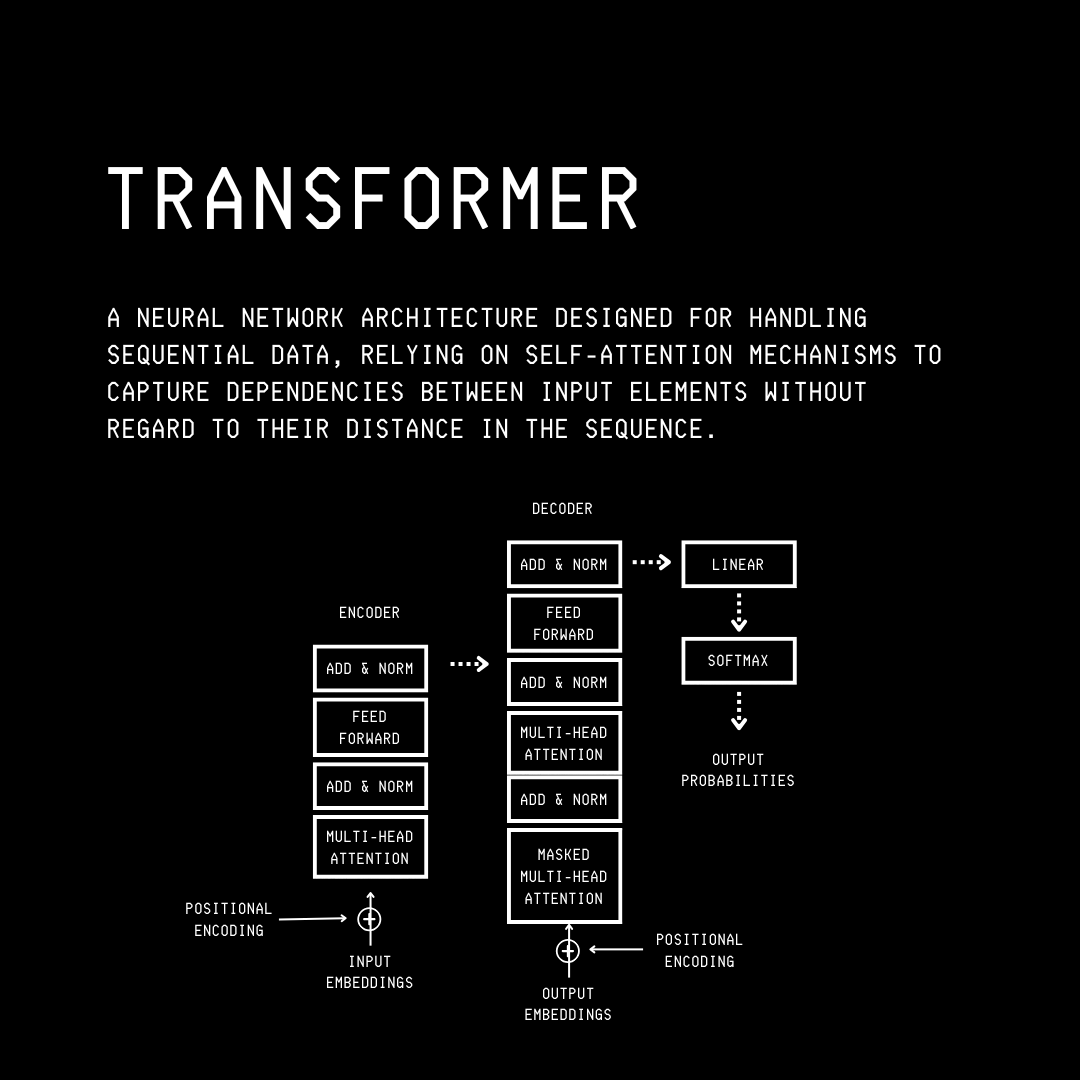

The following visualisation shows the Transformer architecture as a refresher.

A mini-batch of samples is used during the forward pass through these models.

Next, the tensor inputs and outputs (measured before the scaling and shifting operations) from normalization layers at varying depths in the network are measured and plotted to see how the normalization layer affects them.

The following is how these plots look.