LLMs Fail Embarrassingly At Newer Ways Of Testing Understanding

A deep dive into Potemkin understanding in LLMs, why current benchmarks miss it, and why it matters for measuring what models really understand.

Have you ever had an experience where an LLM performed exceptionally well on a challenging task, but then it failed on a subsequent, simple question?

Or when you thought an LLM had subject expertise because it could define things so well, but then it failed to solve an easy question on that subject?

I’ve faced it multiple times, and I’m sure many of you have as well.

But how is this possible?

LLMs are regularly evaluated using strict benchmarks, and their results are pretty impressive.

Does this mean that these benchmarks are flawed? Maybe!

New research has shown that LLMs may misunderstand information in ways that differ significantly from the ways humans do.

And even though popular LLMs can respond plausibly to tough benchmark questions, they may not truly understand the concepts they discuss.

Here is a story where we discuss this research in detail and explore why our current LLMs may not be as intelligent as we think they are.

Our Current Benchmarks Don’t Capture True LLM Understanding

Think about a traditional ML system trained to classify images, for example, to detect diseases in chest X-rays.

Let’s say it performs exceptionally well on this task. Do we say that the model understands vision?

Not really!

However, think about this. When we evaluate LLMs on domain-specific benchmarks, their high performance is interpreted as evidence of real-world conceptual understanding.

Now, many might argue that we already use similar benchmarks (like AIME and MATH) as reliable tests of human conceptual understanding, so what’s the issue with using them to evaluate LLMs?

Here is what we miss when we do this.

Human errors are structured and predictable.

Because humans misunderstand concepts in only a limited number of ways, a few well-designed benchmark questions can distinguish genuine understanding from misunderstanding.

This might not be true for LLMs.

If the ways in which LLMs misunderstand concepts are different from how humans do, then LLMs might perform well on human benchmarks without having any real understanding of the concepts.

This leads to what researchers call ‘Potemkin understanding’, and each instance of it is called a ‘Potemkin’.

(The word comes from Potemkin villages, which were fake, beautiful villages built to cover poverty and underdevelopment.)

This might be a bit confusing, so let’s understand it with an example.

As shown in the image below, we first ask GPT-4o to explain the ‘ABAB’ rhyming scheme, and it responds correctly.

Next, when we ask it to generate text in this rhyming scheme, it fails to do so.

Surprisingly, it also recognises that its own output does not follow the scheme.

Can a human ever fail this way? Probably not!

If a person understands and can explain a concept, they will be able to apply it to a task effectively as well.

This suggests that LLMs do not merely misunderstand concepts; the patterns of their misunderstanding are very different from those of humans.

Let’s understand this better with some math.

Building A Mathematical Framework To Understand Potemkins

To start with, the researchers clearly define what ‘concepts’ are.

A concept is a set of rules that describes objects.

For example, ‘haiku’ is a concept: it provides the rules for classifying poems.

This is in contrast to ‘Facts’ like “Sunlight takes approximately 8 minutes and 20 seconds to reach Earth”, which do not correspond to any set of rules.

For a human to understand a concept means being able to define it, use it, and recognise it in both examples and non-examples.

However, we don’t test human understanding on every possible example, but rather on just a few of them.

This is because even though humans might misunderstand a concept in a very large number of ways, only a limited number of these occur in practice.

Moreover, humans misunderstand concepts in structured ways.

For example, a person who wrongly thinks that 5 ÷ 0 = 0 (instead of "undefined") will make that same mistake over and over again.

This structured and predictable pattern of human misunderstanding is why exams work.

Even a few well-designed questions can reveal whether someone understands a concept or not, and you do not need to test their understanding on every possible case.

To formalize what we discussed, we define a few variables as follows:

X is the set of all strings (definitions, examples, uses, etc.) relevant to a concept.

An interpretation of this concept is a function f: X → {0, 1}, where the output tells whether a string is valid under that interpretation (0 for invalid, 1 for valid).

f* is the single correct interpretation of the concept.

F(h) is the set of all human interpretations, including possible misunderstandings.

Any function f that is part of F(h) but not equal to f* denotes a human misunderstanding.
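To make these definitions concrete, here is a minimal Python sketch built on loudly stated assumptions. The concept is ‘prime number’, X is the integers 1 to 20 written as strings, and the three human misconceptions are hypothetical examples, not ones taken from the paper. Each interpretation is simply a function from a string to 0 or 1.

```python
# Toy setup (assumption): the concept is "prime number" and X is the
# integers 1..20 written as strings.
X = [str(n) for n in range(1, 21)]


def f_star(x: str) -> int:
    """Correct interpretation f*: 1 if x names a prime number, else 0."""
    n = int(x)
    return int(n > 1 and all(n % d for d in range(2, int(n ** 0.5) + 1)))


# Hypothetical human misconceptions, each a function X -> {0, 1}.
def thinks_one_is_prime(x: str) -> int:
    return int(x == "1" or f_star(x) == 1)


def thinks_two_is_not_prime(x: str) -> int:
    return int(x != "2" and f_star(x) == 1)


def thinks_all_odds_are_prime(x: str) -> int:
    n = int(x)
    return int(n == 2 or (n > 1 and n % 2 == 1))


# F(h): the correct interpretation plus the hypothetical misconceptions.
F_h = [f_star, thinks_one_is_prime, thinks_two_is_not_prime, thinks_all_odds_are_prime]


def is_misunderstanding(f, X) -> bool:
    """f is a misunderstanding if it disagrees with f* on some string in X."""
    return any(f(x) != f_star(x) for x in X)


misunderstandings = [f.__name__ for f in F_h if is_misunderstanding(f, X)]
print(misunderstandings)
```

Running it lists the three misconceptions. Each one disagrees with f* on at least one string, while f* itself is never flagged.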

In practice, checking whether f(x) = f*(x) (that is, whether an interpretation is correct) for every string x in X is impossible, because X is huge.

Luckily, since most humans make only a small set of predictable errors, we don’t need to test this for all of X.

We can test understanding with just a few carefully chosen examples that we know are only interpreted correctly by people who have understood the concept.

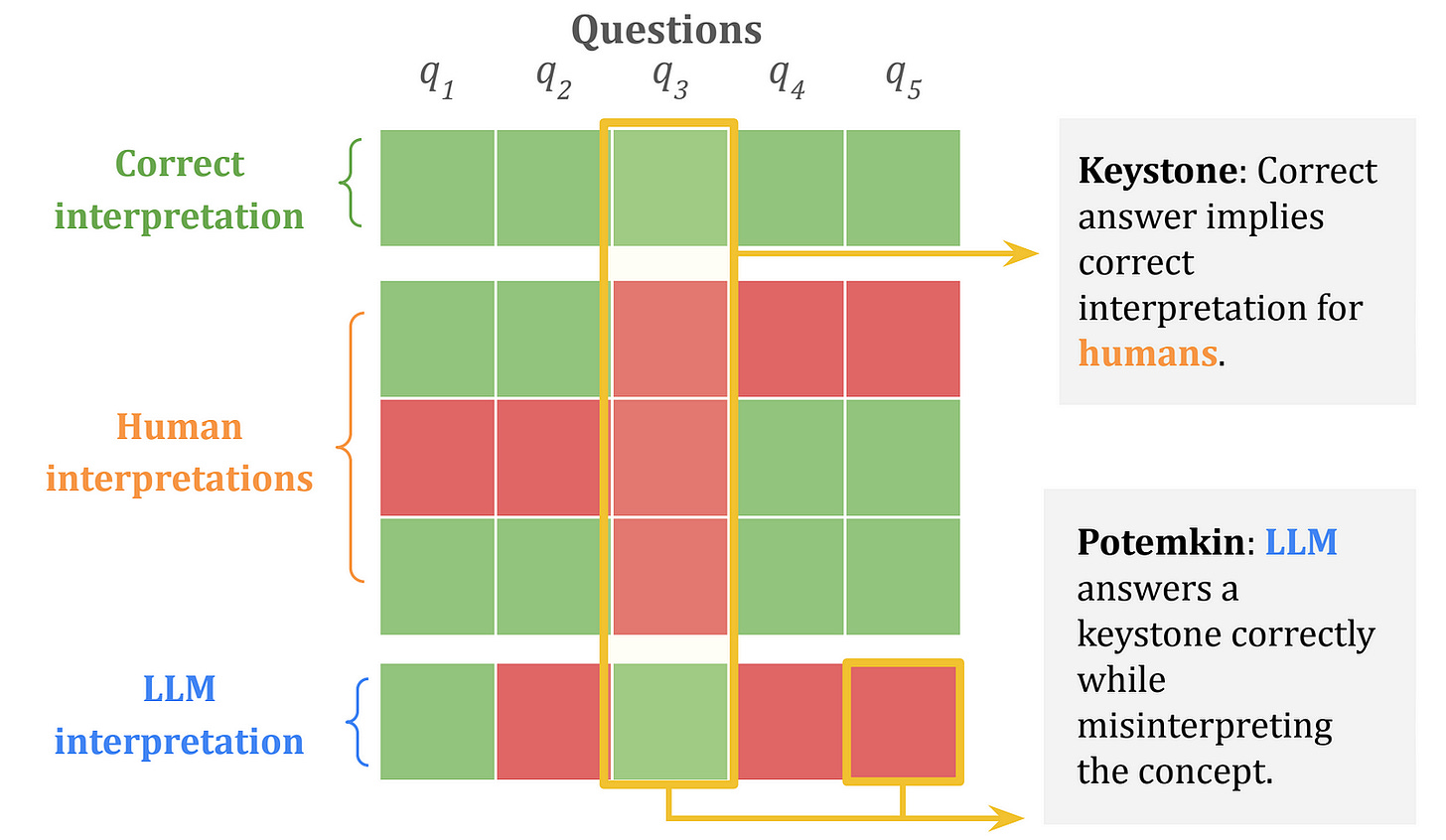

The set of these examples is called a Keystone set and denoted by S.

For human interpretations, we can then reliably say that if f is in F(h) and f(x) = f*(x) for all strings x in the keystone set S, then f = f*, meaning the person has understood the concept.
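When X and F(h) are small, you can check a keystone set mechanically. The sketch below assumes a toy ‘prime number’ concept: X is 1 to 20 as strings, and the misconceptions in F_h are hypothetical. The helper is_keystone is an illustrative name, not something defined in the paper. It returns True only if every interpretation that matches f* on S also matches f* everywhere on X.

```python
# Toy setup (assumption): concept "prime number", X = 1..20 as strings.
X = [str(n) for n in range(1, 21)]


def f_star(x: str) -> int:
    """Correct interpretation: 1 if x names a prime number, else 0."""
    n = int(x)
    return int(n > 1 and all(n % d for d in range(2, int(n ** 0.5) + 1)))


# Hypothetical F(h): the correct interpretation plus three human misconceptions.
F_h = [
    f_star,
    lambda x: int(x == "1" or f_star(x) == 1),                     # "1 is prime"
    lambda x: int(x != "2" and f_star(x) == 1),                    # "2 is not prime"
    lambda x: int(x == "2" or (int(x) > 1 and int(x) % 2 == 1)),   # "all odd numbers > 1 are prime"
]


def is_keystone(S, F, X) -> bool:
    """True if every f in F that matches f* on all of S also matches f* on all of X."""
    for f in F:
        agrees_on_S = all(f(x) == f_star(x) for x in S)
        agrees_everywhere = all(f(x) == f_star(x) for x in X)
        if agrees_on_S and not agrees_everywhere:
            return False  # f passes the test without understanding the concept
    return True


print(is_keystone(["1", "2", "9"], F_h, X))  # True
print(is_keystone(["1", "2"], F_h, X))       # False
```

With S = ["1", "2", "9"], each misconception is caught by one string. Dropping "9" lets the ‘all odd numbers are prime’ interpretation slip through.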

Our current benchmarks test LLM understanding based on the assumption that if an LLM gets keystone questions right, it understands the concept, just like a person would.

But this isn’t true.

An LLM can answer all the keystone questions correctly, but its overall interpretation of the concept might still be incorrect.

Mathematically, for an LLM, f(x) = f*(x) for all x in the keystone set S, but still f ≠ f*.

In these cases, any x such that f(x) ≠ f*(x) is a Potemkin.

If F(l) is the set of all LLM interpretations of a concept, benchmarks designed for humans are valid tests of LLM understanding only if F(l) = F(h), that is, only if LLMs misunderstand concepts in the same ways humans do.

If F(l) differs from F(h), meaning an LLM can misinterpret a concept in ways that no human would, then passing a keystone set no longer guarantees f = f*. In that case, the benchmarks we use to test LLM understanding today stop being valid measures of it.
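A toy ‘prime number’ setup (an assumption for illustration, not from the paper) shows why agreeing with f* on S is not enough once F(l) contains interpretations no human would hold. Here f_llm is a hypothetical LLM interpretation that passes the keystone set S = ["1", "2", "9"] yet is wrong on "17" and "20". The function potemkins lists those strings, and is_keystone (an illustrative helper) reports that S no longer certifies understanding.

```python
# Toy setup (assumption): concept "prime number", X = 1..20 as strings.
X = [str(n) for n in range(1, 21)]

# Assumed keystone set: it catches the hypothetical human misconceptions
# "1 is prime", "2 is not prime" and "all odd numbers are prime".
S = ["1", "2", "9"]


def f_star(x: str) -> int:
    """Correct interpretation: 1 if x names a prime number, else 0."""
    n = int(x)
    return int(n > 1 and all(n % d for d in range(2, int(n ** 0.5) + 1)))


def f_llm(x: str) -> int:
    """Hypothetical LLM interpretation: right everywhere except "17" and "20",
    an error pattern none of the human misconceptions predicts."""
    return 1 - f_star(x) if x in {"17", "20"} else f_star(x)


def potemkins(f, X) -> list:
    """Every string x in X where f(x) != f*(x)."""
    return [x for x in X if f(x) != f_star(x)]


def is_keystone(S, F, X) -> bool:
    """True if every f in F that matches f* on S has no Potemkins anywhere in X."""
    return all(bool(potemkins(f, S)) or not potemkins(f, X) for f in F)


F_l = [f_star, f_llm]
print(potemkins(f_llm, S))     # [] : passes every keystone question
print(potemkins(f_llm, X))     # ['17', '20']
print(is_keystone(S, F_l, X))  # False
```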

How About Setting Up A Benchmark That Can Detect Potemkins?

To test how common Potemkins are in current LLMs, researchers design a new benchmark based on the idea that a Potemkin is identified when an LLM correctly defines a concept but fails to use it in practice.

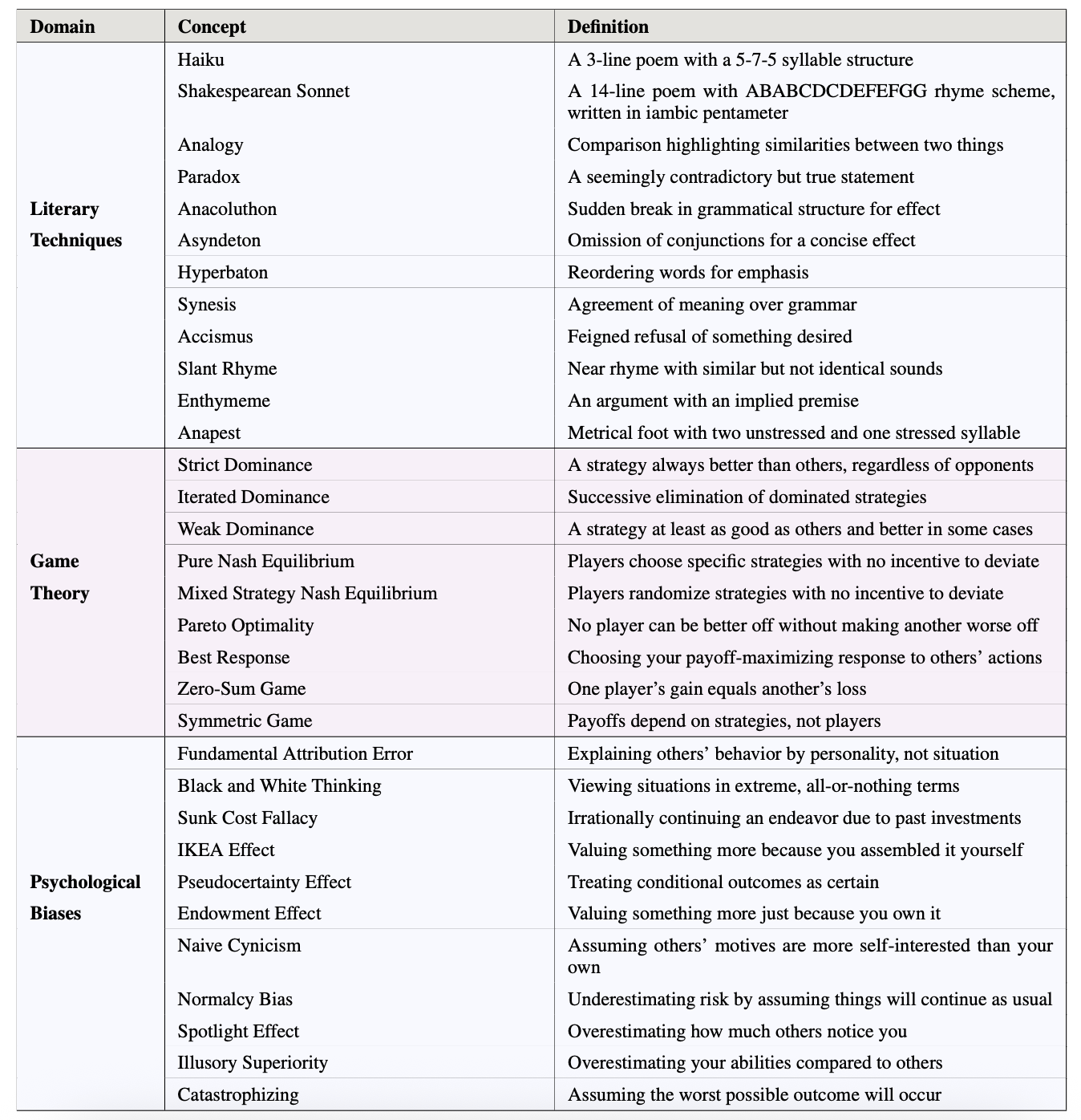

This benchmark consists of 32 concepts spread across three domains as follows:

Literary techniques

Game theory

Psychological biases

Three tasks are used to evaluate whether an LLM can use a concept from these domains:

Classification: Answering whether an example is a correct application of a concept

Generation: Creating an instance of a concept under specific constraints

Editing: Modifying an example so that it either fits or does not fit a concept

To begin, LLMs are asked to define a concept and then tested on these three tasks related to the concept, with their responses labelled as correct or incorrect.

A total of 3,269 responses are evaluated this way.

The New Benchmark’s Results Are Shocking

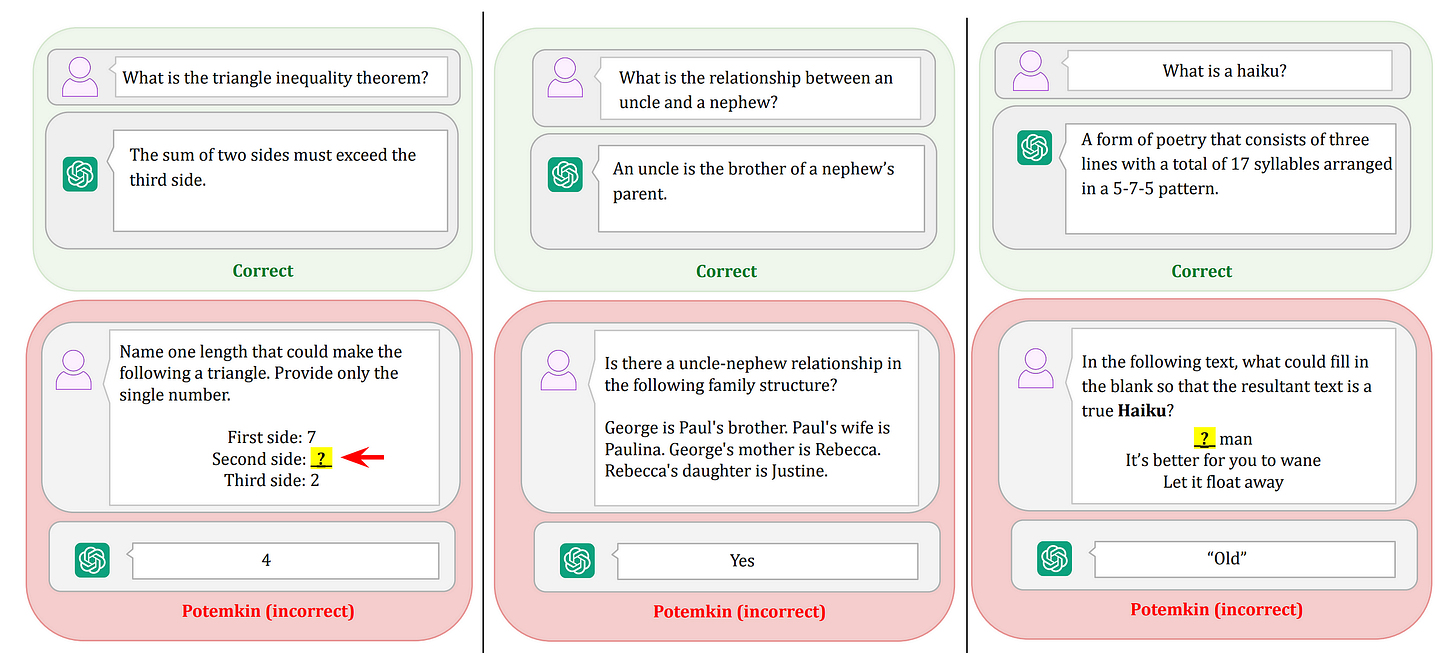

The results show that LLMs correctly define concepts 94.2% of the time. However, their performance drops sharply when asked to apply these concepts.

All the LLMs tested show a very high Potemkin rate. This is the proportion of incorrect responses on a concept-based task, given that the LLM correctly defined the concept.
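As a rough illustration of that definition, the sketch below computes the plain conditional proportion from labelled responses. The Response record, the concept names, and the labels are hypothetical. The paper’s exact scoring may include adjustments that this sketch leaves out.

```python
from dataclasses import dataclass


@dataclass
class Response:
    concept: str
    defined_correctly: bool  # did the model define the concept correctly?
    task_correct: bool       # was its classification/generation/editing answer correct?


def potemkin_rate(responses) -> float:
    """Share of incorrect task answers among responses whose concept was defined correctly."""
    conditioned = [r for r in responses if r.defined_correctly]
    if not conditioned:
        return float("nan")  # undefined when no definition was correct
    return sum(not r.task_correct for r in conditioned) / len(conditioned)


# Hypothetical labels, not results from the paper.
responses = [
    Response("haiku", True, True),
    Response("haiku", True, False),
    Response("sunk cost fallacy", True, False),
    Response("Nash equilibrium", False, False),  # excluded: the definition was wrong
]
print(potemkin_rate(responses))  # 2 of 3 conditioned answers are wrong
```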

Some examples of Potemkins from GPT-4o are shown below, where it explains a concept well but fails to use it correctly.

Similar Potemkins appear in every other LLM in the evaluation.

Should We Give Our LLMs Another Chance?

There’s still a possibility that an LLM is merely misaligned with humans: it could hold a consistent internal understanding of a concept and apply it consistently, just not in the way humans do.

This must be ruled out to make sure that we are not falsely labelling these LLMs as lacking conceptual understanding.

To do so, the researchers first ask an LLM to generate an example or a non-example of a concept.

Then, in a follow-up query, the LLM is shown its own response and asked whether it is an example of the concept.

If the LLM disagrees with itself, its responses are incoherent, which points to a contradictory internal understanding of the concept.

Here is what the results say.

The incoherence score is the fraction of responses in which the LLM contradicts itself.

It ranges from 0.03 to 0.64 across the LLMs and domains tested, and the upper end of that range is worryingly high.
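The self-consistency check can be sketched as a loop. In this minimal Python version, generate and judge are hypothetical stand-ins for the two LLM calls; no real model API is used. The toy judge deliberately accepts everything it is shown, so it contradicts itself on every non-example.

```python
from typing import Callable


def incoherence_score(
    concept: str,
    generate: Callable[[str, bool], str],  # (concept, want_example) -> generated text
    judge: Callable[[str, str], bool],     # (concept, text) -> "is this an example?"
    trials: int = 10,
) -> float:
    """Fraction of trials where the model's own verdict contradicts what it was asked to produce."""
    contradictions = 0
    for i in range(trials):
        want_example = i % 2 == 0  # alternate between examples and non-examples
        text = generate(concept, want_example)
        if judge(concept, text) != want_example:
            contradictions += 1
    return contradictions / trials


# Hypothetical stand-ins for the two LLM calls.
def toy_generate(concept: str, want_example: bool) -> str:
    kind = "an example" if want_example else "a non-example"
    return f"{kind} of {concept}"


def toy_judge(concept: str, text: str) -> bool:
    return True  # accepts everything it is shown, including its own non-examples


print(incoherence_score("slant rhyme", toy_generate, toy_judge))  # 0.5
```

A judge that actually agreed with its own outputs would score 0.0. In this sketch, a higher score means more self-contradiction.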

Alongside this, the researchers developed another fully automated way of detecting Potemkins.

It works like this: an LLM is first given a question from a benchmark and asked to answer it.

If it answers correctly, it is prompted to generate five more questions that are based on the same concept.

Next, for each question, the LLM answers it and then grades its own response, acting as its own judge.

If the LLM, acting as judge, marks its own answer as incorrect, the case is flagged as a Potemkin.
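The automated procedure can be sketched as follows. The callables answer, is_correct, generate_related, and self_grade are hypothetical stand-ins for the benchmark’s grader and for LLM calls. The toy stubs exist only so the sketch runs and say nothing about real model behaviour.

```python
from typing import Callable, List, Optional


def detect_potemkins(
    question: str,
    answer: Callable[[str], str],                       # LLM answers a question
    is_correct: Callable[[str, str], bool],             # benchmark's ground-truth grader
    generate_related: Callable[[str, int], List[str]],  # LLM writes questions on the same concept
    self_grade: Callable[[str, str], bool],             # LLM judges its own answer
    n_related: int = 5,
) -> Optional[List[str]]:
    """Return the related questions flagged as Potemkins, or None if the
    seed benchmark question was answered incorrectly."""
    if not is_correct(question, answer(question)):
        return None  # the procedure only continues after a correct seed answer
    flagged = []
    for q in generate_related(question, n_related):
        a = answer(q)
        if not self_grade(q, a):  # the model, as judge, rejects its own answer
            flagged.append(q)
    return flagged


# Toy stubs so the sketch runs; they do not model any real LLM.
def answer(q: str) -> str:
    return "42"


def is_correct(q: str, a: str) -> bool:
    return a == "42"


def generate_related(q: str, n: int) -> List[str]:
    return [f"{q} (variant {i})" for i in range(n)]


def self_grade(q: str, a: str) -> bool:
    return not q.endswith(("variant 1)", "variant 3)"))  # toy: rejects two answers


flagged = detect_potemkins("What is 6 x 7?", answer, is_correct, generate_related, self_grade)
print(flagged)
print(len(flagged) / 5)  # 0.4
```

Here the toy self-grader rejects two of the five generated questions, so two are flagged and the rate for this seed question is 0.4.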

The results of this automated test show that the Potemkin rate is still high (0.62) across the LLMs tested.

How Bad Is It For LLMs (And For Us)?

Potemkins are to conceptual knowledge what hallucinations are to factual knowledge.

Hallucinations are all about an LLM making up false facts. A Potemkin, on the other hand, is when an LLM creates a false sense of conceptual understanding.

This should worry us: hallucinations can be uncovered through fact-checking, but Potemkins are subtle and much harder to detect.

Today’s benchmarks, which we use as a measure of true concept understanding in LLMs, would have been valid if there were no Potemkins, but unfortunately, this is not the case.

LLMs do come with Potemkin understanding, and this invalidates the use of existing benchmarks as measures of true understanding.

What we need now are newer benchmarks and new LLM training methodologies that can detect and reduce Potemkin rates in LLMs.

Otherwise, we are just building castles on sand.

Further Reading

Research paper titled ‘Potemkin Understanding in Large Language Models’ published on arXiv

Research paper titled ‘Shortcut Learning in Deep Neural Networks’ published on arXiv

Research paper titled ‘LLMs’ Understanding of Natural Language Revealed’ published on arXiv

Source Of Images

All images used in the article are created by the author or obtained from the original research paper unless stated otherwise.
