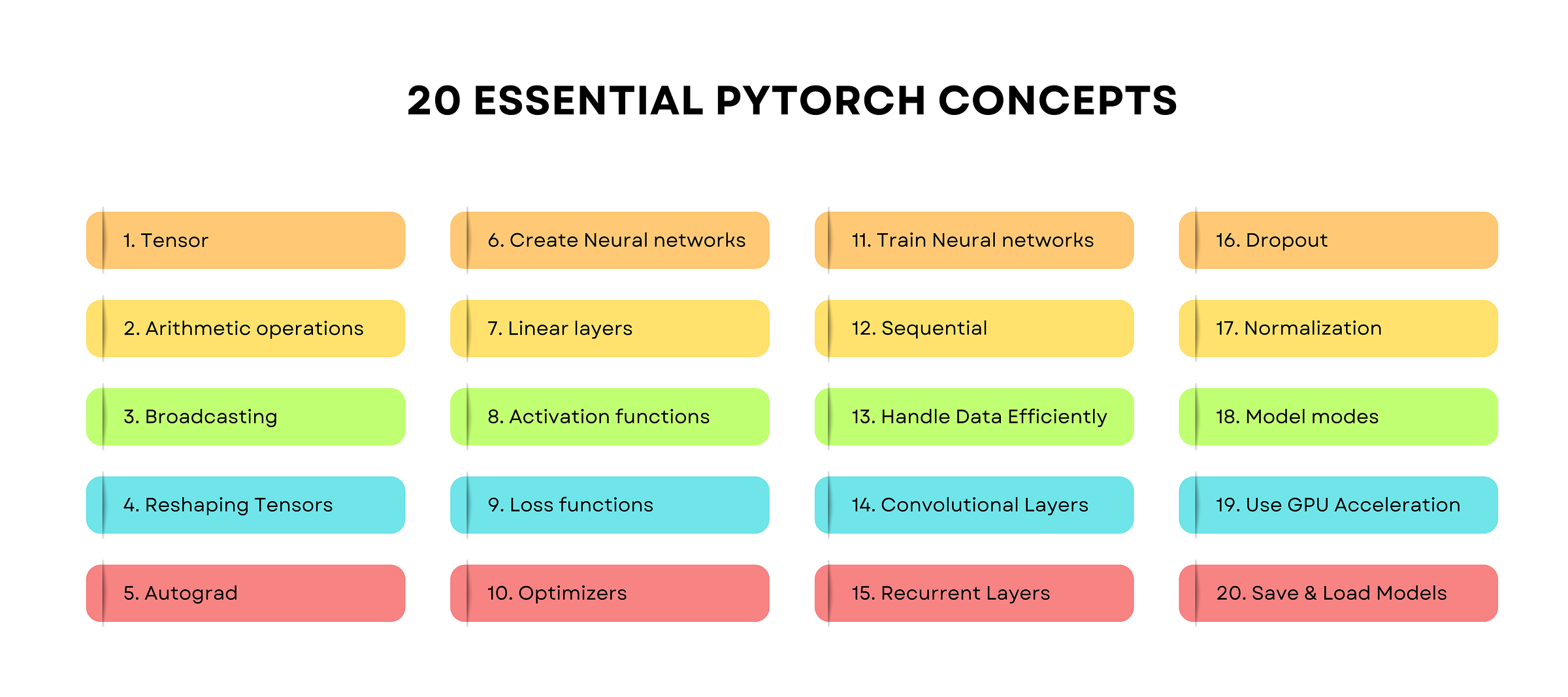

20 PyTorch Concepts, Explained Simply

Understand PyTorch well and supercharge your AI engineering skills today.

🎁 Become a paid subscriber to ‘Into AI’ today at a special 25% discount on the annual subscription.

PyTorch is one of the most important and most popular deep learning frameworks today.

It was built on top of the Lua-based Torch library at Meta and open-sourced in 2017.

Since its release, the library has been used to build almost every important modern AI innovation, from Tesla’s self-driving cars to OpenAI’s ChatGPT.

Let’s learn the 20 most important concepts to improve our understanding of PyTorch from the ground up.

Before we start, I want to introduce you to my book ‘LLMs In 100 Images’.

It is a collection of 100 easy-to-follow visuals that explain the most important concepts you need to master to understand LLMs today.

Grab your copy today at a special early bird discount using this link.

1. Tensor

A Tensor is the core data structure and computational unit of PyTorch.

PyTorch tensors are multi-dimensional arrays that contain values of the same data type. They can be thought of as a NumPy array, but highly optimized and GPU-compatible.

A Tensor is created from a Python list as follows:

import torch

# Create tensor from a list

x = tensor.tensor([1,2,3])

print(x)

#tensor([1, 2, 3])An uninitialized Tensor is created using

torch.empty(shape)

# Create a 3x2 tensor with uninitialized values

x = torch.empty(3,2)Tensors initialized with zeros or ones are created using

torch.zeros(shape)ortorch.ones(shape)

# Create a 3x2 tensor with zeros

x = torch.zeros(3, 2)

print(x)

“”“

tensor([[0., 0.],

[0., 0.],

[0., 0.]])

“”“# Create a 3x2 tensor with ones

x = torch.ones(3, 2)

print(x)

“”“

tensor([[1., 1.],

[1., 1.],

[1., 1.]])

“”“Tensors initialized with random values from a uniform distribution on the interval of

0to1(not including1) are created usingtorch.rand(shape)

# Create a 2x2 tensor with random values from the uniform distribution

x = torch.rand(2,2)

print(x)

“”“

tensor([[0.6051, 0.0569],

[0.7959, 0.0452]])

“”“Tensors initialized with random numbers from a normal distribution with mean

0and variance1are created usingtorch.randn(shape)

# Create a 2x2 tensor with random values from the standard normal distribution

x = torch.randn(2,2)

print(x)

“”“

tensor([[ 0.7346, -0.3198],

[ 0.9044, 0.0995]])

“”“torch.arange(start, end, step)is used to create a 1-D tensor with evenly spaced values within a given interval

# Create a 1-D tensor

x = torch.arange(0, 10, 2)

print(x)

# tensor([0, 2, 4, 6, 8])2. Arithmetic operations on Tensors

Element-wise arithmetic operations on tensors are performed as follows.

x = torch.tensor([3, 2, 1])

y = torch.tensor([1, 2, 3])

# Element-wise arithmetic operations

add = x + y # Addition

subtract = x - y # Subtraction

multiply = x * y # Multiplication

divide = x / y # DivisionAll of the above operations create new tensors.

In-place operations (that modify the original tensor) are performed using the following methods with the _ suffix, as shown below.

# In-place operations (modifies x)

x = torch.tensor([10, 20, 30], dtype=torch.float32)

x.add_(2)

x.sub_(3)

x.mul_(5)

x.div_(2) 3. Broadcasting

Broadcasting allows PyTorch to perform operations on tensors of different shapes.

This is done by automatically expanding the smaller tensor to match the larger one, without actually copying/ duplicating its data in memory.

# Without broadcasting (manual)

a = torch.tensor([1, 2, 3])

b = torch.tensor([10])

b_repeated = b.repeat(3) # [10, 10, 10]

result = a + b_repeated # [11, 12, 13]

# With broadcasting (automatic)

a = torch.tensor([1, 2, 3])

b = torch.tensor([10])

result = a + b # [11, 12, 13] 4. Reshaping Tensors

reshape and view are two important methods used to reshape tensors in PyTorch.

viewreturns a reshaped tensor but requires the original tensor to be contiguous in memory.

# Create tensor

x = torch.randn(2, 3, 4)

# Check if the tensor is contiguous in memory

print(x.is_contiguous()) # True

# Reshape using view

y = x.view(6, 4)# Transpose the tensor, which returns a non-contiguous tensor

x_t = x.transpose(1, 2)

print(x_t.is_contiguous()) # False

# Reshape using view

y_2 = x_t.view(6, 4) #Throws an errorreshapereturns a reshaped tensor even if the original tensor is non-contiguous in memory

# Reshape using ‘reshape’

y_2 = x_t.reshape(6, 4) Apart from these, there are other methods that you will commonly encounter being used in PyTorch code. These are as follows:

unsqueezeandsqueezeare used to add or remove a dimension of size1to a tensor, respectively.

# Create tensor of shape (3, 4)

x = torch.randn(3, 4)

# Add a new dimension at the front (dim=0)

x_unsq0 = x.unsqueeze(0)

# shape: (1, 3, 4)

# Add a new dimension in the middle (dim=1)

x_unsq1 = x.unsqueeze(1)

# shape: (3, 1, 4)

# Add a new dimension at the end (dim=2)

x_unsq2 = x.unsqueeze(2)

# shape: (3, 4, 1)# Create a tensor

x = torch.randn(1, 3, 1, 4, 1)

# Remove all size-1 dimensions

x_sq_all = x.squeeze()

# shape: (3, 4)

# Remove only a specific dimension of size 1

x_sq0 = x.squeeze(0) # remove dim 0 → shape: (3, 1, 4, 1)

x_sq2 = x.squeeze(2) # remove dim 2 → shape: (1, 3, 4, 1)flattenis used to turn a tensor or its selected dimensions into 1-dimension, whileunflattensplits a dimension into multiple dimensions.

# Create a tensor

x = torch.randn(2, 3, 4)

# Flatten into 1-D

y = x.flatten()

# shape: (24,)

# Flatten into 1-D starting from dim 1

y = x.flatten(start_dim=1) # shape: (2, 12)# Create a 1-D tensor

x = torch.arange(24)

# shape: (24,)

# Unflatten the first dimension into (2, 3, 4)

y = torch.unflatten(x, dim=0, sizes=(2, 3, 4))

# shape: (2, 3, 4)transposeswaps two specified dimensions of a tensor whilepermutereorders all dimensions of a tensor in any order.

# Create a tensor

x = torch.randn(2, 3, 4) # shape: (2, 3, 4)

# Transpose dim 1 and dim 2

y = x.transpose(1, 2) # shape: (2, 4, 3)# Create a 2D tensor

m = torch.randn(3, 4) # shape: (3, 4)

# Shorthand for transpose applicable only to 2D tensors

m_t = m.t() # shape: (4, 3)# Use ‘permute’ to reorder all dims as required

y = x.permute(2, 0, 1) # shape: (4, 2, 3)expandbroadcasts a tensor to a larger size without copying data, whilerepeatdoes so by copying the values.

# Create a tensor

x = torch.randn(1, 3)

y = x.expand(4, 3) # # x’s single row is broadcasted 4 times

# shape: (4, 3)

#’repeat’ works by repeating each dimension by the given factor

y = x.repeat(4, 1) # x’s dim 0 is duplicated 4 times in memory, dim 1 is duplicated once

# shape: (4, 3)stackcombines tensors by creating a new dimension, whilecatjoins or concatenates tensors along an existing dimension.

# Create tensors

a = torch.tensor([1, 2, 3])

b = torch.tensor([4, 5, 6])

# Stack along a new dimension 0

out = torch.stack([a, b], dim=0)

print(out.shape)

# torch.Size([2, 3])# Concatenate along dimension 0

out = torch.cat([a, b], dim=0)

print(out.shape)

# torch.Size([6])5. Autograd

Autograd is PyTorch’s automatic differentiation engine. It is used to automatically compute gradients of tensors with respect to other tensors.

This is an important operation in training deep learning neural networks, which involves computing the gradients of a loss function with respect to the model parameters.

To track operations on a tensor for gradient computation, we set

requires_grad=True.

PyTorch will track all operations on x as we set requires_grad=True in the example given below.

# Create a tensor that requires gradients

x = torch.tensor(2.0, requires_grad=True)Operations on a tensor with requires_grad=True are recorded on a dynamic computational graph, which is a directed acyclic graph (DAG).

Shown in the example below, PyTorch builds a computational graph for the following function behind the scenes.

# Define a function (y = x^2 + 2x + 2)

y = x**2 + 2*x + 2