Superfast Matrix-Multiplication-Free LLMs Are Finally Here

A deep dive into Matrix-Multiplication-Free LLMs that might drastically reduce AI's current dependence on GPUs

A recent research paper published on arXiv proposes major changes to LLMs as we know them today.

The researchers involved in the project eliminated Matrix Multiplication (MatMul), a core mathematical operation performed in LLMs.

They showed how their new MatMul-free LLMs can perform strongly even at billion-parameter scales and how they can even beat the performance of traditional LLMs on certain tasks!

This is huge and has been made possible just because of this single optimisation!

This matters because the MatMul operation, although essential to LLMs, is highly computationally expensive. It is what makes today’s LLMs so reliant on Graphics Processing Units (GPUs) for their training and inference.

But this might not be true anymore!

Here’s a story where we deep dive into how these new MatMul-free LLMs were made possible and how they positively influence the future of AI.

But First, What Even Is Matrix Multiplication?

Matrix Multiplication is an algebraic operation where two matrices are multiplied together to produce a third matrix under the condition that the number of columns in the first matrix must be equal to the number of rows in the second matrix.
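To make the definition concrete, here is a minimal pure-Python sketch. The function name `matmul` and the example matrices are only illustrative; real LLM code would call an optimized library instead.

```python
def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m), both given as lists of rows."""
    n, k = len(a), len(a[0])
    if len(b) != k:
        raise ValueError("columns of the first matrix must equal rows of the second")
    m = len(b[0])
    # Entry (i, j) is the dot product of row i of a with column j of b.
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(m)]
            for i in range(n)]


a = [[1, 2, 3],
     [4, 5, 6]]       # 2 x 3
b = [[7, 8],
     [9, 10],
     [11, 12]]        # 3 x 2
print(matmul(a, b))   # [[58, 64], [139, 154]]
```

Each output entry costs k multiplications and k - 1 additions, which is why the cost grows so quickly with matrix size.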

How Do LLMs Use Matrix Multiplication?

Matrix Multiplication (MatMul) is a core mathematical operation performed in LLMs.

Firstly, MatMul is used to generate Token and Positional Embeddings from input text.

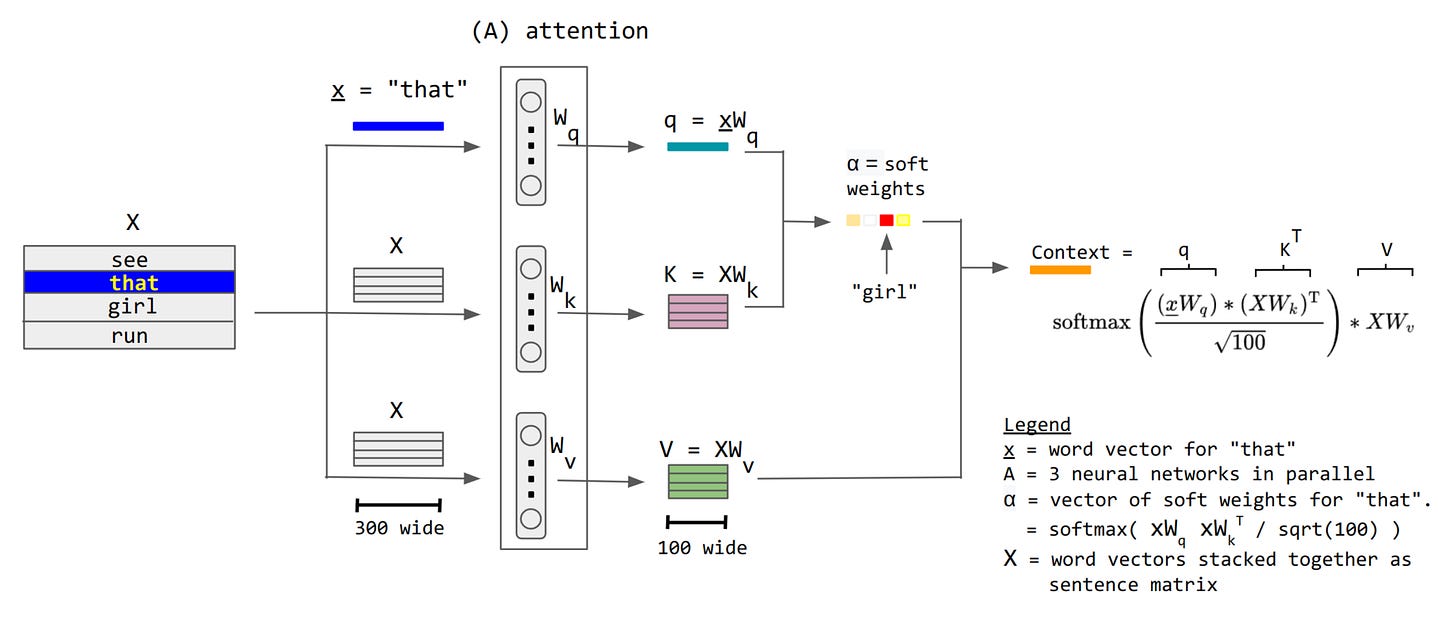

Then, the Self-attention mechanism in the Transformer within an LLM uses MatMul to compute the attention score matrix from the Query (Q) and Key (K) matrices, and again to apply those scores to the Value (V) matrix.

These Q, K and V matrices, in turn, are obtained using MatMul operations over the input and learned weight matrices.

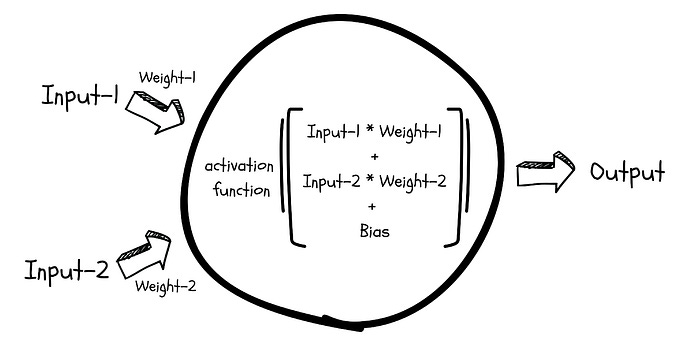
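To see how many MatMuls hide inside a single attention layer, here is a rough sketch of standard scaled dot-product self-attention in plain Python. It assumes a single head with no causal mask, and the helper names (`matmul`, `softmax`, `self_attention`) are illustrative rather than taken from any library.

```python
import math


def matmul(a, b):
    """Plain matrix product of two lists-of-rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x, w_q, w_k, w_v):
    """Single-head attention over x (tokens x features); uses five MatMuls."""
    q = matmul(x, w_q)                                   # MatMul 1
    k = matmul(x, w_k)                                   # MatMul 2
    v = matmul(x, w_v)                                   # MatMul 3
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]                 # transpose of K
    scores = matmul(q, k_t)                              # MatMul 4: Q K^T
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)                            # MatMul 5: weights V


tokens = [[1.0, 0.0], [0.0, 1.0]]       # two tokens, two features each
identity = [[1.0, 0.0], [0.0, 1.0]]     # stand-in for learned weights
print(self_attention(tokens, identity, identity, identity))
```

Note that the score matrix has one entry per pair of tokens, so this part of the cost grows quadratically with sequence length.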

Next, MatMul is applied between inputs and weights, with biases added afterwards (a linear transformation followed by a non-linear activation function), producing the output of the dense feedforward networks within an LLM.

Finally, the output layer of an LLM uses this operation to generate the final predictions.

How Is Matrix Multiplication Optimized Today?

MatMul operations are made efficient using CUDA and libraries like cuBLAS that parallelize and accelerate them using powerful GPUs.

However, they still account for the largest proportion of computational expense during LLMs' training and inference phases.

To date, much effort has been made to replace these operations (with AdderNet, Spiking Neural Nets, Binarized Nets, and BitNet), but all these approaches have failed when used at scale.

But not anymore!

But, before we move forward to understand these MatMul-free LLMs, we need to learn more about the components that make traditional LLMs.

Understanding The Components of Traditional LLMs

The Transformer architecture in traditional LLMs has two essential components that help it understand sequential information.

Token Mixer

This component handles the relationships between different tokens in a sequence.

Token mixing in traditional LLMs is achieved with Self-attention mechanisms.

Channel Mixer

This component handles integrating information across different channels or feature dimensions of the input representations.

Channel Mixing is achieved using Position-wise Feedforward Networks that refine and integrate the features extracted in the token mixing step.

Both of these steps can be seen below in the Transformer architecture.

Now that we know them, let’s move on to the real deal!

How Is MatMul Eliminated In The New LLM Architecture?

The MatMul-free LLM was built by making three key modifications to the traditional LLM architecture:

MatMul-free Dense Layers

MatMul-free Token Mixer (in place of Self-attention)

MatMul-free Channel Mixer

Let’s explore each of these in detail.

1. MatMul-free Dense Layers

Inspired by BitNet, the dense layers were first replaced with BitLinear modules.

These use Ternary weights, meaning that each entry in the weight matrix can take only one of three values: -1, 0 or +1.

This constraint is called Ternary Quantization. Because multiplying by -1, 0 or +1 amounts to subtracting, skipping or adding the input, it replaces the multiplication operations in MatMul with simple addition or subtraction operations.
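Here is a small sketch of what that looks like in practice: a vector-matrix product with ternary weights that never multiplies. The function name `ternary_matvec` is hypothetical and the loop is written for clarity, not speed.

```python
def ternary_matvec(x, w):
    """Compute y[j] = sum_i x[i] * w[i][j] for w[i][j] in {-1, 0, +1}
    using only additions and subtractions."""
    out = [0.0] * len(w[0])
    for i, x_i in enumerate(x):
        for j, w_ij in enumerate(w[i]):
            if w_ij == 1:
                out[j] += x_i      # +1: add the input
            elif w_ij == -1:
                out[j] -= x_i      # -1: subtract the input
            # 0: the input is skipped entirely
    return out


x = [0.5, -2.0, 3.0]
w = [[1, 0],
     [-1, 1],
     [0, -1]]
print(ternary_matvec(x, w))   # [2.5, -5.0]
```

The result matches an ordinary MatMul with the same weights, but the inner loop contains no multiplications at all.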

Hardware-efficient Fused BitLinear Layer

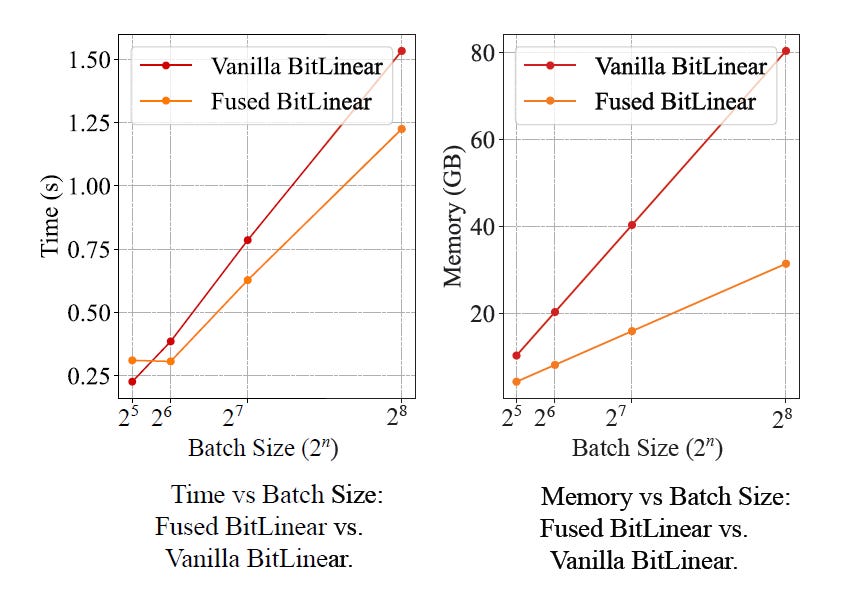

The original BitLinear layer in BitNet applies RMSNorm to the activations before they reach the BitLinear input.

However, this implementation was found to be inefficient as it introduced many I/O operations between the different types of memory in GPUs (HBM and SRAM).

Thus, the researchers introduced a new hardware-efficient Fused BitLinear Layer in which the RMSNorm and quantization steps were fused into a single operation in SRAM instead of being performed separately using multiple memory operations.
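Python cannot express where data lives on a GPU, but the idea of fusing can still be sketched. The version below assumes RMSNorm without a learnable gain and a symmetric 8-bit absmax quantizer for activations; both are assumptions made for illustration, not details confirmed above, and the function names are hypothetical.

```python
import math


def rmsnorm_then_quantize(x, eps=1e-6, bits=8):
    """Unfused: the normalized vector is fully written out, then read back."""
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    normed = [v / rms for v in x]                  # intermediate result stored
    q_max = 2 ** (bits - 1) - 1
    scale = q_max / max(max(abs(v) for v in normed), eps)
    return [round(v * scale) for v in normed], scale


def fused_rmsnorm_quantize(x, eps=1e-6, bits=8):
    """Fused: both statistics come from x itself, so the normalized
    intermediate is never stored and each output is produced in one step."""
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    abs_max = max(abs(v) for v in x) / rms         # max of the normalized values
    q_max = 2 ** (bits - 1) - 1
    scale = q_max / max(abs_max, eps)
    return [round(v / rms * scale) for v in x], scale


activations = [0.5, -1.0, 2.0, 4.0]
print(fused_rmsnorm_quantize(activations))
```

Both functions return the same integers and scale. On a GPU, the saving comes from not writing the intermediate to slow memory and reading it back.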

2. MatMul-free Token Mixer

The Self-attention token mixer, which involves MatMul between Query (Q), Key (K) and Value (V) matrices, was replaced by a modified Gated Recurrent Unit (GRU) architecture.

The following changes led to this modified GRU architecture:

Hidden-state-related weights and tanh activations between hidden states were removed

Candidate hidden state computation was decoupled from the hidden state and simplified to a linear transformation of the input

A data-dependent output gate was added between the hidden state and the output (as inspired by the LSTM architecture)

All weights were replaced with Ternary weights with just three possible values: -1, 0 and +1
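Put together, one time step of such a recurrent token mixer could look roughly like the sketch below. It follows the four changes listed above but makes its own assumptions: sigmoid gates, no biases, and a candidate state that is a plain linear map of the input. The paper's exact activation choices may differ, and all names here are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def ternary_matvec(x, w):
    """x @ w for ternary w, using only additions and subtractions."""
    out = [0.0] * len(w[0])
    for i, x_i in enumerate(x):
        for j, w_ij in enumerate(w[i]):
            if w_ij == 1:
                out[j] += x_i
            elif w_ij == -1:
                out[j] -= x_i
    return out


def gru_like_step(x_t, h_prev, w_f, w_c, w_g, w_o):
    """One step of a simplified, MatMul-free GRU-style token mixer."""
    # Forget gate depends on the input only (no hidden-state weights).
    f = [sigmoid(v) for v in ternary_matvec(x_t, w_f)]
    # Candidate state is a linear transformation of the input (no tanh).
    c = ternary_matvec(x_t, w_c)
    # Element-wise blend of the old state and the candidate.
    h = [f_i * h_i + (1.0 - f_i) * c_i for f_i, h_i, c_i in zip(f, h_prev, c)]
    # Data-dependent output gate between the hidden state and the output.
    g = [sigmoid(v) for v in ternary_matvec(x_t, w_g)]
    gated = [g_i * h_i for g_i, h_i in zip(g, h)]
    return ternary_matvec(gated, w_o), h


w = [[1, -1], [0, 1]]                   # toy ternary weights, reused for every gate
state = [0.0, 0.0]
for token in ([1.0, 2.0], [0.5, -1.0]):
    output, state = gru_like_step(token, state, w, w, w, w)
print(output, state)
```

The only multiplications left are element-wise products between vectors, which are far cheaper than a matrix multiplication. The hidden state is also a fixed-size vector, so the cost per token does not grow with sequence length.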

3. MatMul-free Channel Mixer

Instead of Feed-Forward Networks, Gated Linear Units (GLU) were used as the Channel Mixer in the MatMul-free architecture.

Ternary weights were again used with GLUs to replace matrix multiplications with simpler addition and subtraction operations.
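As a rough illustration, a GLU-style channel mixer with ternary weights might be sketched as follows. The SiLU activation on the gate branch and the three projection names (`w_gate`, `w_up`, `w_down`) are assumptions made for this example.

```python
import math


def silu(z):
    """SiLU (swish) activation: z * sigmoid(z)."""
    return z / (1.0 + math.exp(-z))


def ternary_matvec(x, w):
    """x @ w for ternary w, using only additions and subtractions."""
    out = [0.0] * len(w[0])
    for i, x_i in enumerate(x):
        for j, w_ij in enumerate(w[i]):
            if w_ij == 1:
                out[j] += x_i
            elif w_ij == -1:
                out[j] -= x_i
    return out


def glu_channel_mixer(x, w_gate, w_up, w_down):
    """Mix information across the feature dimensions of one token."""
    gate = ternary_matvec(x, w_gate)                    # gate branch
    up = ternary_matvec(x, w_up)                        # value branch
    mixed = [silu(g) * u for g, u in zip(gate, up)]     # element-wise gating
    return ternary_matvec(mixed, w_down)                # project back down


x = [2.0, 3.0]
w_gate = [[1], [0]]     # gate reads feature 0
w_up = [[0], [1]]       # value reads feature 1
w_down = [[1]]          # pass the single hidden unit through
print(glu_channel_mixer(x, w_gate, w_up, w_down))
```

As with the token mixer, every projection is ternary, so the only real multiplications are the element-wise ones in the gating step.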

How Well Did The MatMul-free LLMs Perform?

The MatMul-free LLMs displayed strong zero-shot performance on diverse language tasks, ranging from question answering and commonsense reasoning to physical understanding.

They achieved competitive performance when compared with the Transformer++ architecture used in many popular LLMs such as Llama-2.

Notably, the 2.7B MatMul-free LLM outperformed its Transformer++ counterpart on the ARC-Challenge and OpenbookQA benchmarks!

Regarding memory efficiency, the MatMul-free LLM demonstrated lower memory usage and latency than Transformer++ across all model sizes.

For the largest model size of 13B parameters, the MatMul-free LLM used only 4.19 GB of GPU memory and had a latency of 695.48 ms, whereas Transformer++ required 48.50 GB of memory and had a latency of 3183.10 ms.
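To put those figures in perspective, the short calculation below turns them into ratios. It uses only the four numbers quoted above.

```python
matmul_free = {"memory_gb": 4.19, "latency_ms": 695.48}
transformer_pp = {"memory_gb": 48.50, "latency_ms": 3183.10}

memory_ratio = transformer_pp["memory_gb"] / matmul_free["memory_gb"]
latency_ratio = transformer_pp["latency_ms"] / matmul_free["latency_ms"]

print(f"Memory:  {memory_ratio:.1f}x lower")    # Memory:  11.6x lower
print(f"Latency: {latency_ratio:.1f}x lower")   # Latency: 4.6x lower
```

In other words, at 13B parameters the MatMul-free model needs roughly one-twelfth of the memory and under a quarter of the latency.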

Researchers also compared their Fused BitLinear implementation with the vanilla BitLinear implementation and noted that it improved the LLM training speed by 25.6% and reduced memory consumption by 61.0%!

They then built a custom hardware solution using a Field-Programmable Gate Array (FPGA) to better perform the ternary operations in the MatMul-free LLMs, further decreasing these models’ power consumption, latency and memory usage.

Notably, their 1.3B parameter model ran on just 13W of power while generating text at human reading speed, a power budget comparable to that of the human brain!

Finally, when scaling projections for MatMul-free LLMs were obtained, they showed a steeper descent in error than Transformer++.

This means that these LLMs make more efficient use of additional training compute to improve their performance.

This is quite promising for future MatMul-free LLMs that scale to multi-billion or even trillion parameters.

Due to computational constraints faced by the research team, MatMul-free LLMs have not yet been tested on an extremely large scale and cannot be compared with models with 100B+ parameters (like GPT-4).

But I still consider them a big step towards improving our approach to training and using LLMs without over-relying on powerful GPUs, as is common today.

What are your thoughts about them? Let me know in the comments below.