Learn To Train A Reasoning Model From Scratch Using GRPO

Train the open-source Qwen model into a reasoning model using GRPO with Unsloth, a library that handles much of the complicated heavy lifting for you. (10-minute read)

Reasoning LLMs (also called Large Reasoning Models) are quite popular these days.

These are LLMs that think through a problem step by step before they answer.

While most early research on training Reasoning LLMs has been kept a “trade secret”, many recent projects have laid this process out in the open (DeepSeek-R1, DeepSeekMath, Kimi-k1.5, and DAPO).

These approaches train LLMs to generate long chain-of-thought (CoT) outputs at inference time, which helps them reason better.

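They also introduce modified reinforcement learning (RL) algorithms, such as GRPO and DAPO, which are more efficient variants of Proximal Policy Optimization (PPO), the RL algorithm originally developed at OpenAI. GRPO, for example, drops PPO's separate value (critic) model, which reduces the memory needed during training.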

In this lesson, we will learn about the basics of GRPO (Group Relative Policy Optimization), a popular RL algorithm for training Reasoning LLMs.
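As a rough preview of where the "group relative" name comes from: for each prompt, GRPO samples a group of responses, scores each one with a reward, and uses each response's reward relative to the rest of its group as the advantage, rather than an estimate from a learned value model. Below is a minimal sketch of just that normalization step in plain Python, assuming the rewards are already computed. The function name `group_relative_advantages` and the `eps` value are hypothetical, illustrative choices, not part of Unsloth or any other library, and real implementations differ on details such as which standard deviation they use.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards: list[float], eps: float = 1e-4) -> list[float]:
    """Normalize rewards within one group of responses to the same prompt.

    Each advantage is (r_i - mean(r)) / (std(r) + eps). This sketch uses the
    population standard deviation; the hypothetical eps avoids division by zero.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical rewards for 4 sampled answers to one prompt (1.0 = correct, 0.0 = wrong).
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
print([round(a, 3) for a in advantages])  # → [1.0, -1.0, -1.0, 1.0]
```

Responses that beat their group's average get a positive advantage and are reinforced, while below-average ones are pushed down. If every response in a group receives the same reward, all advantages are zero, so that group contributes no reward-driven learning signal.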

Then we will get our hands dirty by writing the code to train a reasoning LLM, walking through each step of the process without jargon.

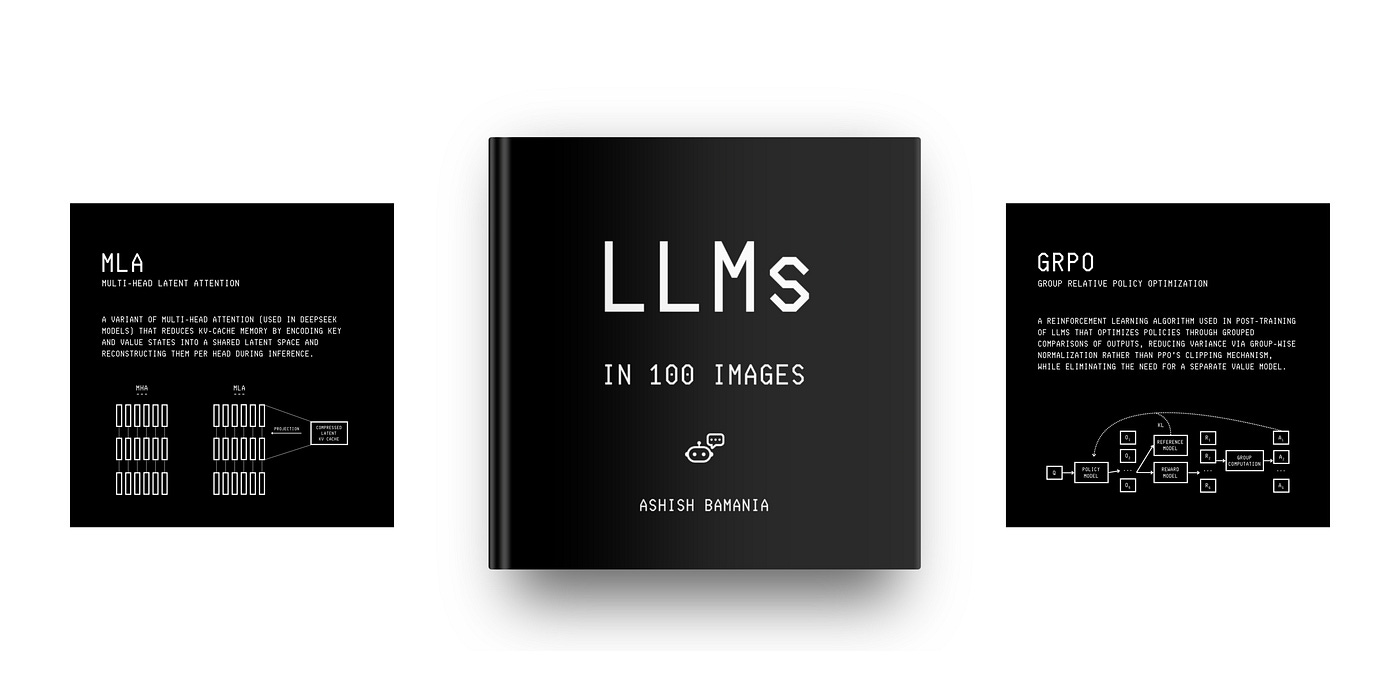
