Vision Transformers: Straight From The Original Research Paper

A deep dive into the Vision Transformer (ViT) architecture that transformed Computer Vision, and how to build one from scratch

Introduced in 2017, the Transformer architecture changed natural language processing forever and quickly became the most popular model for NLP tasks.

At the time, Convolutional Neural Networks (CNNs) were still considered the most effective models for image processing and Computer Vision tasks.

But this was about to change.

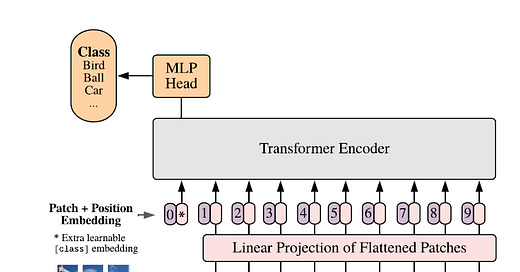

In 2021, a team of researchers from Google Brain published their findings, in which they applied a standard Transformer directly to sequences of image patches for image classification.
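The core idea is to cut an image into fixed-size, non-overlapping patches, flatten each patch into a vector, and treat the resulting vectors as a sequence of tokens, much like words in a sentence. The minimal sketch below, in dependency-free Python, shows only this patch-extraction step. The function name `image_to_patch_sequence` and the nested-list `[row][col][channel]` image layout are illustrative assumptions, not code from the paper. It uses 16 x 16 patches, the size referenced in the paper's title ("An Image is Worth 16x16 Words"). In the paper, these flattened patches are then linearly projected and combined with position embeddings before entering the Transformer encoder; those steps are not shown here.

```python
from typing import List

# Hypothetical layout for this sketch: an image is a nested list indexed [row][col][channel].
Image = List[List[List[float]]]


def image_to_patch_sequence(image: Image, patch_size: int) -> List[List[float]]:
    """Split an H x W x C image into non-overlapping patch_size x patch_size
    patches and flatten each one into a vector of length patch_size**2 * C.

    Patches are returned in row-major (raster) order, giving a sequence of
    N = (H // patch_size) * (W // patch_size) tokens.
    """
    height = len(image)
    width = len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image height and width must be divisible by patch_size")

    patches: List[List[float]] = []
    # Walk over the top-left corner of every patch, left to right, top to bottom.
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            flat: List[float] = []
            # Flatten the patch pixel by pixel, keeping each pixel's channels together.
            for row in range(top, top + patch_size):
                for col in range(left, left + patch_size):
                    flat.extend(image[row][col])
            patches.append(flat)
    return patches


if __name__ == "__main__":
    # A 224 x 224 RGB image filled with zeros, a common ViT input resolution.
    image = [[[0.0, 0.0, 0.0] for _ in range(224)] for _ in range(224)]
    tokens = image_to_patch_sequence(image, patch_size=16)
    print(len(tokens), len(tokens[0]))  # 196 768
```

With 16 x 16 patches, a 224 x 224 RGB image becomes a sequence of 14 x 14 = 196 tokens, each a vector of 16 x 16 x 3 = 768 pixel values. Real implementations usually do this with a single tensor reshape or a strided convolution, but the explicit loops make the ordering of patches and pixels easy to follow.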

Their model achieved excellent results on popular image recognition benchmarks compared to state-of-the-art CNNs, while requiring substantially fewer computational resources to train.

They called their architecture the Vision Transformer (ViT).

In this story, we explore how ViTs transformed Computer Vision and learn to build one from scratch, directly from the original research paper.

Let’s begin!
