Apple's New Research Shows That LLM Reasoning Is Completely Broken

A deep dive into Apple research that exposes the flawed thinking process in state-of-the-art Reasoning LLMs

Large Reasoning Models (LRMs), also called Reasoning LLMs, are becoming quite popular.

These models are specifically trained to take their time and think before they answer, especially when solving tough problems.

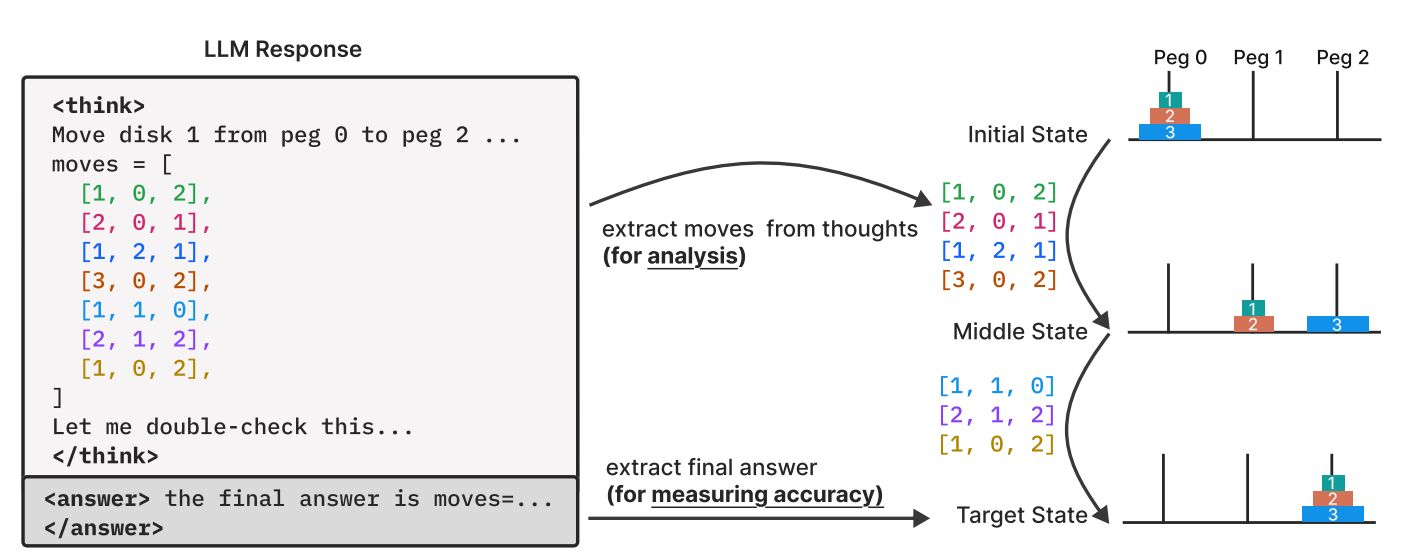

Their thinking mechanism comes from generating a long Chain-of-Thought (CoT) and self-verifying it at inference time (test time) before giving the final answer.

The performance of these models on multiple reasoning benchmarks is very impressive, leading many to believe that we might achieve AGI in just the next few years.

This claim is far from the truth, though.

This is not just my opinion: a recent research paper from Apple supports it (and this is not the first time Apple researchers have called out overstated claims from the big tech giants).

The research shows that the best reasoning LLMs perform no better (or even worse) than their non-reasoning counterparts on low-complexity tasks and that their accuracy completely collapses beyond a certain problem complexity.

The results also show that, beyond a certain problem complexity level, reasoning LLMs spend fewer thinking tokens rather than more, despite having plenty of inference-time token budget left.

One of the most ridiculous findings is that despite being given the algorithms used to arrive at the solution, these models fail to use them, raising the question of whether they are really capable of thinking when solving a problem.

Here’s a story in which we discuss these results in depth and learn why our current state-of-the-art reasoning models need significant improvements before we begin fantasising about a future with superintelligence around.

But First, What Is Reasoning Anyway?

Reasoning LLMs are trained on a huge number of long Chain-of-Thought examples (intermediate reasoning steps that lead to the successful solution of a complex problem) using supervised fine-tuning or reinforcement learning.

Such training enables these LLMs to think and reason through inference-time (or test-time) scaling.

This means that they allocate more computational resources (thinking or reasoning tokens) during inference, which leads to better output quality.

Although effective, this training technique isn’t perfect.

It can cause reasoning LLMs to overthink relatively easy problems, which means that they produce very verbose and repetitive reasoning traces even after finding a solution.

It has also been found that these LLMs do not always reveal the actual reasoning that they used to arrive at the final answer, making their reasoning traces less trustworthy.

Despite multiple research papers revealing these weaknesses in reasoning LLMs, their performance on reasoning benchmarks always seems to improve.

What’s going wrong here?

Testing Reasoning Models On Puzzles Reveals Their Truth

Most benchmarks that reasoning models are tested on are based on mathematical problem-solving.

Because these benchmarks are so widely used, there’s a high chance that their examples have leaked into the training data of reasoning models (Data Contamination).

This could be one of the reasons why all reasoning models perform so well on them.

But what happens when one ditches these benchmarks and evaluates reasoning models on puzzles?

In this research, four puzzles are picked to evaluate reasoning models on. These are:

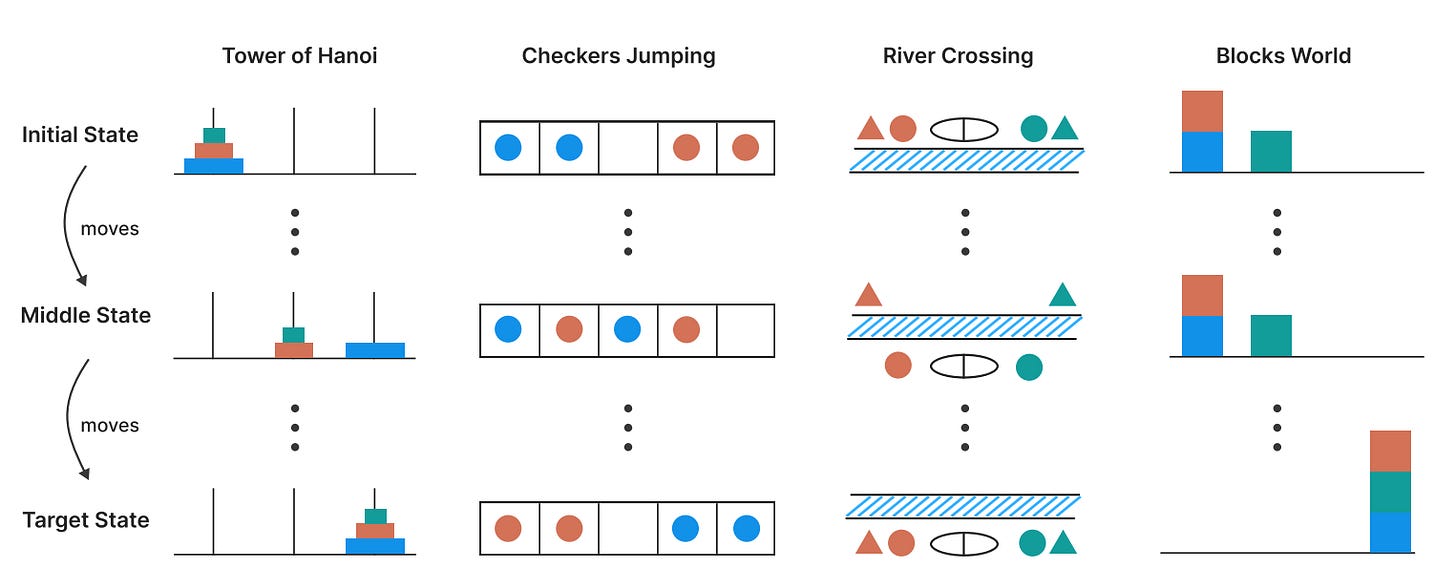

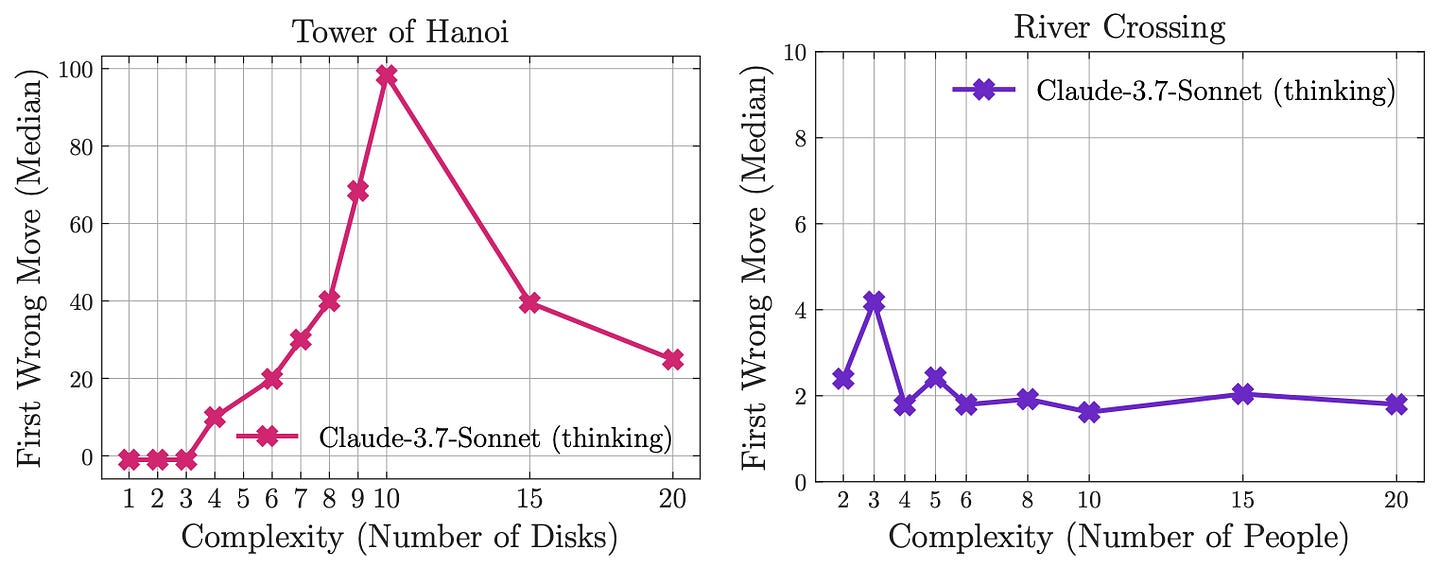

Tower of Hanoi, where disks of different sizes must be moved from one peg to another. The puzzle rules are that only one disk can be moved at a time, and larger disks cannot be placed on smaller ones.

Checker Jumping, where blue and red checkers, separated by one empty space in a 1-D array, must swap sides. Checkers can move by sliding into the empty space or jumping over one checker of the opposite colour, and they can never move backwards.

River Crossing, where a group of people and their bodyguards need to cross a river using a boat. The boat can only fit a few people at a time, and people cannot be left with someone else’s bodyguard unless their own guard is around as well.

Blocks World, where a stack of blocks needs to be rearranged into a new configuration by moving the block on top of the stack, one at a time.
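To make the rules concrete, here is a minimal Python sketch of the two Tower of Hanoi rules described above. The function name `first_illegal_move`, the peg numbering (0 to 2), and the `(source, target)` move format are my own assumptions for illustration, not something taken from the paper.

```python
from typing import List, Tuple

# Assumed move format: (source peg, target peg), pegs numbered 0..2.
Move = Tuple[int, int]


def first_illegal_move(n_disks: int, moves: List[Move]) -> int:
    """Replay moves on a fresh puzzle.

    Returns the index of the first move that breaks a rule,
    or -1 if every move is legal.
    """
    # Peg 0 starts with all disks, the largest (n_disks) at the bottom.
    pegs = [list(range(n_disks, 0, -1)), [], []]
    for i, (src, dst) in enumerate(moves):
        if src == dst or not pegs[src]:
            return i  # no-op move, or nothing to pick up
        disk = pegs[src][-1]  # only the top disk can be moved
        if pegs[dst] and pegs[dst][-1] < disk:
            return i  # a larger disk cannot sit on a smaller one
        pegs[dst].append(pegs[src].pop())
    return -1


print(first_illegal_move(2, [(0, 1), (0, 2), (1, 2)]))  # legal sequence
print(first_illegal_move(2, [(0, 1), (0, 1)]))  # second move is illegal
```

Note that this only checks legality of each move. Checking that the puzzle is actually solved would need one more comparison of the final peg state against the goal.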

But why are puzzles specifically picked for evaluation?

There are multiple reasons for this, the first of which is to avoid data contamination, which exists in popular benchmarks.

Secondly, it is easier to change the complexity of puzzles in a controlled and quantifiable way. This is usually not possible with math problems.

Next, with puzzles, the model’s intermediate reasoning can be studied easily because the puzzle solution is a step-by-step logical process. In math, checking reasoning steps is hard because the solution paths usually vary.

Finally, with puzzles, simulating and precisely checking the logical steps and answers for correctness is easy.
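As a rough illustration of how mechanical this checking can be, here is a small sketch of a Checker Jumping verifier. It assumes red checkers start on the left and move right, blue checkers start on the right and move left, and moves are given as `(from_index, to_index)` pairs. The function name and the move format are hypothetical and are not the paper's actual simulator.

```python
from typing import List, Tuple


def check_checker_solution(n: int, moves: List[Tuple[int, int]]) -> bool:
    """Return True only if moves legally swap n red and n blue checkers."""
    board = ["R"] * n + ["_"] + ["B"] * n
    goal = ["B"] * n + ["_"] + ["R"] * n
    for src, dst in moves:
        if not (0 <= src < len(board) and 0 <= dst < len(board)):
            return False  # move points off the board
        piece = board[src]
        if piece == "_" or board[dst] != "_":
            return False  # must move a checker into the empty space
        forward = 1 if piece == "R" else -1  # no backward moves allowed
        step = dst - src
        if step == 2 * forward:
            jumped = board[src + forward]
            if jumped == "_" or jumped == piece:
                return False  # can only jump one checker of the other colour
        elif step != forward:
            return False  # neither a forward slide nor a forward jump
        board[src], board[dst] = "_", piece
    return board == goal


# Smallest instance: R _ B must become B _ R.
print(check_checker_solution(1, [(0, 1), (2, 0), (1, 2)]))
```

A model's answer can be replayed through a checker like this one move at a time, which is what makes it possible to pinpoint exactly where a solution goes wrong.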

Now, it’s time for the results!

Reasoning Models’ Intelligence Depends On The Problem They Solve

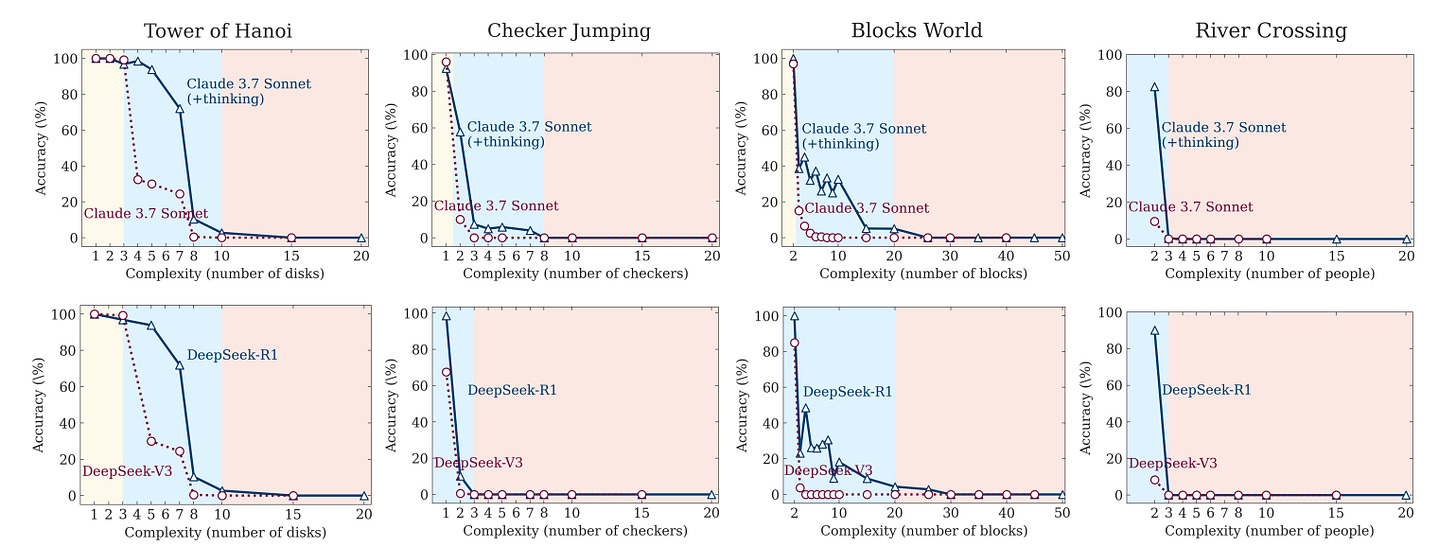

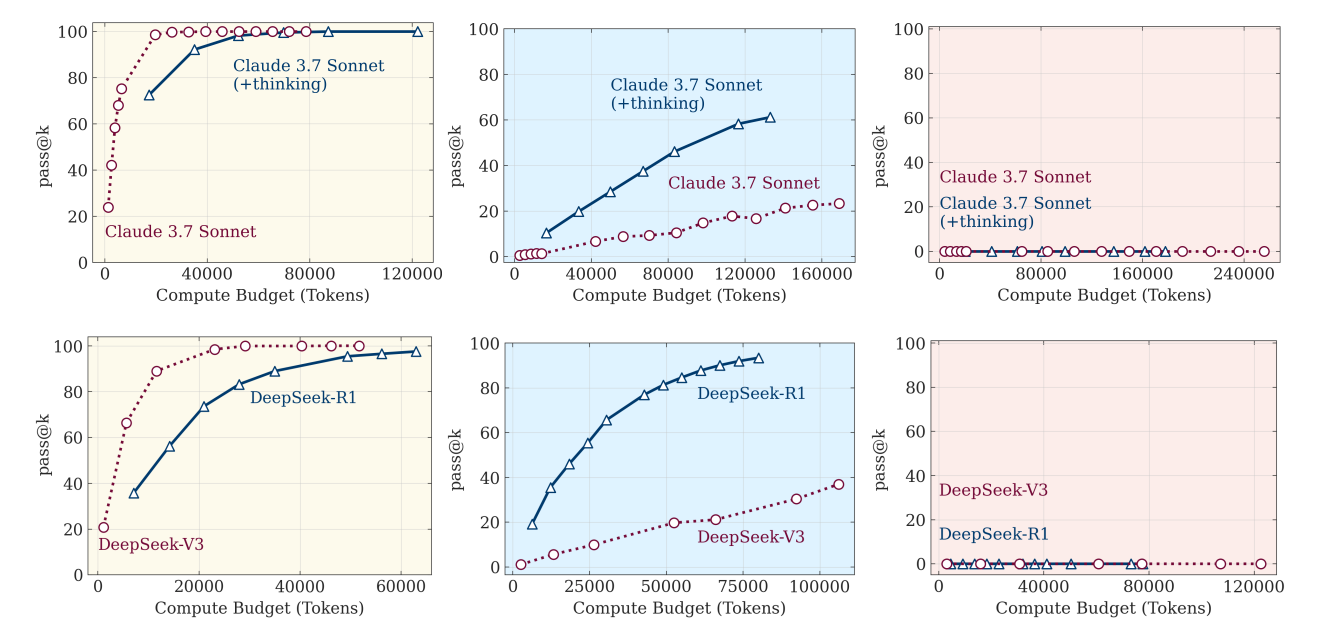

Results show that reasoning models are not always better than non-reasoning models for problem solving.

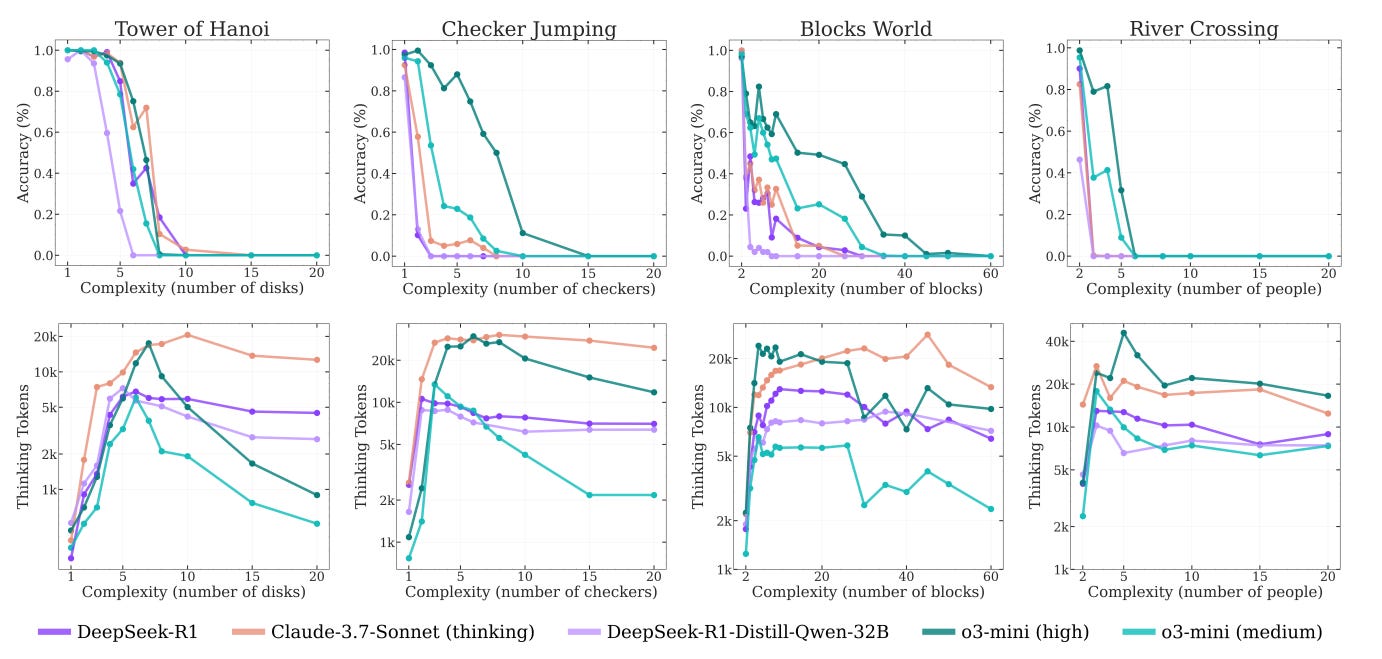

A puzzle’s complexity is controlled through its size N (the number of disks, checkers, crossing elements, or blocks, depending on the puzzle), and reasoning models behave differently at different levels of complexity.

For easy problems, they perform on par with, or even worse than, their non-reasoning counterparts.
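To get a feel for how quickly difficulty grows with N, here is a short sketch using the well-known minimum move counts for two of the puzzles: 2^N - 1 for Tower of Hanoi with N disks, and (N + 1)^2 - 1 for Checker Jumping with N checkers of each colour. The function names are mine, and I am assuming N counts checkers per colour. River Crossing and Blocks World are left out because their solution lengths depend on more than N.

```python
def hanoi_min_moves(n_disks: int) -> int:
    """Minimum moves to solve Tower of Hanoi with n_disks disks."""
    return 2 ** n_disks - 1


def checker_min_moves(n_per_colour: int) -> int:
    """Minimum moves to swap n_per_colour red and blue checkers."""
    return (n_per_colour + 1) ** 2 - 1


# Exponential growth versus quadratic growth in solution length.
for n in (1, 3, 5, 10):
    print(n, hanoi_min_moves(n), checker_min_moves(n))
```

So the same increase in N can mean a very different jump in the number of steps a model has to get right, depending on the puzzle.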

For problems of medium complexity, they do better than the non-reasoning ones (thanks to the long CoT they produce).

For high-complexity problems, both kinds of models fail, even though the reasoning models spend more inference-time tokens.

Reasoning Models Get Lazy When They Are Given A Hard Problem To Solve

The accuracy of reasoning models collapses completely once a problem crosses a certain model-specific complexity threshold.

Ideally, they should increase their reasoning tokens in proportion to the problem complexity.

But instead of trying harder, they reduce their reasoning effort (produce fewer reasoning tokens) near the point where their accuracy starts collapsing, and keep doing so beyond it.

This reveals a limit on their inference-time scaling: reasoning effort does not keep rising with problem complexity, as it should.

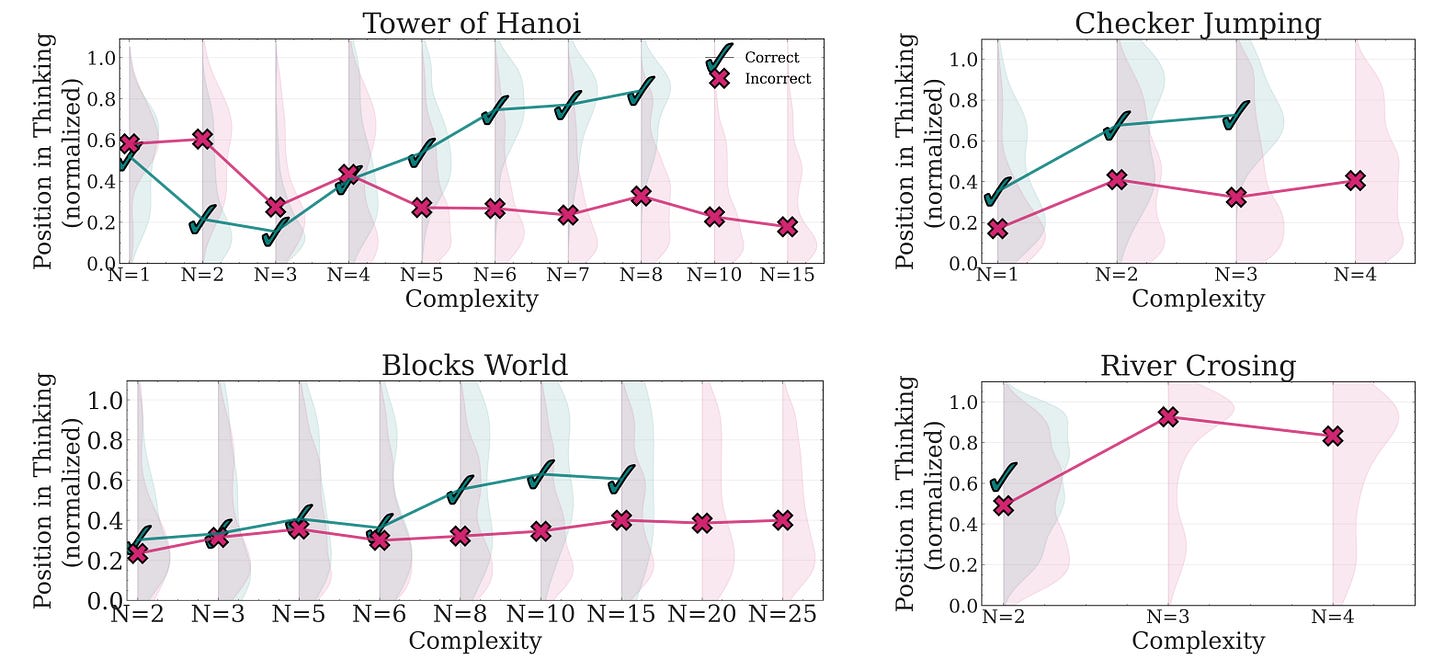

Frontier Reasoning LLMs Still Haven’t Stopped Overthinking

The reasoning traces of Claude-3.7-Sonnet-Thinking show that, for simpler problems, the model often finds a correct solution early in its reasoning trace but then continues to explore incorrect solutions (a behaviour called ‘Overthinking’).

For moderately complex problems, Claude first explores incorrect solutions, finally arriving at the correct solution later in its reasoning trace.

However, as problems get harder, its performance drops to zero (called ‘Reasoning collapse’), no matter how long its reasoning trace is.

The Most Surprising Result Awaits At The End

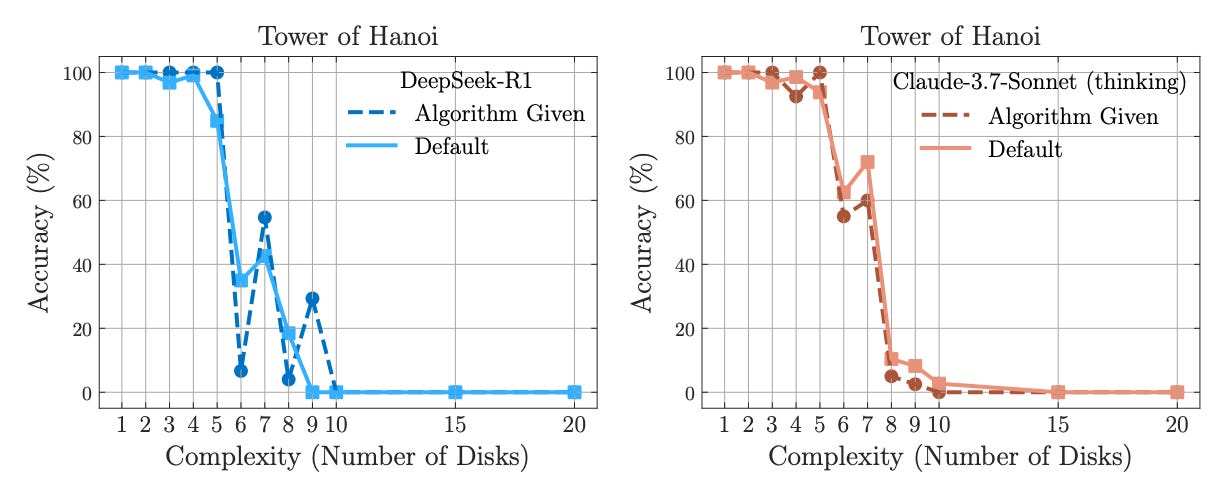

What happens when the reasoning model is given the exact algorithm needed to solve the Tower of Hanoi puzzle?

You’d think the model would use it and drastically improve its performance since now it does not need to search and verify the solution, but simply execute the algorithm to find the answer.

Surprisingly, this does not happen, and reasoning collapse occurs roughly at the same point as when the algorithm is not revealed to the model.

This shows that reasoning models cannot even follow known logical steps to correctly solve a problem, pointing towards their weak symbolic execution and verification skills.
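For reference, this is what a solution algorithm for Tower of Hanoi can look like. It is the standard textbook recursion written as a Python sketch, not necessarily the exact pseudocode given to the models in the paper, and the peg numbering and function name are my own choices.

```python
from typing import List, Tuple


def solve_hanoi(n: int, src: int = 0, dst: int = 2, aux: int = 1) -> List[Tuple[int, int]]:
    """Return the optimal list of (source, target) moves for n disks."""
    if n == 0:
        return []
    return (
        solve_hanoi(n - 1, src, aux, dst)    # park the n-1 smaller disks on aux
        + [(src, dst)]                       # move the largest disk to its target
        + solve_hanoi(n - 1, aux, dst, src)  # bring the smaller disks back on top
    )


print(solve_hanoi(2))
print(len(solve_hanoi(10)))
```

Executing a procedure like this requires no search at all, only careful bookkeeping over many steps, which is what makes the collapse at the same complexity so notable.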

Claude-3.7-Sonnet also handles the Tower of Hanoi puzzle much better than River Crossing: it gets far into a Tower of Hanoi solution before making a mistake, yet fails within just a few moves on River Crossing instances that need far fewer moves to solve.

This is likely because the River Crossing problem is not very popular on the web and might not have formed a large part of the training data that Claude has seen.

We are quite far away from AGI. Should we even talk about it when we have not solved the basics of intelligence in LLMs?

What are your thoughts on it? Let me know in the comments below.

Further Reading

Author’s article titled ‘LLMs Don’t Really Understand Math’ published in ‘Into AI’

Author’s article titled ‘You Don’t Need ‘Thinking’ In LLMs To Reason Better’ published in ‘Into AI’

Research paper titled ‘Reasoning Models Don’t Always Say What They Think’ published by Anthropic

Source Of Images

All images used in the article are created by the author or obtained from the original research paper unless stated otherwise.